If you’re at all familiar with Varnish, you know it improves content delivery performance by storing a copy of your content in cache, so that subsequent requests for that content are served from the cached copy.

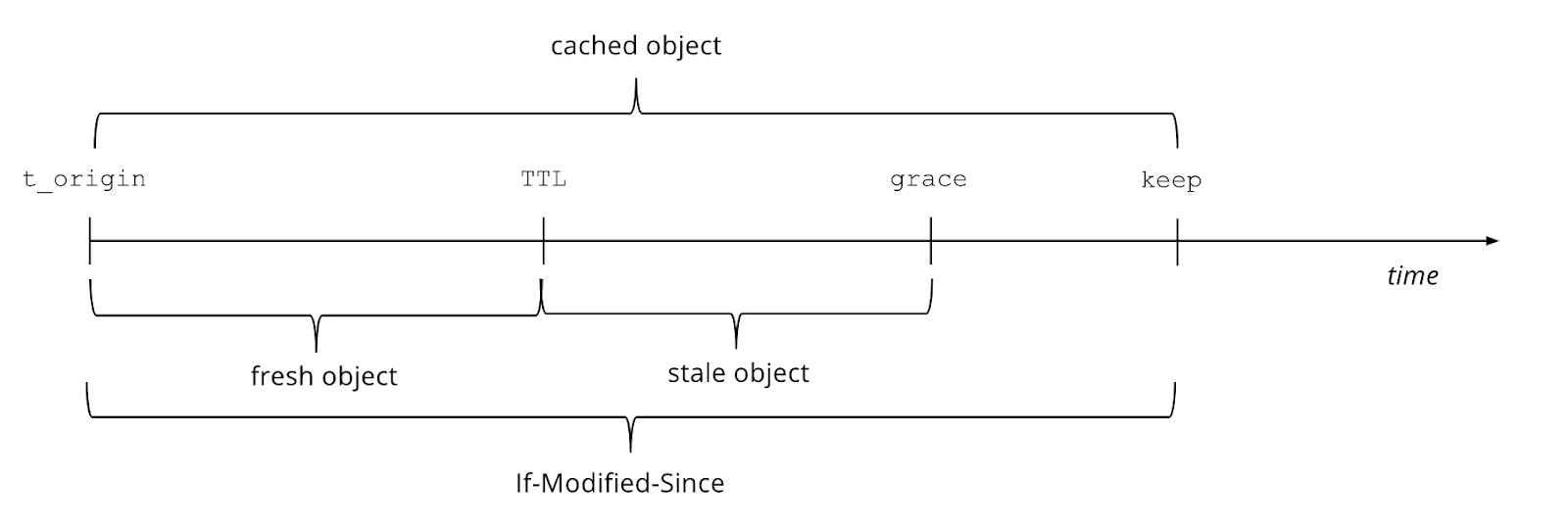

Every copy of the content (aka object) stored in cache comes with a time to live (TTL), which defines how long an object can be considered fresh, or live, within the cache.

On top of the defined TTL we can add a “grace” period, which triggers the stale-while-revalidate behavior. Additionally, a “keep” period can be set to enable conditional requests to the origin.
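As a rough sketch, all three periods can be set per object in vcl_backend_response. The snippet assumes VCL 4.1 syntax, the backend address is a hypothetical placeholder, and the durations are arbitrary values chosen only for illustration:

```vcl
vcl 4.1;

# Hypothetical origin server: adjust host and port to your setup
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_backend_response {
    # Fresh for 2 minutes: served straight from cache
    set beresp.ttl = 2m;
    # Once the TTL has expired, keep serving the stale copy for up to
    # 1 hour while a fresh copy is fetched in the background
    set beresp.grace = 1h;
    # Retain the object for another day after TTL + grace so it can be
    # revalidated with a conditional request (If-Modified-Since / If-None-Match)
    set beresp.keep = 1d;
}
```

Note that the keep period only pays off if the origin sends validators such as ETag or Last-Modified; without them there is nothing to revalidate against.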

While “grace” and “keep” both play important roles in keeping content available at all times, the ultimate goal of a cache is to serve the most up-to-date version of a piece of content. That is why understanding how to set and override a TTL is fundamental to caching.

How does Varnish handle TTLs?

When fetching content to be cached, Varnish first checks whether the origin server has set any TTL-related headers (such as Cache-Control or Expires). If it has, Varnish honors them, although we can still change the resulting TTL via VCL if we need to.

If no TTLs have been set by the origin server, then Varnish will apply its own default TTL.

Again, regardless of whether the TTL comes from the origin server or from Varnish’s default, we can always change it using VCL.
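A minimal sketch of that logic, assuming you only want to step in when the origin sent no caching headers at all (the 1h value is just a placeholder):

```vcl
sub vcl_backend_response {
    # Honor the origin's TTL when it sent Cache-Control or Expires;
    # otherwise replace Varnish's default TTL with our own value
    if (!beresp.http.Cache-Control && !beresp.http.Expires) {
        set beresp.ttl = 1h;
    }
}
```

Because this subroutine does not return, Varnish’s built-in vcl_backend_response logic still runs after it.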

How do we set or override the TTL?

- Via HTTP headers:

The best practice here is to assign a TTL to each piece of content as it is generated, which means setting the Cache-Control headers at the origin server.

Cache-Control headers define whether the content can be cached and, if so, for how long.

Section 5 of the HTTP caching RFC (RFC 7234, now superseded by RFC 9111) lists all the caching-related headers.
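To illustrate the “whether it can be cached” part, here is a simplified sketch of the kind of Cache-Control check Varnish’s built-in VCL performs. It is an approximation, not the exact built-in code (which also looks at Set-Cookie, Surrogate-Control and Vary), and the 2-minute window mirrors the built-in default:

```vcl
sub vcl_backend_response {
    # The origin says this response must not be stored in a shared cache
    if (beresp.http.Cache-Control ~ "(?i)(no-cache|no-store|private)") {
        # Remember this decision for 2 minutes ("hit-for-miss"), so that
        # subsequent requests are fetched from the origin without queueing
        # behind each other for a cache fill
        set beresp.ttl = 120s;
        set beresp.uncacheable = true;
        return (deliver);
    }
}
```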

- Via VCL:

If you take the VCL approach, it is important to know that TTLs should be set while the new object is being inserted into the cache, which is what happens in the vcl_backend_response subroutine.

More generally, vcl_backend_response is the last point at which you can apply caching rules before the object is inserted into the cache, after which it can no longer be changed.

My suggestion is to always start with a default value that suits most of your content, and only then fine-tune the TTLs of specific objects.

For example, if we are caching video content, we will most likely have both live and VoD content, and perhaps a few static assets too. Of the three, live content is the most time-sensitive: we need to stream it as soon as the consumer requests it, with the lowest possible latency. It is also the content that expires soonest, because once it has been consumed it is, by definition, no longer live.

For live content we set a very low TTL; for VoD the TTL can be somewhat higher, since VoD content doesn’t really change over time; and for static assets, which rarely change, we can raise it considerably.

Here’s how the VCL would look:

```vcl
sub vcl_backend_response {
    # By default, set a very low TTL so that live content is cached
    # for just about the right amount of time
    set beresp.ttl = 3s;

    # If the content is VoD, bump the TTL
    if (bereq.url ~ "^/VoD") {
        set beresp.ttl = 10s;
    }

    # If the content is static (here: .txt files), cache it for 4 weeks
    if (bereq.url ~ "\.txt$") {
        set beresp.ttl = 4w;
    }
}
```

Of course you can adjust the above VCL snippet to your needs.

- Via varnishadm

Let’s say neither your origin server nor your VCL sets a TTL. In that case, the fallback that kicks in is Varnish’s default TTL, a runtime parameter of the Varnish manager process that you can inspect and change with varnishadm.

Typing “varnishadm param.show” on the command line lists the parameters that define Varnish’s behavior. Among them is default_ttl, which is set to 120 seconds by default: every object for which neither the origin nor your VCL defines a TTL is assigned a two-minute TTL.

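You can change this default by setting the default_ttl parameter to your desired value, for example with “varnishadm param.set default_ttl 3600”. A value set this way is lost when Varnish restarts, so to make it permanent pass “-p default_ttl=3600” (or the shorthand “-t 3600”) to varnishd at startup.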

Whichever of the three options you choose, each will work just fine. Just make sure your TTL strategy is right for your use case, and if you do set a wrong TTL, don’t despair: we also have very good cache invalidation strategies.
