Varnish was initially made for website acceleration. We started out using a memory-mapped file to store objects in. It had some problems associated with it and was replaced with a storage engine that relied on malloc to store content. While it usually performed better than the memory-mapped file, performance suffered as the content grew past the limits imposed by physical memory.

Most websites are fine with this. Their content is measured in gigabytes and a server with 256GB of RAM isn’t as costly as it once was. However, some sites had content that grew into the terabytes or even petabytes. Varnish didn’t perform very well in these situations.

A year ago we started working on a new storage engine. These kinds of long-term projects feel pretty risky. You make a bunch of assumptions and then you go and spend a year before you see if your assumptions were correct.

Assumptions

- Using write() instead of implicitly writing to a memory map would lead to better performance.

- We had an idea for an allocation approach that was resilient to fragmentation. Fragmentation can degrade the performance of the file backend over time.

- The somewhat simplistic LRU eviction algorithm used in Varnish can be replaced, increasing cache hit rates.

In late September the code was feature complete and we could run benchmarks. I ran the first benchmarks on my laptop in a virtual machine. The benchmarks were very simple - I would just force Varnish to use the storage a lot by having a big dataset and little memory available to the VM. We saw a significant performance increase, somewhere in the 20-30% range. This was excellent news and we knew we were on the right track.

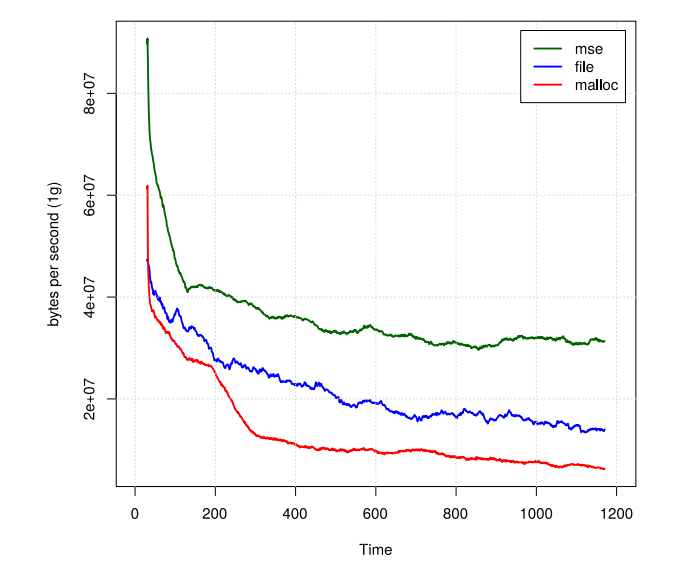

Martin spent quite a bit of time devising performance tests that were a bit more realistic. We needed tests that were able to measure the performance of our code in a reproducible and quick manner. The tests were done on a VM with one gigabyte of memory and a two-gigabyte file for storage on a four-disk RAID set using regular hard drives. Hard drives have somewhat limited IO capacity and this exaggerates the performance characteristics of a more modern storage system.

As you can see, we’ve managed to outperform both file and malloc by a significant margin. Malloc suffers quite a bit here as the swap performance on Linux is abysmally bad.

Design and implementation

Let’s go through the implementation and see what we’ve done.

Replacing the current LRU-based eviction algorithm.

The Varnish Massive Storage Engine comes with a cache eviction algorithm. Currently Varnish Cache uses LRU. When the cache is full and Varnish needs more storage it will look for the object in cache that hasn’t been touched for the longest time. That object will be evicted, making room for new objects.

The weakness of this algorithm is that it doesn’t distinguish between an object that has been accessed once during the last hour and one that has been accessed ten thousand times. An object will be moved from the absolute back of the line to the absolute front of the line as the result of a single request.
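To make the weakness concrete, here is a minimal LRU sketch in Python. It is an illustration only, not the Varnish implementation; the class and key names are made up for the example. An object with ten thousand hits is still evicted as soon as two one-off objects arrive after it.

```python
from collections import OrderedDict


class LRUCache:
    """Plain LRU: the least recently touched key is evicted first."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.items = OrderedDict()  # oldest entry first, newest last

    def get(self, key):
        if key not in self.items:
            return None
        # A single hit moves the object to the very front of the line.
        self.items.move_to_end(key)
        return self.items[key]

    def put(self, key, value):
        if key in self.items:
            self.items.move_to_end(key)
        self.items[key] = value
        while len(self.items) > self.capacity:
            self.items.popitem(last=False)  # drop the least recently used


cache = LRUCache(capacity=2)
cache.put("popular", "A")
for _ in range(10_000):
    cache.get("popular")        # heavily used object

cache.put("one_off_1", "B")     # two objects nobody asks for again
cache.put("one_off_2", "C")

popular_after = cache.get("popular")  # None: the hit count did not protect it
```

LRU only remembers the order of the last touch, so the access count never enters the eviction decision.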

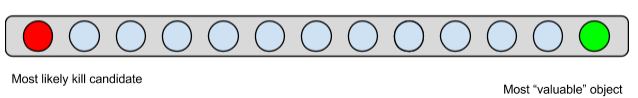

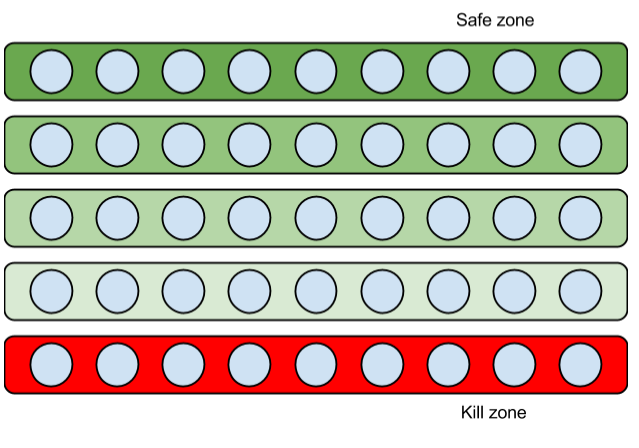

The Varnish Massive Storage Engine uses a ‘Least Frequently Used/Least Recently Used Hybrid’ cache eviction algorithm, providing smarter selection criteria in the cache. Objects are placed in tiers. The “bottom” tier holds the candidates that are in line for purging when space is needed. The higher up an object resides, the lower its chance of being purged.

Each ‘hit’ an object receives causes it to move up a tier. So, multiple accesses are required to move the object away from the “kill zone”.

We expect this to have a positive effect on cache hit rates. The cache hit rates will of course depend a lot on actual traffic patterns and will vary. The implementation is pretty close to some very well-regarded cache eviction algorithms and we're confident it will improve performance under most workloads.
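The tier idea can be sketched in a few lines of Python. This is a simplified model under our own assumptions, not the MSE code: the number of tiers (three), the absence of any demotion, and all names are hypothetical.

```python
from collections import OrderedDict


class TieredCache:
    """LFU/LRU hybrid sketch: hits promote an object one tier at a time;
    eviction takes the least recently used object of the lowest tier."""

    def __init__(self, capacity, tiers=3):
        self.capacity = capacity  # assumed to be at least 1
        self.tiers = [OrderedDict() for _ in range(tiers)]  # tier 0 = "kill zone"
        self.tier_of = {}  # key -> index of the tier currently holding it

    def get(self, key):
        tier = self.tier_of.get(key)
        if tier is None:
            return None
        value = self.tiers[tier].pop(key)
        new_tier = min(tier + 1, len(self.tiers) - 1)  # move up one tier per hit
        self.tiers[new_tier][key] = value  # appended last = most recently used
        self.tier_of[key] = new_tier
        return value

    def put(self, key, value):
        if key in self.tier_of:
            self.tiers[self.tier_of[key]][key] = value  # update in place
            return
        while len(self.tier_of) >= self.capacity:
            self._evict()
        self.tiers[0][key] = value  # new objects start at the bottom
        self.tier_of[key] = 0

    def _evict(self):
        for tier in self.tiers:  # lowest tier first
            if tier:
                key, _ = tier.popitem(last=False)  # oldest in that tier
                del self.tier_of[key]
                return key
        raise KeyError("cache is empty")


cache = TieredCache(capacity=2)
cache.put("popular", "A")
cache.get("popular")
cache.get("popular")            # two hits: now in the top tier

cache.put("one_off_1", "B")
cache.put("one_off_2", "C")     # evicts one_off_1 from the bottom tier
```

With the same traffic that pushes a popular object out of a plain LRU cache, the popular object survives here because the one-off objects never leave the bottom tier.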

The algorithm is similar to that used in the Linux kernel page cache and there is lots of research published on the performance of this algorithm.

Segmented layout

The Varnish Massive Storage Engine splits the available storage space into segments. When a thread needs to allocate storage it is granted an exclusive lock on a segment. This guarantees that a thread that frees space gets to use that space itself, eliminating the storage exhaustion problems that have occurred in previous versions of Varnish.

Since there are multiple segments available for allocation, lock contention in allocation is kept to a minimum.

Multiple allocations done by the same thread are likely to end up in the same segment, which increases the likelihood of them being placed physically close to each other on rotating media.
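A rough Python sketch of the segment idea follows. It assumes a simple bump allocator per segment and a per-thread "preferred segment"; both are our simplifications for illustration, and none of the names come from the MSE source.

```python
import threading


class Segment:
    def __init__(self, index, start, size):
        self.index = index
        self.end = start + size
        self.next_free = start
        self.lock = threading.Lock()  # exclusive lock for this segment only

    def allocate(self, nbytes):
        """Bump allocation; the caller must hold self.lock."""
        if self.next_free + nbytes > self.end:
            return None
        offset = self.next_free
        self.next_free += nbytes
        return offset


class SegmentedStore:
    def __init__(self, total_size, segment_count):
        size = total_size // segment_count
        self.segments = [Segment(i, i * size, size) for i in range(segment_count)]
        self._local = threading.local()  # remembers each thread's last segment

    def allocate(self, nbytes):
        """Return (segment_index, offset), or None if nothing fits right now."""
        preferred = getattr(self._local, "segment", None)
        candidates = [s for s in self.segments if s is not preferred]
        if preferred is not None:
            candidates.insert(0, preferred)  # try the thread's own segment first
        for seg in candidates:
            if not seg.lock.acquire(blocking=False):
                continue  # segment is busy: move on instead of waiting
            try:
                offset = seg.allocate(nbytes)
            finally:
                seg.lock.release()
            if offset is not None:
                self._local.segment = seg
                return seg.index, offset
        return None


store = SegmentedStore(total_size=1024, segment_count=4)
first = store.allocate(100)   # (0, 0)
second = store.allocate(50)   # (0, 100): same segment, right after the first
```

Because a busy segment is skipped rather than waited for, two threads rarely block each other, and because a thread returns to its last segment first, its allocations tend to sit next to each other.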

On hard drives it is important to keep external fragmentation of the storage to a minimum. One can reduce the chance of external fragmentation by pre-allocating the storage with dd(1).
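If you would rather script the pre-allocation, the following Python sketch does roughly what a dd(1) run that copies zeros into the storage file does. The function name, the chunk size and the path in the usage example are placeholders of ours, not anything MSE requires.

```python
import os


def preallocate(path, size_bytes, chunk_size=1024 * 1024):
    """Fill `path` with zeros up to `size_bytes`, one chunk at a time.

    Writing the whole file up front gives the filesystem a chance to lay
    it out before the cache starts using it.
    """
    zeros = bytes(chunk_size)
    remaining = size_bytes
    with open(path, "wb") as f:
        while remaining > 0:
            n = min(chunk_size, remaining)
            f.write(zeros[:n])
            remaining -= n
        f.flush()
        os.fsync(f.fileno())  # make sure the data has reached the disk
    return os.path.getsize(path)
```

For example, `preallocate("/path/to/storage.bin", 2 * 1024**3)` would create a two-gigabyte file at that (hypothetical) path.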

Blocks are buffered internally so all writes to the storage system happen in complete blocks. This removes the implicit read that would otherwise be needed to merge a partial write into an existing block.
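A small Python sketch of this kind of block buffering is shown below. The block size, the zero padding of the final block and the class name are assumptions made for the example; the real engine may handle the tail differently.

```python
import io


class BlockBufferedWriter:
    """Collects small writes and only hands complete blocks to the device."""

    def __init__(self, raw, block_size=4096):
        self.raw = raw
        self.block_size = block_size
        self.pending = bytearray()
        self.write_sizes = []  # sizes of the writes actually issued (for the demo)

    def write(self, data):
        self.pending += data
        # Largest multiple of block_size currently sitting in the buffer.
        full = len(self.pending) - len(self.pending) % self.block_size
        if full:
            self._emit(bytes(self.pending[:full]))
            del self.pending[:full]

    def flush(self):
        """Pad the final partial block with zeros so it is written whole."""
        if self.pending:
            padding = self.block_size - len(self.pending)
            self._emit(bytes(self.pending) + b"\0" * padding)
            self.pending.clear()

    def _emit(self, chunk):
        self.raw.write(chunk)
        self.write_sizes.append(len(chunk))


raw = io.BytesIO()
writer = BlockBufferedWriter(raw, block_size=8)
writer.write(b"abc")        # too small: stays in the buffer
writer.write(b"defghij")    # completes one block, "ij" stays buffered
writer.flush()              # pads and writes the last block
```

Every write that reaches `raw` is a whole number of blocks, so the layer below never has to read an existing block just to patch part of it.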

Eliminating internal fragmentation

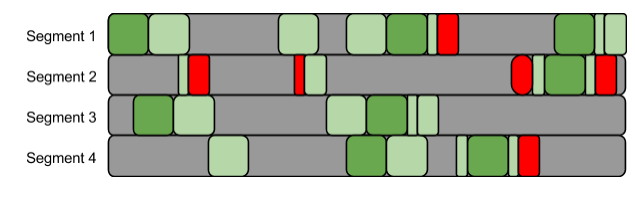

We have known for some time that if Varnish uses the file backend and is kept running for a long time with a nearly full cache, the cache will start to suffer from fragmentation. It starts looking like Swiss cheese. Lots of little holes are scattered all over the cache, making allocation of free space time consuming and inefficient.

In other software, techniques for fighting fragmentation are quite tedious. Taking advantage of the fact that Varnish is a cache and letting it actually eliminate objects that are blocking new allocations simplifies the allocation process. After all, an object that gets eliminated can be re-fetched from the backend if it is re-requested. This can keep overall performance high and greatly reduces complexity in the algorithms used.

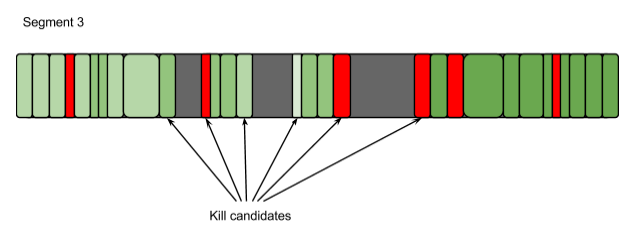

The Varnish Massive Storage Engine eliminates fragmentation by selectively taking out objects that are placed adjacent to each other. It will look for holes in the current cache and evaluate the objects adjacent to each hole. If it needs to kill those objects it will do so, making room in the cache. The freed space is then reserved for the thread that needs it, making sure that the threads that free up space actually get to use it.

When picking objects for elimination, the Varnish Massive Storage Engine will prefer objects that are not frequently used. The more frequently the object is accessed the less likely it is to be evicted from cache.
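The selection can be pictured with the following Python sketch. It models the cache as a flat list of extents and grows each hole towards its less frequently used neighbour until the request fits. This is a greedy illustration under our own assumptions (hit counts as the only cost, hypothetical names), not the algorithm as implemented in MSE.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Extent:
    size: int
    key: Optional[str] = None  # None marks a hole
    hits: int = 0


def plan_eviction(layout, needed):
    """Return (cost, first_index, last_index, victims) for the cheapest
    contiguous run around a hole that can hold `needed` bytes, or None."""
    best = None
    for i, extent in enumerate(layout):
        if extent.key is not None:
            continue  # only start from existing holes
        lo = hi = i
        total, cost, victims = extent.size, 0, []
        while total < needed:
            left = layout[lo - 1] if lo > 0 else None
            right = layout[hi + 1] if hi + 1 < len(layout) else None
            if left is None and right is None:
                break  # the whole store is not big enough
            # Grow towards the neighbour that is used less often.
            if right is None or (left is not None and left.hits <= right.hits):
                lo -= 1
                chosen = left
            else:
                hi += 1
                chosen = right
            total += chosen.size
            if chosen.key is not None:
                cost += chosen.hits
                victims.append(chosen.key)
        if total >= needed and (best is None or cost < best[0]):
            best = (cost, lo, hi, victims)
    return best


layout = [
    Extent(4, "hot", hits=900),
    Extent(2),                      # hole
    Extent(3, "cold", hits=1),
    Extent(4, "warm", hits=50),
    Extent(1),                      # hole
    Extent(4, "hot2", hits=700),
]
plan = plan_eviction(layout, needed=5)
```

Here the first hole plus its rarely used neighbour "cold" is enough for five bytes, so "cold" is the only victim and the frequently used objects stay where they are.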

Keeping the good stuff

Varnish Plus with the Varnish Massive Storage Engine is, at its core, the same Varnish that you know. The built-in load balancer, request collapsing, ESI, gzip and support for conditional requests are all still there.

Supporting three-tier caching

When presenting the Massive Storage Engine we were asked by some CDNs if it was possible to support a three-tier caching hierarchy: physical memory for the most commonly accessed objects, solid state drives for the objects that are somewhat less frequently accessed, and hard disks for the long-tail content. Hard disks are still the cheapest form of storage there is, and for a library with petabytes of content you want your caches to be as large as possible in order to keep transit costs low and performance high.

Since MSE takes great care not to fragment more than it needs to, it performs very well on hard drives. In order to support three tiers we needed to find a way to move objects between hard drives and flash drives. Doing this explicitly in Varnish would lead to the kernel getting the wrong idea about which areas of the disk are accessed more frequently than others.

We had been keeping an eye on dmcache and bcache for a while and we thought this was the perfect way to implement it. So, when using a hard drive / solid state drive combination we tried using MSE on top of a filesystem accelerated with dmcache. The results were very encouraging.

Availability

The Massive Storage Engine is a feature that is exclusive to Varnish Plus. Some of the code will most likely make it into Varnish Cache itself at some point, mainly the LFU and the fragmentation avoidance, but the disk storage will be kept as a Varnish Plus exclusive.

If you want to evaluate MSE I encourage you to send an email to our sales organization and they will set you up with a trial account. In addition to MSE, this trial will give you access to other great features, such as the Varnish Administration Console, the Varnish Statistics Engine, the Varnish Tuner and other useful stuff.