Over the last couple of years live streaming has boomed, helped largely by the rise of new technologies such as the cloud, which have made live streaming cheaper, more performant and more reliable.

Delivering live content requires a robust and performant architecture to make sure the end user has an excellent experience, especially as numerous competitors are appearing on the scene.

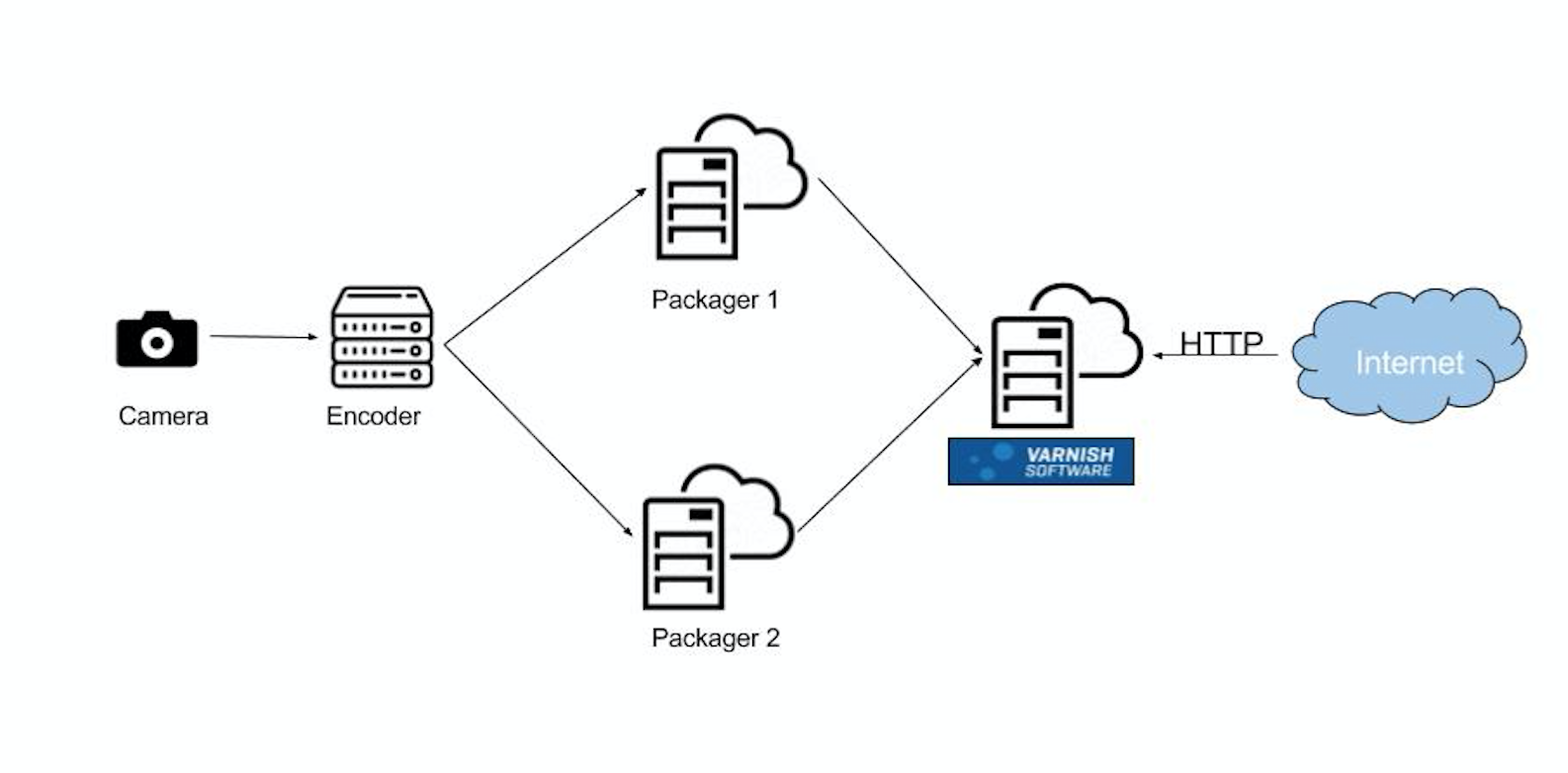

The flexibility Varnish is known for makes it a perfect fit for different use cases. In a streaming scenario (the image above shows what a typical architecture looks like), Varnish should be deployed as close as possible to the end user, where it acts as a cache and at the same time protects the underlying layers.

As you can see the idea is very simple: a camera records the video; this video is then processed and finally it is distributed. Varnish is a must-have for anyone delivering digital content because it is flexible, reliable and, of course, performant.

We now understand where to place Varnish when delivering live content. Please note that the same is true for other types of video and audio content, such as VoD and OTT. We are not going to cover those two specific topics directly in this blog post, but the coming tips can also be used in such contexts.

SOOO...What’s a stream?

A stream is a flow of data made available over time. One of the classic examples of live streaming is a live sports event, such as the final of the World Series of baseball, or the upcoming Super Bowl, which is expected to be watched by more than 100 million viewers.

The challenge of live streaming is that the content to be shared with the consumer is live, hence there are time-bound limitations and obligations to be respected: nobody can put in cache content that hasn’t been created yet. If the football game is at minute 10, there is no way to have minute 11 in cache, because it hasn’t happened yet. At the same time, the live stream needs to keep users happy and satisfied with the service.

When caching live content, there is one important thing to consider: every viewer is watching exactly the same point of the video, which results in the same video segment being requested by every user at the same time. Varnish handles this very well through request coalescing: a single backend fetch is triggered, which protects the backend from strain while the content is delivered to all the synchronized clients.

By default, Varnish also offers a streaming mode that transfers the response to the client while it’s being fetched from the backend.
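
As a minimal sketch of where this setting lives: streaming is controlled per object through `beresp.do_stream` in `vcl_backend_response`. The backend address and the `/live/` URL prefix are assumptions for illustration only. Since streaming is already on by default, the explicit assignment here simply documents the intent.

```vcl
vcl 4.0;

# Hypothetical origin address, used only to make the sketch loadable.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_backend_response {
    # Assumed URL prefix for live content.
    if (bereq.url ~ "^/live/") {
        # Pass the response to waiting clients while it is still
        # being fetched from the backend (this is the default).
        set beresp.do_stream = true;
    }
}
```

Setting `beresp.do_stream` to `false` instead would make Varnish fetch the whole object before delivering it to the client.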

Another important feature to consider is prefetching. Here we are talking about asynchronous requests (fire and forget), which can be used to prewarm Varnish with the next video segment.

This can now be achieved in VCL using vmod-http.

The VCL looks like this:

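    vcl 4.0;

    import http;

    sub vcl_recv {
        # Only prefetch for live content.
        if (req.url ~ "^/live/") {
            # Prepare a new HTTP request based on the client request.
            http.init(0);
            http.req_copy_headers(0);
            http.req_set_method(0, "HEAD");
            # Target the next segment, then send without waiting for the response.
            http.req_set_url(0, http.prefetch_next_url());
            http.req_send_and_finish(0);
        }
    }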

We check if the requested URL is indeed for live content, and if it matches the regular expression then we:

- Initialize the new HTTP request, which will be used to prefetch the next segment

- Copy the request headers in the new HTTP request we have initialized

- Set the request method to HEAD

- Set the URL to be prefetched: we generate the next URL by incrementing the first number sequence found and send it back to Varnish. If no number sequence is found, the request is skipped

- Finally we send the request for the next segment.

With barely six lines of VCL you can prefetch the next video segment and make sure your cache is always warm and ready to deliver content.

If you wish to learn more about live streaming, register to join us for our upcoming webinar, Six secrets to successful live streaming.