"Can Varnish go to the backend and check the content freshness. FOR.EVERY.SINGLE.REQUEST?"

Sometimes, someone wants to do something unusual with Varnish, and I tend to reply like a true developer with: "Let me explain to you why you don't need it". The problem with this is that we don't operate in an ideal world, and most of the time, architecture isn't dictated by what should be done, but by what must work NOW! (Here's a depressing read, if you need one.)

I'm telling you this because in this post, we are going to solve a problem in a twisted way, but given your specific requirements, it may be the best way. And along the way, I obviously won't resist the urge to explain why it's twisted and what the other solutions are, but feel free to skip those parts.

The issue, and the issue with the issue

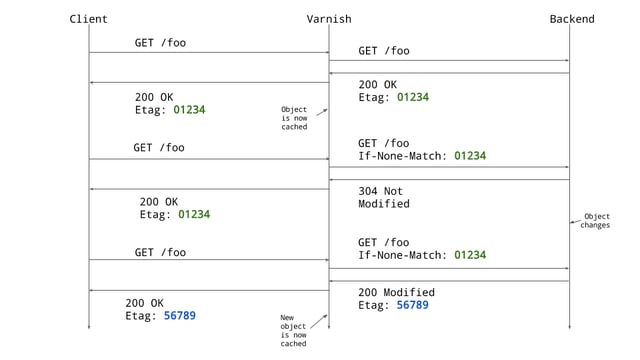

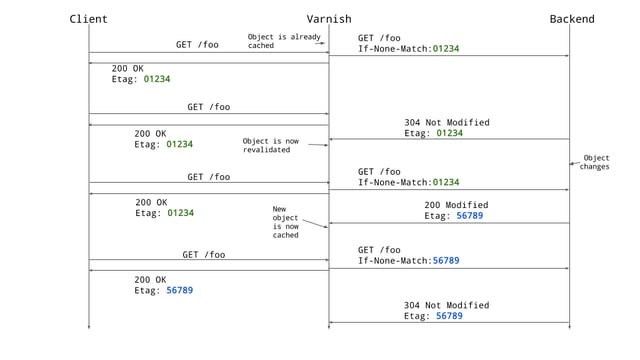

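The Etag header is an opaque string, returned by the server, that should uniquely identify a version of an HTTP object. It's used for content validation, allowing the client to ask the server: "I have this version of the object, please either tell me it's still valid or send me the new object". Concretely, the client sends the Etag it holds in an If-None-Match request header, and the server answers either 304 Not Modified, with no body, or 200 with the new object.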

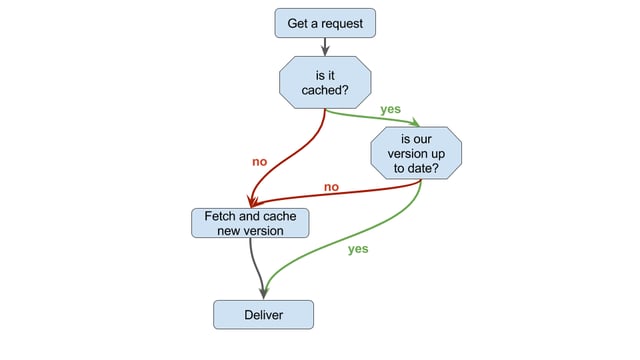

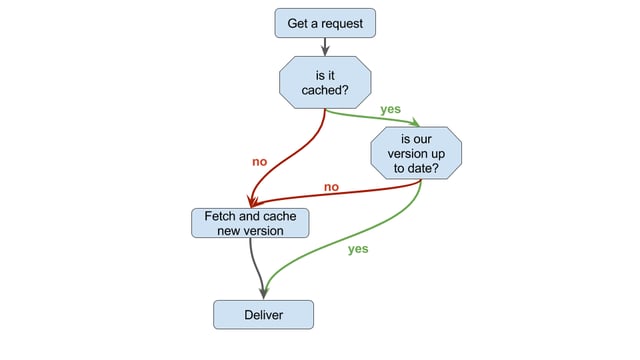

What we would like is for Varnish to leverage this Etag header and systematically ask the backend if the object we are about to deliver is fresh, something along those lines:

I have a conceptual problem with this: it goes against the cache's goal of shielding your server. With the proposed setup, we have to bother the backend for every request, even if 304 responses (or something similar) would largely reduce the load, since they have no body.

And there's also a functional problem: the check is synchronous, so if your backend goes down or slows down, your users will suffer delays, which, again, goes against the idea of a caching layer.

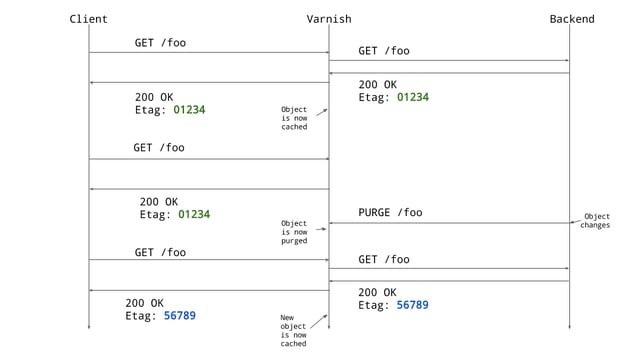

The purge, actually a good movie solution

We need to backtrack a bit here, and think about what our issue really is. It's not that we want to go to the backend all the time, but rather that we don't want to deliver outdated content - a commendable goal. And the backend knows about content freshness, so it's definitely the right source of information, but what if, instead of asking it, we could make it tell us directly when something changes?

Using purges, bans or xkey, it's possible to remove content from the cache based on URL, regex or semantic tags. If your backend is able to trigger an HTTP request when its content changes, you're good to go! Many CMSs do it, such as eZ Publish, WordPress or Magento, among others.
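As a rough idea of the Varnish side, here is a minimal VCL 4.0 fragment that accepts PURGE and BAN requests from the backend. The `invalidators` ACL, its address, and the `x-ban-url` request header carrying the regex are hypothetical names chosen for this sketch; adapt them to whatever your backend actually sends.

```vcl
# Hypothetical ACL: hosts allowed to invalidate content (e.g. the CMS).
acl invalidators {
    "127.0.0.1";
}

sub vcl_recv {
    if (req.method == "PURGE" || req.method == "BAN") {
        if (client.ip !~ invalidators) {
            return (synth(405, "Not allowed"));
        }
        if (req.method == "PURGE") {
            # Drop the cached object (and its variants) for this host + URL.
            return (purge);
        }
        # BAN: invalidate every object on this host whose URL matches
        # the regex sent in the hypothetical x-ban-url header.
        ban("req.http.host == " + req.http.host +
            " && req.url ~ " + req.http.x-ban-url);
        return (synth(200, "Ban added"));
    }
}
```

A purge removes one exact object right away, while a ban built on `req.*` conditions, as here, is checked against cached objects when they are looked up, which is what makes regex invalidation affordable.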

With this, you can set your TTLs to weeks or months to ensure super high hit-ratios, almost never fetching from the backend, and still deliver up-to-date content, so this seems to be the perfect solution. So why isn't everybody using it?

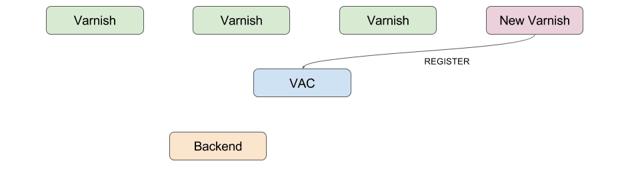

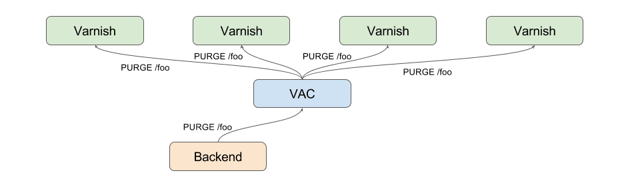

The first obstacle, obviously, is that the backend needs to be aware of all the Varnish caches to purge. This generally means that adding a new Varnish server requires reconfiguring the backend. Using the Varnish Administration Console (and the bundled Super Fast Purger), this becomes a non-issue because new Varnish servers register with it when created, allowing the VAC to know about all the caches:

Then, instead of having the backend purge the Varnish boxes directly, it sends a single purge request to the VAC, which broadcasts it:

If your backend supports it, this is definitely the best option.

Saving grace

But your backend may not be able to trigger those HTTP requests, or you may not have access to said backend. In that case, the previous solution is sadly out of your reach.

Fret not though, I do have another choice to offer you, and it doesn't involve cache invalidation; it's actually super simple:

```vcl
sub vcl_backend_response {
    set beresp.ttl = 0s;    /* set ttl to 0 seconds */
    set beresp.grace = 1d;  /* set grace to 1 day */
    set beresp.keep = 1d;   /* keep the content for 1 extra day,
                             * allowing content revalidation */
    return (deliver);       /* bypass builtin.vcl,
                             * avoiding Hit-for-Pass */
}
```

Grace is Varnish's implementation of HTTP's stale-while-revalidate mechanism. Very simply, it is the period of time during which Varnish can keep an expired object and still serve it if it has nothing better to offer. And since Varnish 4.0, grace is fully asynchronous: the backend fetch happens in the background, taking advantage of the If-None-Match header to minimize bandwidth.
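For reference, the grace decision lives in `vcl_hit`. The fragment below is a close paraphrase of the built-in `vcl_hit` shipped with Varnish 4.0/4.1, reproduced to show where grace kicks in. Later versions replace `return (fetch)` with `return (miss)`, so check the builtin.vcl of the version you run.

```vcl
sub vcl_hit {
    if (obj.ttl >= 0s) {
        # Still fresh: a plain hit, deliver it.
        return (deliver);
    }
    if (obj.ttl + obj.grace > 0s) {
        # Expired but within grace: deliver the stale copy;
        # Varnish refreshes it with a background fetch.
        return (deliver);
    }
    # Beyond grace: fetch, and deliver once the result arrives.
    return (fetch);
}
```

With the `ttl = 0s` and `grace = 1d` settings above, every hit during that day takes the second branch.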

In the (extreme) VCL example above, the object gets a TTL of 0 seconds, meaning it's already expired, so Varnish fetches a new version as soon as the object is requested, but does so in the background. At this point, you may wonder how that could be awesome: by making the fetch asynchronous, we just robbed ourselves of on-time updates, right? That's correct, but we gain something that MAY be more important: request coalescing.

For a given object (URL), Varnish triggers only one background fetch at a time, which helps your backend survive sudden surges of traffic, notably when responses take a long time to generate.

Buuuuuuuuuuuut, you could object that if your object changes and you can't afford to deliver a single out-of-date response, this isn't a viable solution. And again, you'd be right. I would, however, ask: can you ever really ensure this? The object may very well change on the backend right after the response is sent and before it is received. This is part of the weakness of HTTP, and we have to be aware of it.

With that said...

A man's gotta do what a man's gotta do

At the risk of being Horrible, I need to Hammer this point home: make sure this is your last resort, because you won't be taking the easy path.

We now arrive where Varnish shines: uncharted territory. Varnish is great because it's fast and efficient, but above all, it refuses to define policies. This is the whole idea behind the VCL: the user can have almost full control over what's going on, instead of being limited to the path chosen by the tool.

And that allows us to abuse the system and twist its arm to do our bidding. And we can do it with a pure VCL solution! But before we do that, let's have a brief reminder of what the VCL is and does (skip that one if you already know).

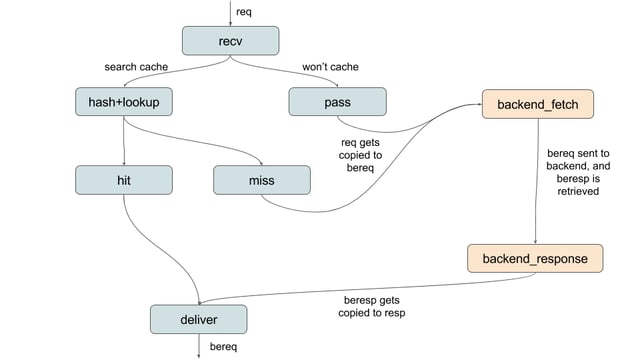

The Varnish Configuration Language basically maps to a state-machine processing requests from the moment they are received to the moment they are delivered. At each step, the VCL is called, asked what should be done (tweak headers, remove query strings, etc.), and what the next step is. A simple representation of the state-machine could be as shown in this flowchart:
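To make the state machine a bit more concrete, here is a bare VCL 4.0 skeleton listing the main subroutines roughly in the order a request visits them, with the return actions each one accepts in Varnish 4.0 noted in comments. The backend address is a placeholder. The bodies are left empty, so the built-in VCL makes every transition.

```vcl
vcl 4.0;

# Placeholder backend, for illustration only.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# Client side
sub vcl_recv {
    # Request received. Next step: hash, pass, pipe, purge, synth.
}

sub vcl_hash {
    # Build the cache key. Next step: lookup.
}

sub vcl_hit {
    # Object found. Next step: deliver, fetch, pass, restart, synth.
}

sub vcl_miss {
    # Object not found. Next step: fetch, pass, restart, synth.
}

sub vcl_pass {
    # Bypassing the cache. Next step: fetch, restart, synth.
}

# Backend side
sub vcl_backend_fetch {
    # Request about to leave for the backend. Next step: fetch, abandon.
}

sub vcl_backend_response {
    # Backend answered. Next step: deliver, retry, abandon.
}

# Client side again
sub vcl_deliver {
    # Response about to be sent. Next step: deliver, restart, synth.
}
```

`restart`, available from `vcl_hit`, `vcl_miss`, `vcl_pass` and `vcl_deliver`, is the transition that sends the request back to `vcl_recv`.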

The problem is that it doesn't really map onto the flowchart we came up with at first:

But, no worries, we'll make it work. To do so, we'll use restarts extensively, allowing us to go back to vcl_recv and start processing the request again without resetting it. We basically have two paths to cover:

MISS, object is not in cache:

- proceed as usual: we fetch the object, put it in cache and deliver it, and it will obviously be fresh. Yes, that was the easy one.

HIT, object is in cache:

- save the Etag.

- restart, because we need to go to the backend.

- pass, because we don't necessarily want to put the object in cache.

- set the method to HEAD, because we don't care about the body, only the Etag header.

- if the backend replies with an Etag differing from the one we have, kill the object.

- restart.

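- once there, just proceed as normal: the lookup either finds the still-valid object or misses because it was killed, and fetches the new one.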

The HIT path will look like this when mapped on the state-machine.

Note: we could use If-None-Match and test the status code (304 or 200) instead of comparing Etags, but we'd still want to use HEAD to make sure only headers are sent.
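Here is a sketch of that variant, meant to replace `vcl_backend_fetch` and `vcl_backend_response` in the VCL that follows while keeping its `x-state` routing and the `etag` request header saved in `vcl_hit`. It assumes the backend honors `If-None-Match` on HEAD requests and answers 304 when the Etag still matches.

```vcl
sub vcl_backend_fetch {
    if (bereq.http.x-state == "backend_check") {
        # Headers only, and ask the backend to validate our copy.
        set bereq.method = "HEAD";
        set bereq.http.If-None-Match = bereq.http.etag;
    }
}

sub vcl_backend_response {
    if (bereq.http.x-state == "backend_check") {
        # 304: the cached copy is still valid, nothing to do.
        # 200: the content changed, so ban the cached copy.
        # Any other status (e.g. a 5xx) leaves the cache untouched.
        if (beresp.status == 200) {
            ban("obj.http.etag == " + bereq.http.etag);
        }
    }
}
```

Banning only on a 200 means a failing backend never evicts content, which stays closer to the spirit of a caching layer.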

And this is where things get a bit ugly. Since we go through some steps multiple times, we have to keep track of the state we are in and route accordingly. And now, here's what you have been waiting for this whole post, the VCL:

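```vcl
sub vcl_recv {
    if (req.restarts == 0) {
        # First pass: look the object up in the cache.
        set req.http.x-state = "cache_check";
        return (hash);
    } else if (req.http.x-state == "backend_check") {
        # Second pass: ask the backend, without caching its answer.
        return (pass);
    } else {
        # Last pass ("valid"): regular lookup; if the object
        # changed, it has been banned and this is a miss.
        return (hash);
    }
}

sub vcl_hit {
    if (req.http.x-state == "cache_check") {
        # Cache hit: save the Etag and restart to check the backend.
        set req.http.x-state = "backend_check";
        set req.http.etag = obj.http.etag;
        return (restart);
    } else {
        return (deliver);
    }
}

sub vcl_backend_fetch {
    if (bereq.http.x-state == "backend_check") {
        # We only care about the headers, not the body.
        set bereq.method = "HEAD";
        set bereq.http.method = "HEAD";
    }
}

sub vcl_backend_response {
    if (bereq.http.x-state == "backend_check") {
        if (bereq.http.etag != beresp.http.etag) {
            # The backend has a new version: kill the cached one.
            ban("obj.http.etag == " + bereq.http.etag);
        }
    }
}

sub vcl_deliver {
    if (req.http.x-state == "backend_check") {
        # Never send the HEAD response to the client:
        # restart for the final lookup instead.
        set req.http.x-state = "valid";
        return (restart);
    }
}
```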

This is obviously a minimal version, and you will have to work a bit to adapt it to your own setup, as always with code using restarts. I also took a few shortcuts for the sake of clarity, such as banning based only on the Etag, or systematically returning, thus bypassing the built-in VCL code.
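As one way to lift the first shortcut, the ban in `vcl_backend_response` could also match the host and URL, so that unrelated objects that happen to share an Etag are not evicted together. This sketch relies on `req.*` conditions, which Varnish evaluates when an object is looked up; that suits this setup, since the restart that follows immediately performs a lookup for the same host and URL.

```vcl
sub vcl_backend_response {
    if (bereq.http.x-state == "backend_check") {
        if (bereq.http.etag != beresp.http.etag) {
            # Only ban the object for this host + URL + old Etag.
            ban("req.http.host == " + bereq.http.host +
                " && req.url == " + bereq.url +
                " && obj.http.etag == " + bereq.http.etag);
        }
    }
}
```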

Faced with such a piece of code, your first instinct should be skepticism. "Does that even work?", you should ask. It does work, and I have the test case to prove it! Actually, I built the VCL directly in the VTC (varnishtest) file, which made development super easy.

A matter of choice

Part of the appeal I find in Varnish is illustrated by this post: every time a strange use case appears, my reptilian brain screams "NAAAAAAAAW, YOU SHOULDN'T DO THAT, THIS IS NOT THE WAY!!!!!", but then the dev neurons fire up, asking, "Yeah, but if I HAD TO, could I do it?". And pretty much invariably, the answer is, "Sure, just put some elbow grease into it, and that'll work".

Varnish is not a complex tool; rather, it is made of many simple cogs, and once you know how they fit together, well, the weird requests become fun challenges.

Through this long post, we've seen how to answer the original question, but, I hope, we also expanded our horizons a bit by touching on quite an array of subjects, such as:

- VCL

- Varnish Administration Console

- Purging

- Objects in grace