In this post, I will explain how to create a highly available, self-routing sharded Varnish Cache cluster. This is similar to a standard sharded cluster with one exception: there is no dedicated routing tier. Each node in the cluster can route a request to the proper destination node on its own.

From an architecture standpoint, a self-routing cluster is an interesting proposition because no load balancer or application router is needed. All we need is DNS. Routing logic, which previously required a dedicated piece of hardware or software, is now distributed across all participating nodes.

Self-routing architecture

In this example, we have three nodes, each with one-third of our content. This is a standard sharded architecture using the request URL as the shard key. The DNS name is:

- content.example.com

This DNS name does a simple A, AAAA, or even CNAME round robin across our three nodes, with the following hostnames:

- node1.example.com

- node2.example.com

- node3.example.com

The architecture will work as depicted in Figure 1 below:

Figure 1: Self-routing cluster using DNS round robin

To set up a self-routing cluster, we first need to define the cluster and content origin:

    vcl 4.0;

    # Required for directors.hash() in vcl_init.
    import directors;

    # The three cluster nodes.
    backend node1 {
        .host = "node1.example.com";
        .port = "80";
    }

    backend node2 {
        .host = "node2.example.com";
        .port = "80";
    }

    backend node3 {
        .host = "node3.example.com";
        .port = "80";
    }

    # The content origin.
    backend content {
        .host = "content-origin.example.com";
        .port = "80";
    }

Next, define a hash director for our cluster:

    sub vcl_init
    {
        new cluster = directors.hash();
        cluster.add_backend(node1, 1);
        cluster.add_backend(node2, 1);
        cluster.add_backend(node3, 1);
    }

We use this director to shard our content: we hash on the URL and route the request to its destination:

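    sub vcl_recv
    {
        # Pick the node that owns this URL.
        set req.backend_hint = cluster.backend(req.url);
        # Store the chosen backend's name as a string for comparison.
        set req.http.X-shard = req.backend_hint;
        if (req.http.X-shard == server.identity) {
            # This node owns the shard: fetch from the origin and cache.
            set req.backend_hint = content;
        } else {
            # Another node owns the shard: pass the request to it.
            return(pass);
        }
    }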

Above, we check whether the hash director points to this node or to another node (the if/else block in vcl_recv). If it points to this node, we set the backend to our content server and Varnish goes through its standard caching logic. If the hash director points to another node, we simply pass the request to that node. The same logic then runs on that node: the request hashes to the node itself, normal caching logic applies, and the response passes back through the originating node.
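
To watch this routing while testing, it can help to tag each response with the node that owns the shard. The following is only a sketch: the X-Shard-Node header name is an assumption made for illustration and is not part of the VCL above.

```vcl
sub vcl_deliver {
    # X-Shard-Node is a hypothetical debugging header.
    # The node that owns the shard sets it first. When the response is
    # passed back through the originating node, the header is already
    # present, so it is left untouched.
    if (!resp.http.X-Shard-Node) {
        set resp.http.X-Shard-Node = server.identity;
    }
}
```

Requesting the same URL repeatedly through content.example.com should then report the same node name each time, whichever node DNS happens to hand out.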

That's it, we now have a self-routing sharded cluster! The full VCL can be found here.

There are some considerations for the code above. First, we are using server.identity to identify the server against our backend list (the comparison with X-shard in vcl_recv). The value of server.identity defaults to the hostname of the node. In some cases, the hostname may not match the VCL backend name, so you can set server.identity to match your VCL backends by using the -i parameter when starting varnishd.

Also, we are using the hash director to do our shard routing. The hash director has known rehashing limitations when you resize your cluster or when an entire node is marked as down, which is another kind of resizing. Varnish Cache 5.0 added a new and improved director, the shard director, courtesy of our friends at UPLEX. The shard director addresses these limitations and basically gives you textbook consistent hashing.
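
As a rough sketch of what the switch could look like, assuming Varnish Cache 5.0 or later and the same backend definitions as above, the hash director can be swapped for the shard director as follows. The shard director's arguments have changed between releases, so check the vmod_directors documentation for your version before relying on this.

```vcl
sub vcl_init {
    new cluster = directors.shard();
    cluster.add_backend(node1);
    cluster.add_backend(node2);
    cluster.add_backend(node3);
    # Backend changes only take effect after reconfigure().
    cluster.reconfigure();
}

sub vcl_recv {
    # by=URL hashes on req.url, the same shard key used above.
    set req.backend_hint = cluster.backend(by=URL);
    set req.http.X-shard = req.backend_hint;
    if (req.http.X-shard == server.identity) {
        set req.backend_hint = content;
    } else {
        return (pass);
    }
}
```

The rest of the routing logic is unchanged. Only the director type and the lookup call differ.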

Finally, we kept the architecture simple for example purposes. Probes and health checks have been omitted and backends are all single units and not highly available nested directors. More on this later.
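
For reference, a health check on a cluster node might look something like the sketch below. The probe name, the /healthcheck URL, and the timings are all placeholders chosen for illustration, not values from the original setup.

```vcl
# Hypothetical probe; the URL and timings are placeholders.
probe node_probe {
    .url = "/healthcheck";
    .interval = 5s;
    .timeout = 1s;
    .window = 5;
    .threshold = 3;
}

backend node1 {
    .host = "node1.example.com";
    .port = "80";
    .probe = node_probe;
}

# Answer the probe locally so it is not routed to another shard.
# Subroutines with the same name are concatenated in order, so this
# must appear before the sharding vcl_recv.
sub vcl_recv {
    if (req.url == "/healthcheck") {
        return (synth(200, "OK"));
    }
}
```

With a probe attached, the director can skip a node that is marked sick, which is exactly the resizing case discussed above.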

Redirect routing configuration

There is another method of self-routing that accomplishes the same thing without the need to pass (or proxy) the request to the destination shard. This method uses standard HTTP redirects, which instruct the client to make a new connection directly to the appropriate destination shard.

Using the above VCL, we just need to alter vcl_recv to this:

    sub vcl_recv
    {
        set req.backend_hint = cluster.backend(req.url);
        set req.http.X-shard = req.backend_hint;
        # Serve locally only if this node owns the shard and the client
        # addressed it by its own hostname.
        if (req.http.X-shard == server.identity &&
            req.http.X-shard + ".example.com" == req.http.Host) {
            set req.backend_hint = content;
        } else {
            # Otherwise, redirect the client to the owning node.
            set req.http.X-redir = "https://" + req.http.X-shard +
                ".example.com" + req.url;
            return(synth(302, "Found"));
        }
    }

    sub vcl_synth
    {
        if (resp.status == 302) {
            set resp.http.Location = req.http.X-redir;
            return (deliver);
        }
    }

Instead of doing a pass to the destination shard, we do an HTTP 302 redirect (the else branch of vcl_recv, together with vcl_synth). This means the client will create a new dedicated request directly to our intended destination. Using redirection means we do not have the overhead of passing the request through the originating node. The cluster does less work, but the client makes an extra round trip. This is usually a worthwhile trade-off if you plan on transferring large amounts of content.

The full VCL can be found here.
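
The two methods can also be combined. The sketch below is only an illustration: it assumes that large objects live under a hypothetical /video/ path, redirects those, and passes everything else. Neither the path nor the split comes from the original configuration.

```vcl
sub vcl_recv {
    set req.backend_hint = cluster.backend(req.url);
    set req.http.X-shard = req.backend_hint;
    if (req.http.X-shard == server.identity) {
        # This node owns the shard: normal caching logic.
        set req.backend_hint = content;
    } else if (req.url ~ "^/video/") {
        # Hypothetical large-content path: send the client straight
        # to the owning node (handled by vcl_synth as shown above).
        set req.http.X-redir = "https://" + req.http.X-shard +
            ".example.com" + req.url;
        return (synth(302, "Found"));
    } else {
        # Small objects: proxy through this node and skip the
        # client's extra round trip.
        return (pass);
    }
}
```

This keeps the extra round trip for the requests where it pays off and avoids it for small objects.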

Varnish Plus

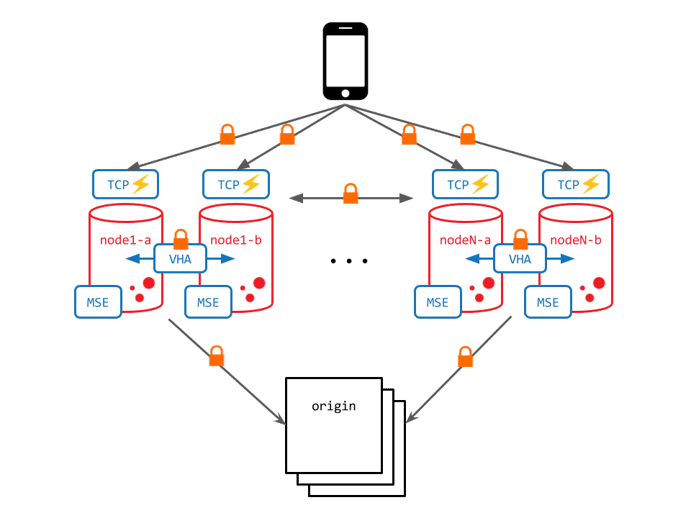

Varnish Plus can add tremendous value to this architecture. First, we can use VHA and add nodes to our shards, which would act as additional traffic capacity without any extra overhead. This means we can now choose from a pool of servers for any single request. This not only maximizes performance, but also gives us greater fault tolerance when we have unexpected downtime, including IP failover. Varnish Cache Plus also ships with DNS backend support, simplifying configuration, and VMODs for managing millions of custom shard routes.

We can also leverage Massive Storage Engine and scale the storage on each node to tens of terabytes with little to no performance impact. It will also keep its cache intact across restarts and reboots. We can follow best security practices and enable end-to-end TLS on all traffic. Finally, since clients are connecting directly to this server, we can leverage TCP Acceleration and optimize connections for ultra-low latencies.

Figure 2: Self-routing cluster with VHA, TLS, MSE, and TCP Acceleration

If you are looking for enhanced security, greater architectural control, or to scale up your content delivery while controlling costs, then Varnish Extend is the complete enterprise-grade DIY private or hybrid CDN solution.

In conclusion

This post highlights just a few ways we can scale content caching while keeping our architecture simple and optimizing for availability and performance. The key here is VCL. Because we can express our architecture using simple logic and rules, architectures that were once considered out of reach can now become standard, and these now-standard architectures can be easily modified to match your exact requirements!


Photo (c) 2006 Andrew Hart used under Creative Commons license.