The cache hit rate is the percentage of requests that are served from the cache. In Varnish it is calculated as `cache_hit / (cache_hit + cache_miss)`. The higher the hit rate, the more effective your cache is. For example, a hit rate above 80% means that most requests are served by an object already present in the cache, without any backend request. This shortens response times and lowers the risk that your backend will be overwhelmed.
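As a quick worked example of the formula, take hypothetical counter values of 8,000 hits and 2,000 misses:

$$
\text{hit rate} = \frac{\text{cache\_hit}}{\text{cache\_hit} + \text{cache\_miss}} = \frac{8000}{8000 + 2000} = 0.8 = 80\%
$$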

Varnish provides several tools that you can use to monitor the cache hit rate and to understand how Varnish decides whether and how to cache a page.

Varnishstat:

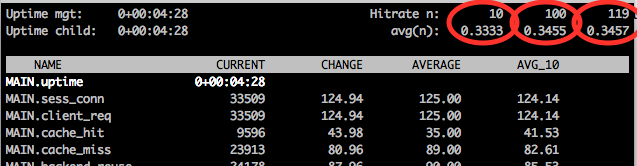

While varnishstat is running, your cache hit rate is shown in the upper right corner (circled in red in the figure).

To be precise, varnishstat shows three hit rate averages, computed over windows of 10, 100 and 1000 seconds.

Starting from the first red circle on the left:

- Hitrate n: 10 and avg(n) 0.3333 means that over the last 10 seconds the average hit rate was 33.33%.

- Hitrate n: 100 and avg(n) 0.3455 means that over the last 100 seconds the average hit rate was 34.55%.

- Hitrate n: 119 and avg(n) 0.3457 means that over the last 119 seconds the average hit rate was 34.57%. This window keeps growing while varnishstat runs, up to 1000 seconds.

Remember that “pass” requests are counted neither as hits nor as misses in the hit rate calculation, so your hit rate can be 100% while you still see backend requests.
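To make this concrete, suppose (hypothetical numbers) that during some interval Varnish records 100 hits, 0 misses and 50 passes. Passes do not appear in the formula, so the reported hit rate is

$$
\text{hit rate} = \frac{100}{100 + 0} = 100\%
$$

even though all 50 passed requests were sent to the backend.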

Varnishtop:

This tool presents a continuously updated list of the most frequently occurring log entries. `varnishtop -i BereqURL` shows the URLs most frequently requested from the backend.

Varnishlog:

Once you know which URLs hit the backend frequently, you can use varnishlog to get a complete view of the request you want to analyze. `varnishlog -q 'BereqURL ~ "/foo"'` shows the transactions whose backend request URL matches the specified regular expression.

Combining these three tools helps you understand why Varnish caches some pages and not others, and whether your cache policy behaves the way you expect and want it to.

Keep the following points in mind when writing your VCL and tuning Varnish:

- Memory: if the rate of nuked objects increases (n_lru_nuked in varnishstat), Varnish has to evict objects from the cache to make room for new ones. This happens when your data set does not fit in the configured storage size. Every evicted object that is requested again causes a miss, which lowers the hit rate. Consider increasing the storage available to Varnish (set with the `-s` option of varnishd) so that more objects fit in the cache and the hit rate improves.

- Cookie: for security reasons, Varnish by default does not cache objects when a Cookie or Set-Cookie header is present, because such content may be user-specific. Specifically, if a client sends a Cookie header, the request is “passed” to the backend. Many web applications do not need cookies on every URL (static assets are a typical case); where they are not needed, the cookie headers can be unset and the pages cached like any other object. This means fewer backend requests and more cache hits.

- Normalize: when a backend sends a Vary header, it tells Varnish to cache different versions of the same object, depending on the values of the request headers listed in Vary.

For example, “Vary: Accept-Language” means that Varnish must cache a separate variant of the object for each distinct value of the client's Accept-Language header. Varnish will even create two different objects for “en-uk,en-us” and “en-uk, en-us”, only because of the whitespace in the second value.

Normalizing such headers whenever possible is crucial to achieving a high hit rate. Here is a VCL snippet that normalizes the “Accept-Language” header:

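```vcl
sub vcl_recv {
    # Collapse Accept-Language to a single supported language so that
    # equivalent client headers map to the same cached variant.
    # The checks run in order, so the first matching language wins.
    if (req.http.Accept-Language ~ "en") {
        set req.http.Accept-Language = "en";
    } elsif (req.http.Accept-Language ~ "de") {
        set req.http.Accept-Language = "de";
    } elsif (req.http.Accept-Language ~ "fr") {
        set req.http.Accept-Language = "fr";
    } else {
        # Unknown language: remove the Accept-Language header and
        # use the backend default.
        unset req.http.Accept-Language;
    }
}
```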

The same logic applies to any header listed in Vary.

- TTL and cache invalidation: set TTLs that match the nature of the objects you cache, and have a way to invalidate content. Long TTLs can speed up your website, but without a good cache invalidation policy you cannot remove objects from the cache when required (usually when the content changes). You then end up relying on shorter TTLs, which increases the miss rate.
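The cookie and TTL/invalidation advice above can be combined in a short VCL sketch. It assumes Varnish 4.x or later, and several details are illustrative assumptions rather than recommendations: the backend address, the `purgers` ACL name, the list of static-asset extensions (assumed never to need cookies) and the 7-day TTL. Never strip cookies from URLs that depend on them, such as logged-in pages.

```vcl
vcl 4.0;

# Placeholder backend address: replace with your own.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# Hypothetical ACL: only these clients may send PURGE requests.
acl purgers {
    "127.0.0.1";
}

sub vcl_recv {
    # Cache invalidation: PURGE removes the cached object for this URL
    # and host, including all of its Vary variants.
    if (req.method == "PURGE") {
        if (!client.ip ~ purgers) {
            return (synth(405, "Not allowed"));
        }
        return (purge);
    }

    # Cookies: assume static assets never need them, so drop the Cookie
    # header and let the built-in VCL look these URLs up in the cache.
    if (req.url ~ "\.(css|js|png|jpg|jpeg|gif|svg|woff2?)(\?.*)?$") {
        unset req.http.Cookie;
    }
}

sub vcl_backend_response {
    if (bereq.url ~ "\.(css|js|png|jpg|jpeg|gif|svg|woff2?)(\?.*)?$") {
        # Drop Set-Cookie so the built-in VCL does not mark the
        # response as uncacheable.
        unset beresp.http.Set-Cookie;
        # Static assets change rarely and can be purged: long TTL
        # (illustrative value only).
        set beresp.ttl = 7d;
    }
    # Other responses keep the TTL that Varnish derived from the
    # backend's Cache-Control or Expires headers.
}
```

Apart from the PURGE branch, these subroutines do not return, so Varnish continues into its built-in VCL, which no longer sees a Cookie or Set-Cookie header on matching URLs and can cache them. A PURGE request for a URL, sent from an address in the ACL, invalidates that URL.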

Are you ready to learn more about monitoring your cache hit rate and other Varnish tools?

Photo (c) 2014 by Filipe Ramos used under Creative Commons license.