There are quite a few tunables in the Linux kernel. Reading the documentation, it is clear that quite a few of them could have an impact on how Varnish performs. One that caught my attention is the dirty_background_ratio tunable. It lets you set a limit on how much of the page cache may be dirty, i.e. contain data not yet written to disk, before the kernel starts writing it out in the background.

In addition to this watermark setting, there are also timers at work tracking the age of each dirty page. The default expiry is 30 seconds (dirty_expire_centisecs = 3000), so regardless of dirty_background_ratio, the kernel will start writing a page out once it has been dirty for 30 seconds.
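
To see what a given machine is actually running with, the relevant values can be read straight from `/proc/sys/vm`. The following is a minimal sketch, assuming a Linux system that exposes these files; the helper names `read_vm_tunables` and `centisecs_to_seconds` are made up for this example.

```python
from pathlib import Path

# Writeback-related sysctls found under /proc/sys/vm on Linux.
TUNABLES = (
    "dirty_background_ratio",
    "dirty_ratio",
    "dirty_expire_centisecs",
    "dirty_writeback_centisecs",
)


def read_vm_tunables(base="/proc/sys/vm"):
    """Return the current value of each writeback tunable as an int."""
    values = {}
    for name in TUNABLES:
        path = Path(base) / name
        if path.exists():  # skip anything this kernel does not expose
            values[name] = int(path.read_text().strip())
    return values


def centisecs_to_seconds(centisecs):
    """The *_centisecs tunables are expressed in hundredths of a second."""
    return centisecs / 100.0


if __name__ == "__main__":
    for name, value in read_vm_tunables().items():
        if name.endswith("_centisecs"):
            print(f"{name} = {value} ({centisecs_to_seconds(value):.0f} s)")
        else:
            print(f"{name} = {value}%")
```

The `base` parameter is only there so the function can be pointed at a scratch directory when experimenting without touching the live system.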

Since Varnish, when running with MSE or the file backend, relies on the kernel to decide when writeback happens, you can imagine this has an impact on performance. So I decided to put it to the test. I used the existing test suite we created for MSE and let it run through some iterations.

In addition to dirty_background_ratio there is another tunable: dirty_ratio. When the amount of dirty memory reaches this limit, the kernel starts blocking the application when it writes, effectively switching from asynchronous writeback to synchronous writes. I believe it is beneficial for Varnish to raise this from the default 20% to 90%.
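
To get a feel for what these percentages mean in bytes, here is a rough back-of-the-envelope sketch. It assumes the thresholds are a plain percentage of the memory the kernel considers dirtyable and ignores the kernel's finer adjustments; the function name `dirty_thresholds` and the 64 GiB figure are purely illustrative and not taken from the test system.

```python
GIB = 1024 ** 3


def dirty_thresholds(available_bytes, background_ratio, dirty_ratio):
    """Approximate the background and blocking thresholds in bytes.

    Simplified model: each threshold is a plain percentage of the
    dirtyable memory passed in as available_bytes.
    """
    for ratio in (background_ratio, dirty_ratio):
        if not 0 <= ratio <= 100:
            raise ValueError("ratios are percentages between 0 and 100")
    background = available_bytes * background_ratio // 100
    blocking = available_bytes * dirty_ratio // 100
    return background, blocking


if __name__ == "__main__":
    # Hypothetical machine with 64 GiB of dirtyable memory.
    for bg, hard in ((10, 20), (50, 90)):
        b, h = dirty_thresholds(64 * GIB, bg, hard)
        print(f"background {bg}% = {b / GIB:.1f} GiB, "
              f"dirty_ratio {hard}% = {h / GIB:.1f} GiB")
```

The gap between the two numbers is the headroom the kernel has for asynchronous writeback before writers start to block.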

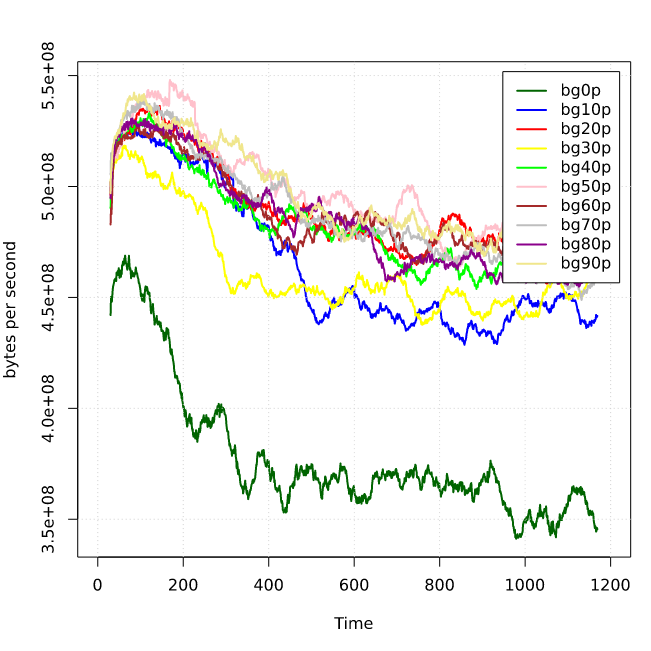

So I ran ten tests, with dirty_background_ratio varying from 0 to 90, and measured throughput.
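
A sweep like this can be scripted along the following lines. This is only a sketch of the general idea, not the harness used for these tests: `run_benchmark` is a hypothetical callable standing in for the MSE test suite, and the step size of 10 is an assumption inferred from ten runs spanning 0 to 90. Writing to `/proc/sys/vm` requires root.

```python
from pathlib import Path


def set_background_ratio(percent, base="/proc/sys/vm"):
    """Write a new dirty_background_ratio; needs root on a real system."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    (Path(base) / "dirty_background_ratio").write_text(f"{percent}\n")


def sweep(run_benchmark, ratios=range(0, 100, 10), base="/proc/sys/vm"):
    """Run the benchmark once per ratio and collect whatever it returns.

    run_benchmark is a hypothetical callable that takes the ratio and
    returns a result, for example a list of throughput samples.
    """
    results = {}
    for percent in ratios:
        set_background_ratio(percent, base)
        results[percent] = run_benchmark(percent)
    return results
```

Passing a different `base` directory makes it possible to dry-run the loop without changing the live kernel settings.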

bg0 is the graph where dirty_background_ratio is set to 0, so the kernel should start pushing data to disk immediately. Beforehand, I speculated that this would be more or less ideal, as the kernel wouldn't need to wait for enough data to be dirty before putting the disks to work. However, the increased IO is likely to hamper read performance, which is pretty important here. So there is obviously a big win in giving the kernel a bit more time to coalesce some IO.

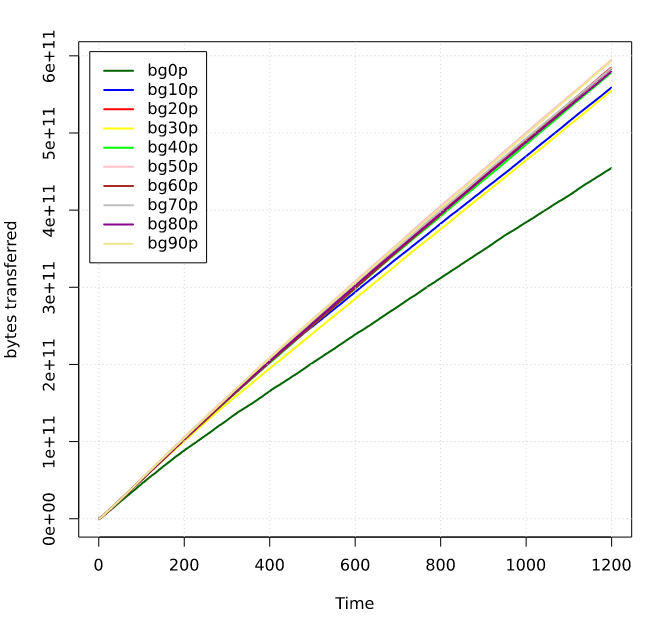

The rest of the graph is a bit hard to read. So, if we look at the integral of throughput, we get a much clearer picture of how throughput develops over time.

As you can see, the tan line shows the highest throughput; this is the run with dirty_background_ratio set to 50%. Explaining this is hard, but my guess is that this value balances the coalescing reasonably well against the workload we have here. When it goes above 50%, there isn't enough headroom available and the kernel quickly ends up being forced to write synchronously.
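
For reference, the integral of throughput is just a running total of the samples. Here is a minimal sketch, assuming throughput was sampled at a fixed interval; the function name and the one-second default are illustrative and not taken from the test suite.

```python
def cumulative_throughput(samples, interval_seconds=1.0):
    """Integrate throughput samples (units per second) into a running total.

    Uses the rectangle rule: each sample is assumed to hold for one
    full sampling interval.
    """
    total = 0.0
    totals = []
    for rate in samples:
        total += rate * interval_seconds
        totals.append(total)
    return totals
```

Plotted this way, a noisy throughput curve turns into a line that never decreases, and the run with the steepest line is the one with the highest average throughput.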

What can we learn from this?

Looking at the last graph, we see there are significant benefits from tuning the writeback ratio. The exact value will probably vary from installation to installation. I'm speculating that a workload with a higher cache hit ratio might benefit from having a higher writeback ratio than a site where Varnish writes more to disk.

What do you want me to test next?

I have a system that is ready to go. If you would like me to test a certain tunable, please let me know in the comments.