This year we’ve seen quite an interesting announcement from Intel. Their Haswell processors are now on sale. Usually we haven’t cared much about what Intel releases as new features in CPUs don’t always impact the performance on server software that much. Haswell, on the other hand, now has a feature, Transactional memory, that is pretty cool and might actually influence Varnish's performance quite a bit. Here is why.

As most multithreaded programs Varnish uses a fair bit of locks to synchronize access to it’s various internal organs. Good examples are the global counters. Varnish has quite a few of them. However, updating the counters is a somewhat fragile operation. If there are two different threads that write to the same counter, one of the updates might be lost, something we see from time to time.

To mitigate this we can use locks around the statements that update the counters. However, these locks are expensive and we’ve been hesitant to place locks around all the counters just to make sure they are accurate. Performance over accurate statistics, right?

The costs of locks

Placing a lock has at least three significant costs:

-

It creates a “memory barrier”. CPU are usually free to shuffle around the stream of instructions that flow through them and it is done all the time as the CPU constantly optimizes the instruction stream to keep all its resources busy. A lock creates a barrier and the CPU is not allowed to move stuff past this barrier. Branch prediction across memory barriers is also something I don’t think is doable.

-

Cache coherency. The address line that stores the lock gets invalidated and the content in that cache gets thrown out. This will surely result in at least one cache miss and the CPU has to get data from memory, which is a drag.

-

Waiting. There is lots of waiting involved. A lot of the time you need to acquire a lock to read a memory structure to make sure that no one changes it while we’re reading it. Or the thread doesn’t need to update the structure behind the lock and it has kept all the other threads waiting for the lock in vain.

Locks with Haswell

So, how does Haswell change this? Instead of using a mandatory lock it will use what we can call speculative lock elision or optimistic locking. The short version is “just go ahead without a lock and fix it if it breaks”. This actually isn’t that far from what is actually going on. This is how a Haswell CPU will handle a conflicting update of a counter with a lock:

-

Thread A takes the lock. Haswell will not erect barriers however so the CPU runs at full steam.

-

Thread B takes the lock. We now have a “soft” lock conflict. The CPU knows this and is recording changes the thread does to memory.

-

Thread A makes an update and releases the lock.

Now stuff gets interesting. We have two scenarios going forward:

1. Thread B releases the lock without any changes.

Excellent. Nothing happens. In retrospect we now know the lock was never needed. However, what if thread B updates the counter? This gets tricky.

2. Thread B makes an update.

Now the TSM kicks into gear. It undoes whatever memory alternations thread B has done since it got the lock and sets the instruction pointer back to where thread B was about to take the lock. SInce the lock is no longer contended thread B. That is some pretty brilliant silicon.

Since the lock is not contended anymore it should be straightforward once the thread has been granted the lock. It will run through the same code again. There are certain limits on what the CPU can do while it is tracking these threads. It obviously can’t interact with hardware and there are limits on how much memory it can touch before it runs out off this magic buffer space. If it runs out of buffer tried to meddle with the hardware a rollback is issued.

So, this is pretty awesome. If it works as announced we can more or less add locks all over the place without having to worry about performance most of the time. Certain locks that protect lots of updates might still have an impact, but a lot of the locks will not impact performance significantly.

Varnish Cache + Lock Elision

I think Varnish Cache is more or less an ideal use case for Lock Elision. This is due to a couple of factors.

- Varnish Cache is heavily multithreaded and can operate on tens of thousands of transactions per second.

- Varnish Cache mostly operates on memory. Databases, on the other hand, do a fair bit of disk IO, and the performance costs of a memory lock when dealing with significant amounts of disk IO is more or less negligible since disks are slow as molasses compared to memory.

If anyone from Intel reads this and would like us to do a followup with some performance numbers we'd be happy to oblige. We would obviously need access to a Haswell system to do so.

If you want to learn more about Transactional Memory there are quite a few good sources available:

- Ars has a good introduction to Transactional Memory

- Transactional Memory 101, an excellent blog that goes deep into TSM. Especially the post aboutLock Elision.

- Linux Weekly News - Lock elision in the GNU C library

- Add Lock Elision to the Linux Kernel (PDF), Intel presentation from the Linux Plumbers Conference

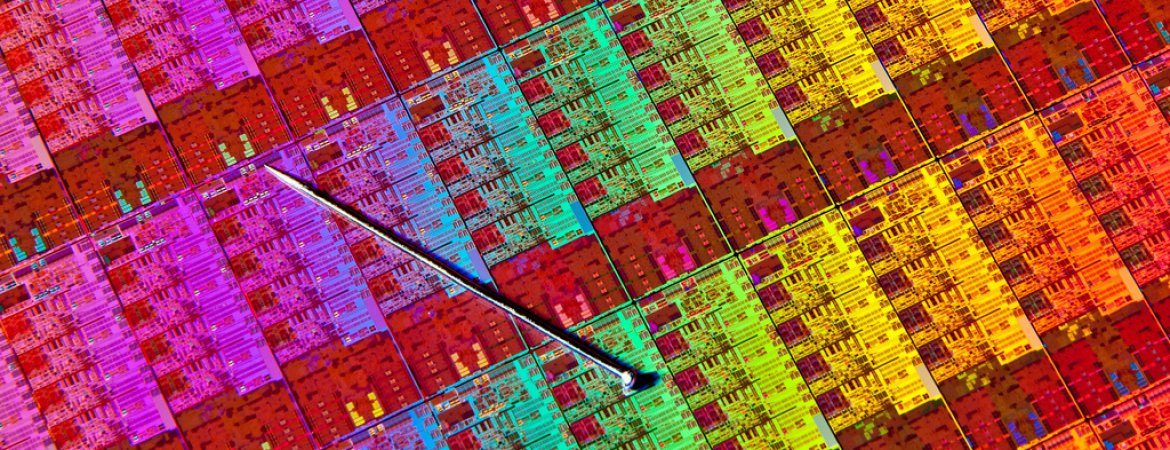

Image is (c) Intel 2012 and used under CC license.