Varnish is first and foremost known as an HTTP web accelerator, or an HTTP cache. One question we often get is: “Why should I use Varnish for live video streaming when there are other alternatives out there? What’s the value of Varnish?”

The bottom line is: Varnish distributes and delivers your content faster and contributes to architectural efficiency. We’ve highlighted three key features that make this possible, demonstrate the value of Varnish solutions, and distinguish our approach from that of others in the market.

How does video get packaged for the web?

There are several standards for HTTP video streaming, with HLS and DASH being the most common. Audio, video and captions are divided into smaller segments for efficient delivery.

One or multiple playlist files are used to index the segments and to allow a compatible video player to turn the segments into fluent audio and video. A playlist can support multiple bitrates depending on the client bandwidth that the player detects.
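To make the multi-bitrate idea concrete, here is a minimal sketch of how a player might pick a variant from a playlist. The function name `pick_variant` and the bitrate values are hypothetical, and real players use more elaborate heuristics (safety margins, buffer levels), so treat this as an illustration only.

```python
from typing import Sequence


def pick_variant(variant_bitrates_bps: Sequence[int], measured_bandwidth_bps: int) -> int:
    """Return the highest variant bitrate that fits the measured bandwidth.

    Falls back to the lowest variant when none fits, so playback can still start.
    """
    affordable = [b for b in variant_bitrates_bps if b <= measured_bandwidth_bps]
    return max(affordable) if affordable else min(variant_bitrates_bps)


# Hypothetical bitrate ladder (bits per second), not taken from a real playlist.
ladder = [800_000, 2_500_000, 6_000_000]
print(pick_variant(ladder, 3_000_000))  # 2500000
print(pick_variant(ladder, 500_000))    # 800000
```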

HLS and DASH have different container formats and different playlist formats. This results in duplicate video files when you want to support both formats for your live stream.

CMAF, on the other hand, can expose both HLS and DASH playlists without video duplication. To achieve this, CMAF uses a container format that is supported by both HLS and DASH.

Example environment

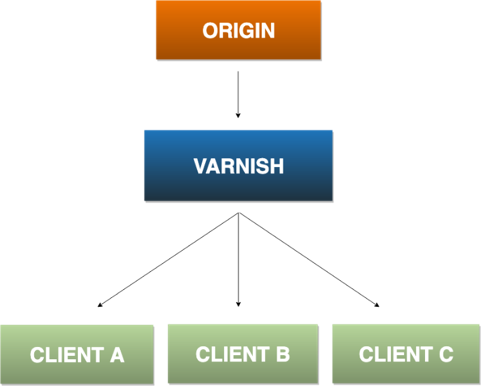

In order to illustrate the impact of the various features, we use a simple environment with an origin, one Varnish instance and three clients consuming one simulated live video stream.

The diagram above illustrates our example environment for video distribution, with "n" clients consuming a live video channel via Varnish. Varnish fetches the video segments from a video origin.

For the sake of simplicity, the origin in our example environment is a web service that delivers responses using chunked transfer encoding to simulate a CMAF stream. Each response contains 10 chunks, and the chunks are delivered to the client at 200 ms intervals, so a full response takes approximately two seconds (10 chunks × 200 ms = 2000 ms).
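The behaviour of such a simulated origin can be sketched as a generator that emits one chunk per interval. This is not the actual web service used in our environment. The names `simulated_segment` and `expected_duration_ms` are made up for illustration, and we assume the pause happens before each chunk, including the first.

```python
import time
from typing import Callable, Iterator


def simulated_segment(
    chunks: int = 10,
    interval_s: float = 0.2,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[bytes]:
    """Yield `chunks` pieces of a fake segment, pausing `interval_s` before each."""
    for i in range(chunks):
        sleep(interval_s)  # assumption: the pause precedes every chunk
        yield f"chunk-{i}\n".encode()


def expected_duration_ms(chunks: int = 10, interval_ms: int = 200) -> int:
    """Total delivery time of one response, ignoring network and processing overhead."""
    return chunks * interval_ms


print(expected_duration_ms())  # 2000
```

Passing a no-op `sleep` (for example `lambda _: None`) lets you iterate over the chunks instantly when you only care about the payload.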

In the real world, this corresponds to a video origin taking two seconds to deliver a video segment that has a total duration of two seconds, delivered to the client as 10 chunks. This lets the origin start serving the segment before it is completely finished, which enables the client to start playing the segment earlier.

The following screen recording shows how our web service responds to two client requests:

Feature number 1: Streaming delivery

The term ‘streaming’ implies that the client receives and processes data as it comes in, without waiting for the full payload to be transferred.

In order for Varnish to accelerate streaming delivery for video, it too needs to transfer the response to the client as soon as the first bytes are received. This reduces latency significantly and is a requirement for low-latency CMAF.

The following screen recording shows how delivery is affected by introducing Varnish between the client and the origin.

The screen recording is almost identical to the client → origin scenario, which shows that Varnish adds minimal latency to the delivery, and that each chunk is delivered to the client as soon as Varnish receives it from the origin. Varnish has supported streaming delivery by default since Varnish 4.0 was released in 2014.

If streaming delivery is disabled or not supported, the result looks like this:

The response from the origin is now fetched completely by the proxy server before it starts delivering its response to the client. This will cause the client to wait for two seconds before it starts receiving bytes, and then it receives all the bytes at once.

Note that the throughput over the two seconds is identical, and not affected by the ability to stream.
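The difference between the two modes is easiest to see as chunk arrival times. The sketch below is a simplified model, not a measurement. It assumes the first chunk leaves the origin after 200 ms, ignores any overhead added by the proxy, and uses the hypothetical function name `delivery_times_ms`.

```python
from typing import List


def delivery_times_ms(chunks: int = 10, interval_ms: int = 200, streaming: bool = True) -> List[int]:
    """Return the time (ms) at which each chunk reaches the client."""
    origin_times = [(i + 1) * interval_ms for i in range(chunks)]
    if streaming:
        # Each chunk is forwarded as soon as the proxy receives it.
        return origin_times
    # Without streaming, nothing is forwarded until the last chunk has arrived.
    return [origin_times[-1]] * chunks


streamed = delivery_times_ms(streaming=True)
buffered = delivery_times_ms(streaming=False)
print(streamed[0], streamed[-1])  # 200 2000
print(buffered[0], buffered[-1])  # 2000 2000
```

In this model the last byte arrives at the same moment in both cases, which is why throughput is unchanged. Only the time to first byte differs.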

Feature number 2: Request coalescing

Live video streaming follows a typical and recognizable traffic pattern. The majority of consumers will repeatedly, and at the same time, fetch the most recent video segment in the stream. The size of the hot data set is relatively small, particularly when compared to streaming video on-demand.

Request coalescing comes into play if two or more clients request the same video segment at the same time. Request coalescing will amalgamate similar client requests so that the origin will only see one request for each video segment. This is crucial to avoid overloading the origin server.

The screen recording shows two clients requesting the same video segment at almost the same time. The client #1 request is in-flight while client #2 requests the same video segment.

The response headers show that client #1’s request is a cache miss while client #2’s request is a cache hit. The origin sent only one response. Both clients receive the response immediately.

Note that the overall time to download the segment is considerably shorter for client #2, since the first few chunks of the video segment were already in cache at the time client #2 sent the request.
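The general idea of request coalescing can be sketched in a few lines of Python. This is not how Varnish implements it. The class `CoalescingFetcher` and the `slow_origin` function are hypothetical, and the sketch simplifies in one important way: waiting clients only get the body once the fetch has completed, whereas Varnish, as described above, also streams the chunks that are already in cache. Error handling is left out, so a failed origin fetch would surface as a `KeyError` for the waiting clients.

```python
import threading
import time
from typing import Callable, Dict, List


class CoalescingFetcher:
    """Collapse concurrent requests for the same key into a single origin fetch."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]) -> None:
        self._fetch = fetch_from_origin
        self._lock = threading.Lock()
        self._inflight: Dict[str, threading.Event] = {}
        self._cache: Dict[str, bytes] = {}

    def get(self, key: str) -> bytes:
        with self._lock:
            if key in self._cache:
                return self._cache[key]  # already cached: plain hit
            event = self._inflight.get(key)
            leader = event is None
            if leader:
                # First request for this key: it performs the origin fetch.
                event = threading.Event()
                self._inflight[key] = event
        if leader:
            try:
                body = self._fetch(key)
                with self._lock:
                    self._cache[key] = body
            finally:
                with self._lock:
                    del self._inflight[key]
                event.set()  # wake every request that was waiting
        else:
            event.wait()  # another request is already fetching this key
        with self._lock:
            return self._cache[key]


calls: List[str] = []


def slow_origin(key: str) -> bytes:
    """Stand-in origin that records each call and takes 50 ms to answer."""
    calls.append(key)
    time.sleep(0.05)
    return b"segment-bytes-for-" + key.encode()


fetcher = CoalescingFetcher(slow_origin)
results: List[bytes] = []
threads = [
    threading.Thread(target=lambda: results.append(fetcher.get("seg-1.m4s")))
    for _ in range(3)
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(len(calls), len(results))  # 1 3
```

Three clients ask for the same segment, but the stand-in origin is called only once.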

Feature number 3: Caching

Video distribution is about distributing content to a large number of clients, potentially in different geographical locations and streaming via different networks. This typically requires a lot of bandwidth, which is handled by scaling out Varnish horizontally and in multiple tiers to achieve the required total capacity to deliver reliable streams to all clients.

The screen recording below shows a simulation of a number of concurrent clients requesting the same video segment over and over again in a simulated test lasting for five seconds.

Over the five-second duration of the client simulation, the origin serves the video segment once to Varnish, and Varnish serves the video segment 324 times to the clients. This is a cache hit ratio of 99.7%.
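For readers who want to check the number, the hit ratio follows from the two counts above. The helper name `cache_hit_ratio` is ours, and the calculation assumes that each origin fetch corresponds to exactly one client response that was a miss.

```python
def cache_hit_ratio(client_responses: int, origin_fetches: int) -> float:
    """Fraction of client responses served without a new origin fetch."""
    hits = client_responses - origin_fetches
    return hits / client_responses


ratio = cache_hit_ratio(client_responses=324, origin_fetches=1)
print(f"{ratio:.1%}")  # 99.7%
```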

Varnish caching makes it possible to deliver content to more clients without increasing the load on the origin.

Summary

The features that are highlighted in this blog post (streaming delivery, request coalescing and caching) are all built into the core of Varnish, and are all enabled by default. They work together out of the box to enable highly efficient HTTP-based video distribution, with a combination of high throughput and low latency.

Throughput is a typical performance metric, and often the only one considered when comparing content distribution solutions, but it does not necessarily reflect the latency of the delivery.

When your video platform has to deal with thousands of simultaneous connections, a robust, reliable scalability plan will ensure your throughput metric is maintained.

Varnish works to ensure your throughput is guaranteed under heavy load, without having to invest in a massive infrastructure setup.