The Time to First Byte (TTFB) is the amount of time between sending a request to a server and receiving the first byte of data.

This metric is critical in the world of web acceleration and content delivery because a high TTFB often points to latency caused by heavy load on the server that produces the requested content.

Varnish’s main purpose is to keep the TTFB to a minimum by caching requested content and by positioning Varnish servers close to the target audience.

Time to First Byte makeup

The Time to First Byte is a lot more than the amount of time we wait for the server to respond. The timer starts as soon as the web client sends the request.

Because we operate in a web context, the HTTP protocol gets all the attention. But HTTP is merely what sits on top of the network stack at layer 7. The protocols below also play their part in the makeup of the TTFB: DNS, TCP, IP and TLS to name a few.

- The requested URL (https://www.varnish-software.com/ for example) contains a hostname. This hostname, www.varnish-software.com in our case, must be resolved to an IP address.

- The IP protocol handles routing to the resolved IP address.

- Once the server has been located, a TCP connection has to be set up on the right port. For web content that’s either port 80 for HTTP or port 443 for HTTPS.

- Once the connection is established, TLS handshaking has to take place if an HTTPS request is made.

- And finally, the HTTP payload can be sent to the server.

If any of the steps that precede the HTTP request are slow, your TTFB will increase.
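The phases listed above can be timed individually. The following Python sketch, assuming a plain HTTP/1.1 GET over a fresh connection, records how long DNS resolution, the TCP connect, the optional TLS handshake and the wait for the first response byte each take. The function name `measure_ttfb` is hypothetical, and browsers add their own behavior (connection reuse, HTTP/2 or HTTP/3, cached DNS answers), so the numbers will not match the inspector exactly.

```python
import socket
import ssl
import time


def measure_ttfb(host: str, port: int = 443, path: str = "/", use_tls: bool = True) -> dict:
    """Time each phase that precedes the first byte of an HTTP/1.1 response.

    Returns durations in milliseconds. Rough sketch: no redirects, no HTTP/2,
    no connection reuse, and the OS resolver may answer DNS from its cache.
    """
    timings = {}
    start = time.perf_counter()

    # DNS: resolve the hostname to an IP address.
    t0 = time.perf_counter()
    family, socktype, proto, _, addr = socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM)[0]
    timings["dns"] = (time.perf_counter() - t0) * 1000

    # TCP: handshake with the resolved address (IP takes care of routing).
    t0 = time.perf_counter()
    sock = socket.socket(family, socktype, proto)
    sock.settimeout(10)
    sock.connect(addr)
    timings["tcp"] = (time.perf_counter() - t0) * 1000

    try:
        # TLS: handshake only for HTTPS.
        if use_tls:
            t0 = time.perf_counter()
            context = ssl.create_default_context()
            sock = context.wrap_socket(sock, server_hostname=host)
            timings["tls"] = (time.perf_counter() - t0) * 1000

        # HTTP: send the request, then block until the first response byte arrives.
        t0 = time.perf_counter()
        request = f"GET {path} HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
        sock.sendall(request.encode("ascii"))
        if not sock.recv(1):
            raise ConnectionError("connection closed before any response byte")
        timings["wait"] = (time.perf_counter() - t0) * 1000
        timings["ttfb"] = (time.perf_counter() - start) * 1000
    finally:
        sock.close()
    return timings
```

Calling `measure_ttfb("www.varnish-software.com")` returns a dictionary with one entry per phase plus the total in `ttfb`, which makes it easy to see whether DNS, the handshakes or the server itself dominates.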


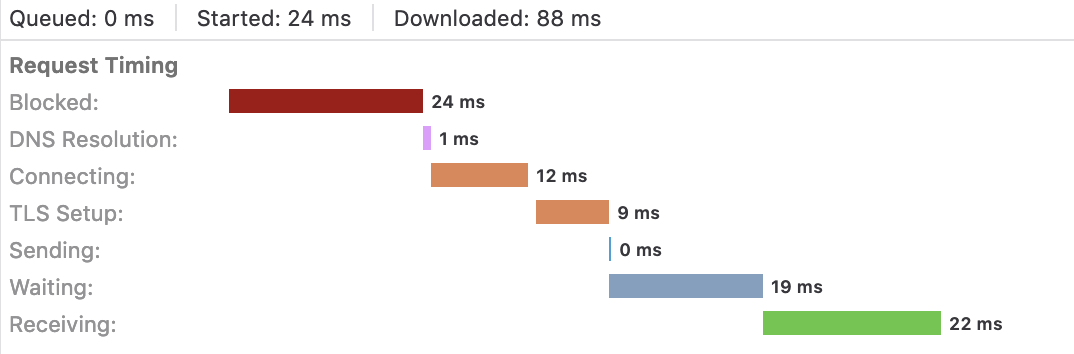

Your web browser has built-in developer tools that capture these metrics. By right-clicking on a web page and opening the inspector, you can track all requests and see their loading times. This screenshot shows the TTFB as well as the individual phases that make it up.

This example took 88 milliseconds to load. Here’s the breakdown:


Server-side processing as the main culprit for a high TTFB

In most cases, an overloaded web server or web application is the culprit for a high TTFB.

Web servers have their limitations. Based on the available server resources, concurrency thresholds are enforced through configuration parameters. These parameters control the number of available processes/threads or the number of open connections to the web server.

If this threshold is reached, new connections can be queued or dropped, which has a significant impact on the TTFB and the quality of experience.

This may sound surprising, but hitting the limits of your web server is not always related to a lack of server resources. Quite often the default settings are still in place, and those can be too conservative.
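The effect of a low concurrency limit can be sketched with a deliberately simplified model: a burst of requests arrives at once, a fixed pool of workers handles them in arrival order, every request needs the same processing time, and the first byte only leaves once processing is done. The function and parameter names are hypothetical and do not map to any particular web server's configuration directive.

```python
def queued_ttfb_ms(request_index: int, workers: int, service_ms: float) -> float:
    """Server-side time until the first byte for the request at position
    request_index (0-based) in a simultaneous burst.

    Assumptions: fixed worker pool, identical service time per request,
    first-come first-served, nothing dropped, network time ignored.
    """
    if workers < 1:
        raise ValueError("need at least one worker")
    # Number of full "rounds" of work that must finish before this request starts.
    rounds_before = request_index // workers
    return (rounds_before + 1) * service_ms


# Hypothetical burst of 100 requests, each needing 20 ms of processing.
for pool in (10, 50):
    print(f"{pool} workers: last request waits {queued_ttfb_ms(99, pool, 20.0):.0f} ms")
```

With 10 workers the last request in the burst waits about 200 ms, with 50 workers about 40 ms. Raising the limit only helps if the machine actually has the CPU and memory to run that many workers in parallel.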

When we take a closer look at server-side processing and the effects on the TTFB, the web server is merely the gateway between the world wide web and the application. The application itself usually has a much bigger impact on loading times.

Web applications can be slow regardless of the traffic patterns. But fast web applications can slow down when the load increases. This is where we transition from a performance problem to a scalability problem.
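One way to see how a fast application turns slow under load is the textbook M/M/1 queueing model: a single server with random arrivals and random service times, where the mean time a request spends in the system is 1 / (service rate − arrival rate). This is an assumed simplification for illustration, not a model of any specific web stack.

```python
def mm1_response_time(arrival_rate: float, service_rate: float) -> float:
    """Mean time in an M/M/1 system (queueing + service), in seconds.

    Rates are in requests per second. The formula only holds while
    arrival_rate < service_rate; otherwise the queue grows without bound.
    """
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: arrivals outpace service capacity")
    return 1.0 / (service_rate - arrival_rate)


# Hypothetical application that can serve 100 requests per second
# (10 ms per request when idle).
for load in (10, 50, 90, 99):
    print(f"{load:>3} req/s -> {mm1_response_time(load, 100) * 1000:.0f} ms")
```

The per-request work never changes, yet the mean response time goes from 20 ms at 50 req/s to 100 ms at 90 req/s and a full second at 99 req/s: a performance problem has become a scalability problem.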

Poorly architected applications usually suffer from the same symptoms:

- Saturated CPUs because of inefficient application logic

- Not enough consideration for efficient memory usage in the code

- Too much filesystem access

- Poorly optimized database designs

- Poorly optimized data access strategies

- Too many API calls to slow external services

This can cause the server itself to have a very high load, or it can cause web server timeouts to be exceeded. Either has a detrimental effect on the Time to First Byte.

Network latency as the next bottleneck


Overloading a server is quite common when operating at scale. This is not only mitigated by optimizations within the application code, but also by a horizontal scalability strategy that spreads the load across multiple web and application servers.

But time and again we are faced with an inevitability in IT: taking away a bottleneck may uncover the next bottleneck. And that next bottleneck is the network.

When you operate at a large scale with a lot of computing power, your infrastructure may be very powerful and stable, but your end users may not always perceive it that way.

Network latency and network saturation also affect the TTFB.

On the one hand, having powerful network appliances and good peering capabilities can ensure enough bandwidth. However, bandwidth means nothing if the latency is high.

High network latency is not solely caused by a lack of resources or poor configuration; it can also be caused by the physical distance between the end user and the web platform.

Light in fiber optic cables travels at roughly two-thirds of its speed in a vacuum, and that speed is a hard limit: when the client is located on the other side of the globe, geography becomes a source of network latency.
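The effect of distance can be estimated with simple arithmetic. Using roughly 200,000 km/s for light in fiber, every 1,000 km adds about 5 ms one way. A fresh HTTPS connection needs several round trips before the first byte arrives: one for the TCP handshake, one for a TLS 1.3 handshake (two for TLS 1.2) and one for the HTTP request itself, not counting DNS. The sketch below turns that into a lower bound; the function names are hypothetical and real routes are longer than the straight-line distance.

```python
# Approximate speed of light in optical fiber, in km per millisecond (~200,000 km/s).
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Propagation-only round-trip time for a straight-line fiber distance.

    Real paths add detours, queuing and processing delays, so actual RTTs are higher.
    """
    return 2 * distance_km / FIBER_KM_PER_MS


def min_ttfb_ms(distance_km: float, round_trips: int) -> float:
    """Floor for the TTFB given the number of round trips before the first byte."""
    return round_trips * min_rtt_ms(distance_km)


# Fresh HTTPS connection with TLS 1.3: TCP (1) + TLS (1) + HTTP request (1).
print(min_ttfb_ms(10_000, 3))
```

For a client 10,000 km away, the round trip alone costs at least 100 ms, so three round trips put the TTFB floor at 300 ms before the server has done any work. Adding bandwidth does not change this figure; shortening the distance does.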

Lowering TTFBs with Varnish


Varnish is an essential part of your toolkit when it comes to lowering the Time to First Byte.

The most important contributing factor is that Varnish efficiently caches HTTP responses that would otherwise consume a lot of server resources to compute.

Varnish is designed to be fast under the most demanding traffic patterns, and by putting Varnish in front of your web platform, the TTFB can be reduced to mere milliseconds.

For web and API output, the content length usually isn’t a big factor: storing the content in the cache and defining a good caching strategy using Varnish’s built-in VCL programming language is the priority. This happens to be the original use case of Varnish.
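The principle behind caching can be illustrated outside of Varnish with a few lines of Python: an in-memory store where every entry lives for a fixed time-to-live (TTL), so repeated requests are answered from memory instead of being recomputed by the origin. This is a hypothetical sketch (the class `TTLCache` and its methods are invented for illustration), not how Varnish works internally; in Varnish, an object's TTL is typically controlled in VCL through `beresp.ttl`.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Minimal in-memory cache where each entry expires ttl seconds after it is stored."""

    def __init__(self, ttl: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[str, Tuple[float, bytes]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_fetch(self, key: str, fetch: Callable[[], bytes]) -> bytes:
        entry = self._entries.get(key)
        if entry is not None and entry[0] > self.clock():
            self.hits += 1  # fresh copy: served without touching the origin
            return entry[1]
        self.misses += 1  # missing or expired: ask the (slow) origin
        body = fetch()
        # Start the TTL once the response is available.
        self._entries[key] = (self.clock() + self.ttl, body)
        return body
```

Only the first request for a key, and the first one after it expires, pays the origin's processing cost; every hit in between skips server-side work entirely, which is where the TTFB gain comes from.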

Varnish will not only offload the origin web server; it can also improve the way web pages are delivered. By compressing content with Gzip or Brotli, the number of bytes sent over the wire can be reduced.
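To get a feel for how many bytes compression can save, the sketch below compresses a response body with Gzip from the Python standard library. Brotli is not part of the standard library, so it is left out here. The HTML body is a made-up, highly repetitive example; real pages compress less predictably.

```python
import gzip
from typing import Tuple


def gzip_savings(body: bytes, level: int = 6) -> Tuple[int, int]:
    """Return (original_size, compressed_size) in bytes for a response body."""
    compressed = gzip.compress(body, compresslevel=level)
    return len(body), len(compressed)


# Hypothetical HTML list with many similar items, typical of markup-heavy pages.
html = b"<ul>" + b"".join(b"<li class='item'>Product</li>" for _ in range(500)) + b"</ul>"
original, compressed = gzip_savings(html)
print(f"{original} bytes -> {compressed} bytes")
```

Text formats such as HTML, CSS, JavaScript and JSON usually shrink considerably, while already compressed formats such as JPEG or WebP gain little from an extra Gzip pass.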

And by computing certain results “on the edge” using the Varnish Configuration Language and the rich ecosystem of Varnish modules, otherwise uncacheable data can be served directly from Varnish with sub-millisecond latency.

But Varnish can also play a big part in reducing the TTFB for content with a larger payload size. Images are a good example of this. Serving images from a web server doesn’t require a lot of computing power, but the time it takes to send large images over the wire will impact your TTFB.

By strategically positioning Varnish servers in key locations, network latency can be reduced, which reduces the TTFB.

Reducing the size of images in Varnish also means less data is transmitted over the network, which improves the TTFB as well. Varnish Enterprise’s Image module is capable of converting traditional image formats such as JPEG and PNG into WebP, a modern image format designed for efficiency.
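To put image sizes in perspective, this sketch estimates how long a payload occupies a link of a given bandwidth. The 500,000-byte and 300,000-byte sizes and the 10 Mbit/s link are made-up example values, not measured results of a WebP conversion, and the model ignores TCP slow start, packet loss and protocol overhead.

```python
def transfer_ms(size_bytes: int, bandwidth_mbps: float) -> float:
    """Milliseconds needed to push size_bytes through a bandwidth_mbps (Mbit/s) link.

    Idealized: full link speed from the first packet, no retransmissions.
    """
    bits = size_bytes * 8
    return bits * 1000 / (bandwidth_mbps * 1_000_000)


# Hypothetical sizes of one image before and after conversion.
for label, size in (("original", 500_000), ("converted", 300_000)):
    print(f"{label}: {transfer_ms(size, 10):.0f} ms at 10 Mbit/s")
```

At 10 Mbit/s the hypothetical 500,000-byte image needs 400 ms on the wire and the 300,000-byte version 240 ms. Every byte saved shortens the transfer, independently of how close the Varnish server is to the user.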

TTFB and the Core Web Vitals

Time to First Byte is one of the key metrics of web performance, but other factors and metrics also determine the total amount of time it takes to display a website. Several of these metrics are referred to as “Core Web Vitals”; look out for another blog on this topic very soon.