Quite a while back, I wrote the first part of this blog series, about the challenges of cache invalidation with the dispatcher in Adobe Experience Manager 6.0 and Adobe CQ 5.x on any Linux platform (Adobe supports Red Hat running Linux kernel 2.6 and 3.x). So the context should be clear. But how do you actually go about replacing the dispatcher?

Simply put: you can replace the dispatcher for caching, provided that the Varnish VCL configuration mimics the behavior of the dispatcher (which is basically an Apache module) and uses the same CQ-Handle headers to invalidate. On top of that, site-specific knowledge of the layout must be used to limit the invalidation trees more aggressively.

On top of that, Varnish Cache Plus adds a lot of value, as it includes several goodies:

- IPv4 and IPv6 support

- Gzip compression support

- ESI support, so you can personalize parts of your site

- ACL support, where you can define /allowedClients

- Varnish Custom Statistics (VCS), which provides fine-grained /statistics across your whole cluster

- World-class logging facilities, with output in JSON or NCSA format

- VCL: a domain-specific language that lets you define the logic of the delivery path for every single request

- Can mimic the logic of the dispatcher configuration files

- Can invalidate by folder level or by any defined tag

- Can define the dispatcher instance /name and /farms

- Can define and identify virtual hosts (/virtualhosts)

- Can specify the HTTP headers to pass through (/clientheaders)

- Can define /sessionmanagement, /renders and /filters

- Can act as the dispatcher’s /cache

- Can cache requests with query strings, or ignore the query strings

- Can “save” a request with capabilities such as health checks, retries, failover, re-routing and serving stale content

- Multiple caches, with content replicated automatically by Varnish High Availability

Do it using bans

Now to the nitty-gritty of how and why. We’ll first look at using bans to invalidate the content. As we will see later, purging is more efficient, since purged content is evicted from the cache at once, but for now we will stick to bans.
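To make the difference concrete, here is a toy model in Python. It is a sketch under simplifying assumptions, not how Varnish works internally, and the class and method names are hypothetical: a ban is only appended to a list and is tested lazily against each object's stored URL (the equivalent of obj.http.x-url) the next time that object is looked up, and only bans newer than the object apply; a purge removes the object at once. In real Varnish, the ban lurker can also evaluate bans that only reference obj.* in the background.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class CachedObject:
    x_url: str          # URL stored on the object, like obj.http.x-url
    body: str
    checked_up_to: int  # number of bans this object has already been tested against


class ToyCache:
    """Toy model of ban vs purge semantics (not Varnish internals)."""

    def __init__(self) -> None:
        self.objects: dict[str, CachedObject] = {}
        self.bans: list[re.Pattern[str]] = []

    def insert(self, url: str, body: str) -> None:
        # A new object is newer than every existing ban, so it skips them all.
        self.objects[url] = CachedObject(url, body, len(self.bans))

    def ban(self, url_regex: str) -> None:
        # A ban is only recorded here; nothing is evicted yet.
        self.bans.append(re.compile(url_regex))

    def purge(self, url: str) -> None:
        # A purge evicts the object at once.
        self.objects.pop(url, None)

    def lookup(self, url: str) -> str | None:
        obj = self.objects.get(url)
        if obj is None:
            return None  # miss
        # Bans are evaluated lazily against the stored x-url, and only the
        # bans added after this object was cached (or last checked) apply.
        for pattern in self.bans[obj.checked_up_to:]:
            if pattern.search(obj.x_url):
                del self.objects[url]
                return None  # banned: treated as a miss, so CQ must regenerate
        obj.checked_up_to = len(self.bans)
        return obj.body
```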

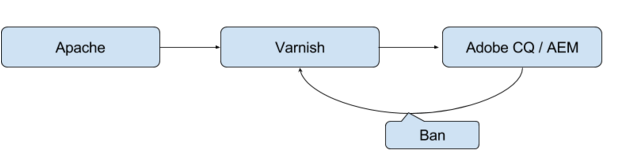

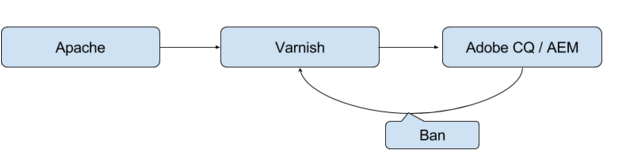

System description

The system described here consists of several nodes, each running an Apache, a Varnish and a CQ instance. All three share a single VM, because security policies forbid unencrypted traffic between servers.

If you cannot put all the software on the same VM as was done here, you can use Varnish Cache Plus with its built-in SSL/TLS capabilities to encrypt traffic both in front of the Varnish instances (normally from the client) and behind them (to the origin backend): our Hitch TLS Proxy handles SSL/TLS termination, and Varnish SSL/TLS-to-origin support handles the backend connections.

Apache receives the requests, performs SSL termination and applies some forward rewrite rules. There is also an SSO module in Apache, which adds a number of headers about the logged-in user to every request.

Varnish caches all but a select few paths. It strips cookies and the SSO headers on receive. Ban handling is implemented in the VCL as a RESTful service, matching on obj.http.x-url. The cache TTL is set high on all cached content (30 days), so invalidation has to be handled explicitly.

The CQ instance drives all invalidation, which is issued as bans on the URLs that have been updated. Regular expressions in the Varnish VCL match the whole tree of URLs below a specific updated URL (a ban statement that is not end-anchored). This makes sure that navigation on sub-pages also gets updated.
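A minimal sketch of how such a tree regex could be built from the updated URL, assuming the x-url stored on each object is the request path. The helper name is hypothetical, and the segment-boundary group at the end is an added assumption: without it, a ban on `/content/site/en/news` would also hit `/content/site/en/newsletter.html`.

```python
import re


def tree_ban_regex(updated_path: str) -> str:
    """Regex matching updated_path and every URL below it.

    Start-anchored but not end-anchored, so sub-pages match too. The final
    group (an assumption) requires '.', '/', '?' or end of string right
    after the path, so that sibling names sharing a prefix do not match.
    """
    return "^" + re.escape(updated_path) + r"(?:[./?]|$)"
```

The resulting pattern is what a VCL ban handler would compare against obj.http.x-url.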

CQ can cache pages on an Apache as a set of static files. When a path is updated, CQ calls back over HTTP to that Apache module to delete trees of files, using the custom CQ-Handle header. This is not always adequate, as the trees the module removes are too broad and there is no configuration flexibility to narrow them. The Varnish VCL configuration mimics the behavior of this Apache module, using the same CQ-Handle headers to invalidate, but site-specific knowledge of the layout is used to limit the invalidation trees more aggressively.
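The sketch below illustrates translating a dispatcher-style flush into narrower bans. It is hypothetical throughout: the `/content/site/en/news/` prefix stands in for whatever site-specific knowledge you have, the rule that an article update only touches the article and its listing page is just an example, and the CQ-Action header is simply ignored. Only the CQ-Handle header name comes from the dispatcher protocol.

```python
from __future__ import annotations

import re

# Hypothetical site-specific rule: pages under these prefixes are leaves
# (e.g. news articles), so an update only affects the page itself and the
# listing page one level up, never a whole subtree.
LEAF_SECTIONS = ("/content/site/en/news/",)


def exact_page_regex(path: str) -> str:
    # The page's own URLs only: /a/b, /a/b.html, /a/b.mobile.html, ...
    return "^" + re.escape(path) + r"(?:\.[^/]*)?$"


def subtree_regex(path: str) -> str:
    # The page plus everything below it (start-anchored, not end-anchored).
    return "^" + re.escape(path) + r"(?:[./?]|$)"


def bans_for_flush(headers: dict[str, str]) -> list[str]:
    """Map a dispatcher-style flush request to ban regexes on x-url."""
    # HTTP header names are case-insensitive, so normalise them first.
    normalised = {name.lower(): value for name, value in headers.items()}
    handle = normalised.get("cq-handle", "").strip().rstrip("/")
    if not handle:
        return []  # nothing to invalidate
    if handle.startswith(LEAF_SECTIONS):
        parent = handle.rsplit("/", 1)[0]
        return [exact_page_regex(handle), exact_page_regex(parent)]
    # Broad fallback, closer to the dispatcher: drop the whole subtree.
    return [subtree_regex(handle)]
```

An update to `/content/site/en/news/article-1` then bans only that article and `/content/site/en/news.html`, leaving the other articles cached.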

CQ is extremely slow, taking about 0.5 seconds to generate a page. It also takes broad locks during page generation, so concurrent page generation is even slower.

All static content produced by CQ is unique, with an integer-based hash added to its name. This makes it unnecessary to invalidate static resources.
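As an illustration, assuming a naming scheme such as `site.1479820735.js` (the pattern is hypothetical; check what your CQ instance actually emits), a ban handler could skip fingerprinted URLs entirely, since a changed file always gets a new name:

```python
from __future__ import annotations

import re

# Hypothetical naming scheme: an integer hash right before the extension,
# as in /etc/clientlibs/site.1479820735.js. Adapt it to the real CQ output.
FINGERPRINTED = re.compile(r"\.\d+\.[A-Za-z0-9]+$")


def needs_invalidation(url: str) -> bool:
    """False for fingerprinted static files, which never change under one name."""
    path = url.split("?", 1)[0]  # ignore any query string
    return FINGERPRINTED.search(path) is None


def urls_to_ban(updated_urls: list[str]) -> list[str]:
    # Only non-fingerprinted URLs ever need a ban.
    return [url for url in updated_urls if needs_invalidation(url)]
```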

System configuration

In this scenario, the machine Varnish runs on is a very locked-down, Red Hat-based installation. The administrators do not have root access to the system, and can only run a predetermined set of scripts to control the Varnish instance.

In this setup, the Varnish binaries reside on a network-mounted, read-only file system. They are hand-compiled, and unfortunately in a strange way: the --prefix configure option was not set correctly. As a result, Varnish cannot find its own paths, and every applicable runtime parameter has to be set explicitly for it to run. A standard Varnish installation does not have this problem and is much more straightforward.

The Varnish runtime files are also on a network-mounted file system (but with write permissions). The administrators have been asked to change this, or at the very least to be allowed to mount a tmpfs for the log file to live in.

Non-applicable system description

The nodes are also classified as publisher/presenter nodes. Publisher nodes are mostly uncached, and are used by content creators. The full setup consists of three publisher nodes, in a master/master replication setup.

The presenter nodes are only targets of the replication. Five presenter nodes are deployed, one in each geographical data center.

Invalidation is handled locally on each presenter node, so every invalidation request a Varnish instance receives comes from the CQ instance on the same node.
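Because every legitimate invalidation request originates on the same node, the ban endpoint can be locked down to loopback addresses, the equivalent of the dispatcher's /allowedClients. In VCL this would be an `acl`; the Python sketch below only illustrates the check, and the names and address ranges are assumptions.

```python
import ipaddress

# Hypothetical ACL: only the CQ instance on the same node may invalidate,
# so the loopback ranges are the only allowed clients.
INVALIDATION_ACL = [
    ipaddress.ip_network("127.0.0.0/8"),
    ipaddress.ip_network("::1/128"),
]


def may_invalidate(client_ip: str) -> bool:
    addr = ipaddress.ip_address(client_ip)
    # An IPv4 address is never "in" an IPv6 network (and vice versa),
    # so mixing both families in one list is safe.
    return any(addr in net for net in INVALIDATION_ACL)
```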

Further optimization

The setup described above relies solely on bans to invalidate content. Since CQ page generation is slow, deploying soft bans, so that stale content can be served in grace while updated pages are regenerated, is a natural next step to optimize the setup.
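The following toy model (not Varnish's implementation; names and numbers are hypothetical) illustrates what a soft invalidation buys you: instead of removing the object, it only expires its TTL, so requests within the grace period still get the old page immediately while a refresh is triggered. With about 0.5 seconds of page generation and broad locks in CQ, that keeps visitors from queueing up behind regenerations.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Entry:
    body: str
    expires: float  # end of TTL, on a caller-supplied clock (seconds)
    grace: float    # extra seconds a stale copy may still be served


class GraceCache:
    """Toy model of soft invalidation: expire the TTL, keep the grace."""

    def __init__(self) -> None:
        self.entries: dict[str, Entry] = {}

    def insert(self, url: str, body: str, now: float, ttl: float, grace: float) -> None:
        self.entries[url] = Entry(body, now + ttl, grace)

    def soft_invalidate(self, url: str, now: float) -> None:
        # Unlike a hard ban or purge, the object stays cached but becomes stale.
        entry = self.entries.get(url)
        if entry is not None:
            entry.expires = min(entry.expires, now)

    def lookup(self, url: str, now: float) -> tuple[str | None, bool]:
        """Return (body, needs_refresh)."""
        entry = self.entries.get(url)
        if entry is None:
            return None, True          # miss: fetch synchronously
        if now < entry.expires:
            return entry.body, False   # fresh hit
        if now < entry.expires + entry.grace:
            return entry.body, True    # stale hit: refresh in the background
        del self.entries[url]
        return None, True              # out of grace: fetch synchronously
```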

In this example we use bans, but we recommend making extensive use of surrogate keys instead: arbitrary keys attached to the AEM objects through a response header (or specified explicitly), which are indexed in a secondary hash and can then be used for invalidation just as we used bans. We have written before about how bans are powerful, but expensive.

If you are not using Varnish Cache Plus, you can make this specific change with the newly released Varnish Cache 4.1 and the Xkey VMOD, which adds surrogate keys (a secondary hash) to every object in the cache so that you can do “wildcard purging”.
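To show the idea behind surrogate keys, here is a toy secondary index in Python. It is loosely modelled on how the Xkey VMOD works (the backend lists keys in a response header, and one purge on a key evicts every object tagged with it), but it is not the VMOD's API, and key names such as `nav-en` are hypothetical tags an AEM component might emit.

```python
from __future__ import annotations

from collections import defaultdict


class SurrogateKeyIndex:
    """Toy secondary index from surrogate keys to cached URLs."""

    def __init__(self) -> None:
        self.objects: dict[str, str] = {}
        self.by_key: defaultdict[str, set[str]] = defaultdict(set)

    def store(self, url: str, body: str, key_header: str) -> None:
        # Keys are whitespace-separated in the header, e.g. "nav-en page-42".
        self.objects[url] = body
        for key in key_header.split():
            self.by_key[key].add(url)

    def purge_key(self, key: str) -> int:
        """Evict every object tagged with key immediately; return the count."""
        urls = self.by_key.pop(key, set())
        for url in urls:
            self.objects.pop(url, None)
        # Drop references to the evicted URLs from the remaining keys.
        for tagged in self.by_key.values():
            tagged -= urls
        return len(urls)
```

Compared with a regex ban, purging a key touches only the objects in its index entry, and they are evicted right away instead of lingering until a lookup or the ban lurker reaches them.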

That should get you going.

Learn more about using surrogate keys with the super fast purger. Contact us, write to me or request a Varnish Plus trial.

Image is (C) 2014 Stephen Donaghy used under Creative Commons license.