Looking at the different patterns, you chose the most popular one (it's even in the Varnish Book):

acl purge {

"192.168.0.0"/24;

"127.0.0.1";

}

sub vcl_recv {

if (req.method == "PURGE") {

if (client.ip ~ purge) {

return (purge);

} else {

return (synth(403));

}

}

}

It's simple and clean:

- define the ACL

- check that the request method is "PURGE"

- if so, check the client IP against the ACL and either purge or send an error

It's practical too: to kill an object, you send the same HTTP request you would use to fetch it and simply change the method from GET to PURGE. Plus, the ACL ensures that any purge coming from outside the local network (or the machine itself) is rejected. Nice!
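To make the "just change the method" point concrete, here is a minimal Python sketch that builds such a request without sending it. The host and port are borrowed from the HAProxy example later in this post, and the path is purely hypothetical.

```python
import urllib.request


def purge_request(url):
    """Build (but do not send) a PURGE request for the given URL."""
    # Same URL as the GET that fetched the object; only the method differs.
    return urllib.request.Request(url, method="PURGE")


# Hypothetical object URL, for illustration only.
req = purge_request("http://192.168.0.101:6081/some/page.html")
print(req.get_method(), req.full_url)

# To actually send it (needs a reachable Varnish, so left commented out):
# urllib.request.urlopen(req, timeout=5)
```

Any HTTP client that lets you set an arbitrary method will do the same job.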

Since you know that testing is important, you check that a purge from inside the network and one from localhost both work, and they do. Cool.

But...

wait...

actually, all the purges are accepted!

Autopsy of a classic mistake

If this has happened to you, you are not alone, and together we'll see what went wrong.

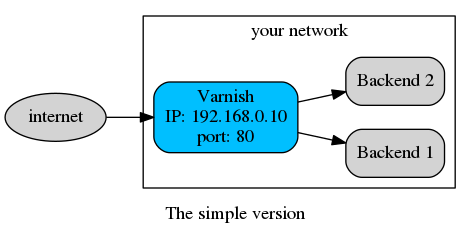

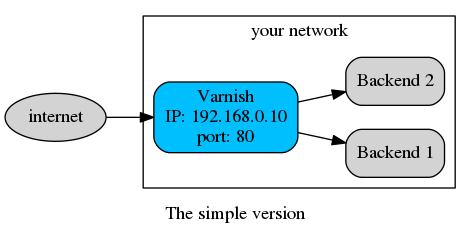

But first, let's look at your setup. Because, when people present Varnish, they often show a schema like this one:

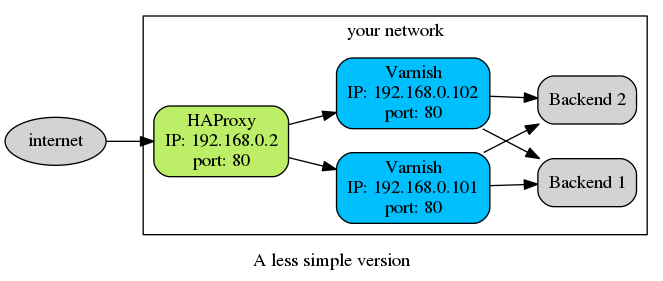

But that's only to explain the general idea. In real life, you may have opted for a level-7 loadbalancer to get consistent hashing (that's smart), so you are using HAProxy:

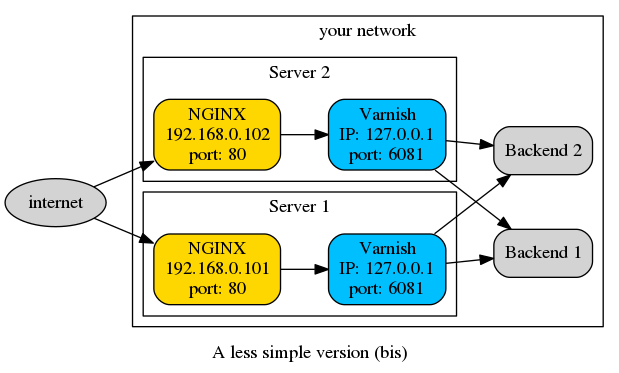

Or maybe you are using a level-4 loadbalancer to profit from DSR, but you decided to place NGINX in front of Varnish to terminate those HTTPS connections (don't worry, we've all done it).

What happened here? Very simply, in both cases, Varnish is not the HTTP front-end of your setup, and HAProxy/NGINX is masking the real client IP. Both open a new TCP connection to Varnish, so the requests Varnish sees come from them, not from the actual client. client.ip will be 192.168.0.2 in the first case and 127.0.0.1 in the second, and both of these IPs are valid according to the purge ACL.

Note that neither NGINX nor HAProxy is at fault here: they talk to Varnish over HTTP, so they are its clients, and Varnish recognizes them as such. In other words, everybody is doing what you asked for; it's just not what you wanted.
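If you want to convince yourself, the check can be mimicked outside of Varnish. The sketch below uses Python's ipaddress module as a stand-in for the ACL match (it is not how Varnish implements it), and the "real client" address is a made-up one from a documentation range.

```python
import ipaddress

# Same entries as the purge ACL in the VCL above.
PURGE_ACL = [
    ipaddress.ip_network("192.168.0.0/24"),
    ipaddress.ip_network("127.0.0.1/32"),
]


def acl_allows(ip):
    """Rough equivalent of `client.ip ~ purge`."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in PURGE_ACL)


real_client = "203.0.113.7"       # hypothetical internet client
seen_via_haproxy = "192.168.0.2"  # what Varnish sees behind HAProxy
seen_via_nginx = "127.0.0.1"      # what Varnish sees behind a local NGINX

print(acl_allows(real_client))       # False: would be rejected
print(acl_allows(seen_via_haproxy))  # True: accepted
print(acl_allows(seen_via_nginx))    # True: accepted
```

The real client would be rejected, but Varnish never gets to see its address, only the proxy's.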

Surely, there's a solution?

More than one, actually. Depending on your technology and requirements, all may not be adequate, but at least one should fit your use case.

Use the proxy protocol

A few years back, Willy Tarreau (HAProxy developer) created the proxy protocol, specifically to deal with this sort of issue. Essentially, the client will open a TCP connection and the first few bytes sent will describe:

- the protocol (PROXY)

- the IP version used (4 or 6)

- the client IP and port

- the server IP and port
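As an illustration, here is a small Python sketch of the human-readable version 1 of that preamble, a single text line sent before any HTTP data. It only handles the TCP4/TCP6 form, the helper names are mine, and the client address is made up. Note that send-proxy-v2, used further down, carries the same information in a binary format.

```python
def build_proxy_v1(src_ip, src_port, dst_ip, dst_port):
    """Build a PROXY protocol v1 line (TCP4/TCP6 form only)."""
    family = "TCP6" if ":" in src_ip else "TCP4"
    line = f"PROXY {family} {src_ip} {dst_ip} {src_port} {dst_port}\r\n"
    return line.encode("ascii")


def parse_proxy_v1(line):
    """Parse a v1 line back into its fields; raise ValueError if malformed."""
    parts = line.decode("ascii").rstrip("\r\n").split(" ")
    if len(parts) != 6 or parts[0] != "PROXY" or parts[1] not in ("TCP4", "TCP6"):
        raise ValueError("not a PROXY v1 TCP4/TCP6 line")
    _, family, src_ip, dst_ip, src_port, dst_port = parts
    return {
        "family": family,
        "src_ip": src_ip,
        "src_port": int(src_port),
        "dst_ip": dst_ip,
        "dst_port": int(dst_port),
    }


# Hypothetical client 203.0.113.7 reaching a Varnish at 192.168.0.101:6081.
header = build_proxy_v1("203.0.113.7", 51234, "192.168.0.101", 6081)
print(header)
print(parse_proxy_v1(header))
```

The source fields are what end up in client.ip on the Varnish side, instead of the proxy's own address.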

And the good news is: Varnish has supported it since 4.1! When listening for a proxied connection, Varnish fills client.ip (and server.ip) from the values sent in that prefix. Your VCL doesn't have to change, but you need to edit your Varnish command line and change:

-a :6081

into

-a :6081,PROXY

Plus, you need to configure the server in front of you to talk to you with the proxy protocol. For HAProxy, you just add "send-proxy-v2" at the end of your server description, like so:

backend varnish

server varnish1 192.168.0.101:6081 send-proxy-v2

server varnish2 192.168.0.102:6081 send-proxy-v2

With NGINX, just add proxy_protocol on;:

stream {

server {

listen 80;

proxy_pass 127.0.0.1:6081;

proxy_protocol on;

}

}

Easy peasy, lemon squeezy. Note that NGINX and HAProxy are not the only ones that speak the proxy protocol. For example, there is also Hitch, a great HTTPS terminator with a tiny footprint.

Use your head...ers

However, the proxy protocol is not ubiquitous and you may not have access to it. In this case, we can rely on good ol' HTTP and our trusty std vmod (included in the Varnish distribution). The idea is simply to ask the server on the front to fill a header telling us the client IP. For example, it is done in NGINX with:

proxy_set_header X-Real-IP $remote_addr;

We could obviously use the X-Forwarded-For header, but for this example, I prefer to deal with a single IP instead of a string of them. So the XFF header is left as an exercise to the reader (but that can be discussed in the comments if need be).

Now, all we have to do is to use this header in our VCL. Alas, the header value is a string, and you can only match an ACL against an IP. That's where the std vmod comes into play: using std.ip() we can convert a string into an IP, and set a fallback if the conversion fails. Our VCL would look like:

acl purge {

"192.168.0.0"/24;

"127.0.0.1";

}

import std;

sub vcl_recv {

if (req.method == "PURGE") {

if (std.ip(req.http.x-real-ip, "0.0.0.0") ~ purge) {

return (purge);

} else {

return (synth(403));

}

}

}

And here is a varnishtest case to check that it behaves as expected:

varnishtest "Testing std.ip()"

# create a server so that -vcl+backend can create a valid vcl

# with one server, we won't use it though.

server s1 {}

# declare a varnish server with our VCL

varnish v1 -vcl+backend {

acl purge {

"192.168.0.0"/24;

"127.0.0.1";

}

import std;

sub vcl_recv {

if (req.method == "PURGE") {

if (std.ip(req.http.x-real-ip, "0.0.0.0") ~ purge) {

return (purge);

} else {

return (synth(403));

}

}

}

} -start

# simulate a client with one valid then one invalid X-Real-IP header,

# and check the response codes

client c1 {

txreq -req PURGE -hdr "x-real-ip: 192.168.0.14"

rxresp

expect resp.status == 200

txreq -req PURGE -hdr "x-real-ip: 1.1.1.1"

rxresp

expect resp.status == 403

} -run

Please note two things:

- you have to make sure that the front-end server sets the X-Real-IP header itself, else it could get spoofed.

- if you purge without going through the proxy (from inside the LAN), you must add the X-Real-IP header to your purge requests.
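For the X-Forwarded-For variant left as an exercise above, the same spoofing concern decides which entry to trust. Here is a hedged Python sketch of the selection logic. It assumes exactly one trusted proxy sits in front of Varnish and that it appends the address it saw (as NGINX's $proxy_add_x_forwarded_for does). The helper name is hypothetical, and the fallback mirrors the "0.0.0.0" passed to std.ip() in the VCL.

```python
import ipaddress


def client_ip_from_xff(header_value, fallback="0.0.0.0"):
    """Return the right-most address of an X-Forwarded-For value."""
    # The right-most entry was appended by the proxy directly in front of us.
    # Everything to its left was supplied by the client and can be forged.
    last = (header_value or "").split(",")[-1].strip()
    try:
        return str(ipaddress.ip_address(last))
    except ValueError:
        # Same idea as the fallback argument of std.ip().
        return fallback


print(client_ip_from_xff("1.1.1.1, 192.168.0.14"))  # 192.168.0.14
print(client_ip_from_xff("192.168.0.14, 1.1.1.1"))  # 1.1.1.1 (forged left entry ignored)
print(client_ip_from_xff("not-an-ip"))              # 0.0.0.0
print(client_ip_from_xff(None))                     # 0.0.0.0
```

With more than one proxy in the chain, you would have to skip as many right-most entries as there are trusted hops, so adapt accordingly.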

Use another port

Lastly, there's the hardcore network admin's way: have Varnish listen on another port, firewall it well, and only allow purges on this port.

This is a good, sane solution as you move the "complexity" of managing the ACL down the network stack, and when you need to change said ACL, you can without touching the application layer.

Let's say you want to use port 6091 for purging, you need to add it to the command line:

-a :6091

and in the VCL, test the receiving port:

import std;

sub vcl_recv {

if (req.method == "PURGE") {

if (std.port(server.ip) == 6091) {

return (purge);

} else {

return (synth(403));

}

}

}

And voilà: no more ACL! The only other change to the VCL is the use of the std vmod to again convert the IP object, but this time into a port number.
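If the idea of "the receiving port" feels abstract, this little Python sketch shows the underlying mechanism with plain sockets: a server with two listening sockets can tell which one a connection arrived on by looking at the local side of the accepted connection. It uses two ephemeral loopback ports as stand-ins for 6081 and 6091, and all names are mine; it only illustrates the principle, not Varnish's internals.

```python
import socket


def listen_on_loopback():
    """Open a listening socket on an ephemeral loopback port."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.bind(("127.0.0.1", 0))
    srv.listen(1)
    srv.settimeout(5)
    return srv


def receiving_port(srv):
    """Connect to srv and return the local port of the accepted connection."""
    client = socket.create_connection(srv.getsockname(), timeout=5)
    conn, _peer = srv.accept()
    try:
        # Local address of the accepted socket: the port the request came in on.
        return conn.getsockname()[1]
    finally:
        conn.close()
        client.close()


public = listen_on_loopback()  # stand-in for the regular port (6081)
purge = listen_on_loopback()   # stand-in for the purge port (6091)
PURGE_PORT = purge.getsockname()[1]


def purge_allowed(srv):
    """Rough equivalent of `std.port(server.ip) == 6091`."""
    return receiving_port(srv) == PURGE_PORT


print(purge_allowed(public))  # False
print(purge_allowed(purge))   # True
```

The firewall then only has to decide who may reach the purge port at all.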

A few words before we part ways

This whole post has been focused on purging assets, but really, it's all about IP-based access control.

Purge was used as an example because it is something that everybody does, or should do, in one form or another, but you can do so much more, such as banning, updating vsthrottle limits, or even, with Varnish Plus, updating the ACLs themselves!

If you need another example, this is exactly the kind of mechanism Varnish High Availability uses to replicate your objects across a whole caching layer to give you enhanced reliability without pressuring the backends.

Obviously, we've only scratched the surface, but it should get you going pretty decently. And if you feel something was truly forgotten, tell us in the comments!

Photo (c) 2003 tableatny used under Creative Commons license.