As you might already know, Varnish Cache Plus is a Varnish Plus product built on top of the open source project Varnish Cache. Varnish Cache is usually a little ahead in versioning because it may include some experimental features.

The software we sell (Varnish Cache Plus) must, as a commercial product, be stable, reliable and perform as expected. Varnish Cache Plus therefore goes through a QA process and doesn't always have the same features as the open source version, but it has other components that are not available in regular Varnish Cache.

The latest Varnish Cache Plus version is the 4.1.x series. More precisely, the most up-to-date and recommended release is 4.1.4r5, which was released on December 13, 2016. It includes new features and the usual bug fixes (the word "usual" makes it look simple and boring, but someone has spent time and effort on them).

It’s pretty important, given the changes that happen version to version, that you stay up to date on the major Varnish releases.

Here are the top five recent changes that you can interpret as the top five reasons to migrate to the latest version of Varnish post-haste:

- MSE2 with persistence has been introduced:

If you are running a big data set, you probably already know about MSE (Massive Storage Engine), a storage engine specifically designed for big caches. Now, with MSE2, your cache can (optionally) survive Varnish restarts.

MSE2 was introduced in the very first Varnish Cache Plus 4.1 release, but improvements and bug fixes have landed since then, so you should run Varnish Cache Plus 4.1.1r5.

- Backend mode in varnishncsa:

The default mode of varnishncsa is "client mode". In this mode, the log will be similar to what a web server would produce in the absence of Varnish. Now, you can switch to "backend mode". In this mode, requests generated by Varnish to the backends will be logged.

It is possible to keep two varnishncsa instances running, one in backend mode, and one in client mode, logging to different files.

A HEAD request:

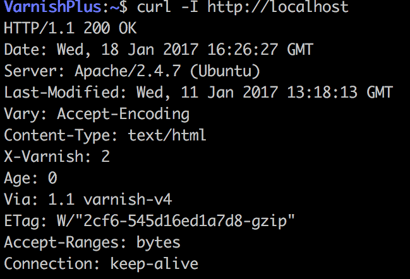

If we run varnishncsa with both client and backend mode with the default format we get something like:

    127.0.0.1 - - [18/Jan/2017:16:26:27 +0000] "HEAD http://localhost/ HTTP/1.1" 200 3256 "-" "curl/7.35.0"

    ::1 - - [18/Jan/2017:16:26:27 +0000] "HEAD http://localhost/ HTTP/1.1" 200 0 "-" "curl/7.35.0"

You can change the log output format if you want to highlight some different metrics. See "man varnishncsa" for more info.

- Load balancing is better than ever:

Varnish can distribute incoming traffic among different web servers, avoiding overwhelming a single backend.
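As a rough illustration of what distributing traffic looks like in VCL, here is a minimal sketch using the round-robin director from the standard directors VMOD. The backend names web1 and web2 and their addresses are hypothetical placeholders, not taken from any real setup.

```vcl
vcl 4.0;

import directors;

# Hypothetical backends, for illustration only.
backend web1 {
    .host = "192.0.2.10";
    .port = "8080";
}

backend web2 {
    .host = "192.0.2.11";
    .port = "8080";
}

sub vcl_init {
    # Create a round-robin director and register both backends with it.
    new rr = directors.round_robin();
    rr.add_backend(web1);
    rr.add_backend(web2);
}

sub vcl_recv {
    # Let the director pick the backend for each incoming request.
    set req.backend_hint = rr.backend();
}
```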

The interesting bit here is that you can choose in which fashion you’d like your Varnish to distribute the traffic over the defined backends:

* the round-robin director cycles through the backends in turn

* the fallback director tries each of the defined backends in order and uses the first one that is healthy

* the random director distributes load over the backends using a weighted random probability distribution

* the hash director chooses the web server by computing a hash of the string given to its .backend() function

* the least connection director: this is completely new in Varnish Cache Plus 4.1 and directs traffic to the backend with the fewest active connections.

- Libvmod-goto:

This VMOD deserves a special point in this list. Its purpose is to solve the problem of creating throwaway backends. Backends created this way are cached for a configurable time, after which they are discarded.

Upon creation, the given hostname is resolved into a list of IPs that will be updated (or destroyed if unused) after each Time-To-Live period.

Example:

    import goto;

    sub vcl_backend_fetch {
        # Send admin requests to a throwaway backend resolved from the hostname
        if (bereq.url ~ "^/admin/") {
            set bereq.backend = goto.backend("admin.example.com", "8080");
        }
    }

- Parallel ESI:

Prior to this new feature, Varnish processed ESI includes sequentially, one at a time.

Now, with parallel ESI, Varnish is able to process several ESI includes at the same time. For example, if you have 20 includes that need to be fetched, parallel ESI will fetch them all at once. Because the includes no longer wait on each other, this can lead to a significant performance gain.
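For context, ESI processing is switched on per response in VCL. The following is a minimal sketch in standard VCL, assuming a hypothetical site whose pages under /news/ contain several ESI include tags. The backend address and the URL pattern are made up for the example, and since the text above does not say whether parallel ESI needs any extra configuration, none is shown.

```vcl
vcl 4.0;

# Hypothetical backend, for illustration only.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_backend_response {
    # Ask Varnish to parse the response body for ESI tags such as
    # <esi:include src="/fragments/header"/>.
    # The URL pattern below is an assumption for this example.
    if (bereq.url ~ "^/news/") {
        set beresp.do_esi = true;
    }
}
```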

Those were only five of the many improvements and new features you can find in Varnish Cache Plus 4.1.4r5. If you are still running a previous Varnish version, you should consider migrating soon to the latest, most stable and feature-complete release.

If you wish to receive more info you can get in touch with our sales team: sales@varnish-software.com.

If you need to migrate and think you might need more assistance, you can also look into our professional migration assessment and assistance offer.