Did you know that Hitch is now available as a container image on Docker Hub? I'm asking because it's time to revisit a blog post of mine (4 years old, time flies!) and to streamline things a bit. Nowadays containers are ubiquitous, so we can skip the basics, and thanks to the Docker Hub images, we don't even need to write our own Dockerfile like we used to. Ain't that nice?

Our base will look a lot like the previous setup, but for this specific blog post, we are going to focus almost exclusively on how to integrate Hitch with Varnish. As you will see, it is very straightforward thanks to upstream work, but it will be an opportunity to explore a few interesting notions along the way.

We are once again going to use docker-compose to assemble our containers as it's a nice, self-contained tool, but the idea is directly applicable to larger setups like Kubernetes. We'll take it step-by-step, but if you just can't wait, the whole code is here.

Do one thing and do it in a container

While the Enterprise edition of Varnish offers built-in TLS termination, the open-source version doesn't, so the project can focus on HTTP. It makes sense in light of the "Do one thing and do it well" UNIX philosophy since in the OSI model HTTP is at layer 7 and TLS is at layer 6 (or is it 5?), but we still need TLS capability. Indeed, HTTPS and HTTP/2 are used pretty much everywhere and they need it, and we can't always rely on a load balancer to terminate TLS for us.

This is why we have Hitch! It's a small and feature-packed tool dedicated to handling encryption. Its job is very simple:

- listen for connections

- deal with the TLS handshake

- decrypt the incoming byte stream and pass it to Varnish (or whatever server you have behind it).

It supports OCSP stapling, TCP fast-open, mTLS, UDS and other fun stuff we won't need today, but more importantly it's lightweight and easy to configure. Like, very easy to configure, here's the complete config file we'll use:

# Listening address

frontend = "[*]:443";

# Upstream server address

backend = "[varnish]:8443"

# load all /etc/hitch/certs/*.pem files

pem-dir = "/etc/hitch/certs/"

pem-dir-glob = "*.pem"

# the protocols we care about

alpn-protos = "h2, http/1.1"

# number of processes to start, usually one per CPU

workers = 4

# run as the hitch:hitch unprivileged user

user = "hitch"

group = "hitch"

# in a container, we stay in foreground mode

daemon = off

# use PROXY protocol v2

write-proxy-v2 = on

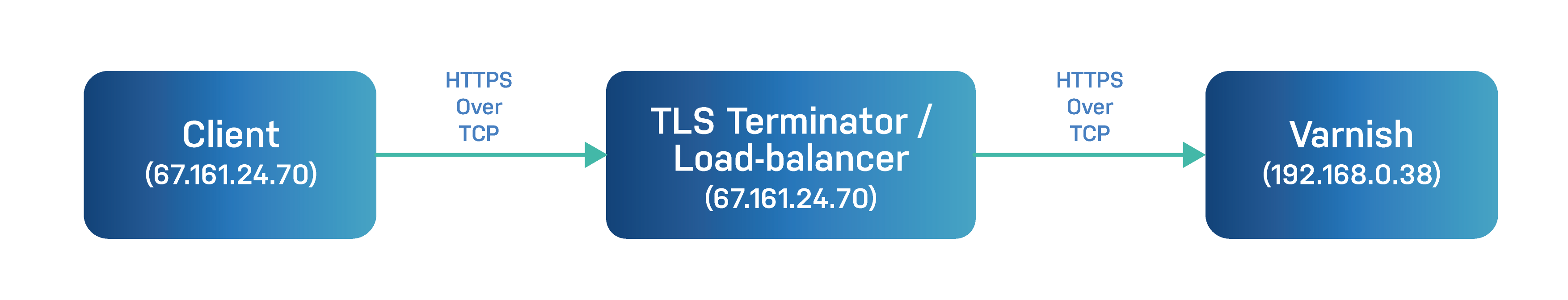

All options should be fairly easy to figure out, but I'd like to spend some time on one in particular: write-proxy-v2. But to explain this, let's explain the problem it solves first. The usual way to implement a TLS terminator looks like this:

The terminator accepts the client connection to receive HTTPS, opens a connection to Varnish and starts pushing the HTTP traffic. It's usually all good unless your Varnish configuration does something like this:

# match all internal IPs and localhost

acl internal_acl {

"192.168.0.0"/24;

"127.0.0.1";

}

sub vcl_recv {

# deny /admin/* access to external IPs

if (req.url ~ "^/admin/" && client.ip !~ internal_acl) {

return (synth(403, "restricted area, sorry"));

}

}

The intent is pretty clear, but it overlooks an important detail: client.ip is not the IP of the user but of the terminator since it's the one that opened the connection to Varnish. That means everything going through the terminator will appear internal and will be trusted. Not good!
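To make the trap concrete, here's a minimal Python sketch (not part of the repository) that mimics the ACL check with the standard ipaddress module. The two addresses are made up for illustration: one stands for the real user, the other for a terminator sitting on the internal network.

```python
import ipaddress

# Same ranges as internal_acl in the VCL above.
INTERNAL_ACL = [
    ipaddress.ip_network("192.168.0.0/24"),
    ipaddress.ip_network("127.0.0.1/32"),
]


def is_internal(ip: str) -> bool:
    """Return True if ip falls inside any network of INTERNAL_ACL."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in INTERNAL_ACL)


# Hypothetical addresses, chosen only for illustration.
real_client = "203.0.113.7"   # the user, somewhere on the internet
terminator = "192.168.0.10"   # the TLS terminator on the internal network

# Without extra information, Varnish only knows the peer of its own TCP
# connection, i.e. the terminator, so the external user passes the check.
print(is_internal(real_client))  # False
print(is_internal(terminator))   # True
```

The check itself is correct; it is simply being handed the wrong address.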

You could argue that if the terminator sets the (antiquated) X-Forwarded-For header or the (new) Forwarded header, Varnish could parse it and could then rely on it. But there are a couple of issues with that:

- it's pretty cumbersome for one, and there are possibly a couple of edge cases for users using plain HTTP as those don't go through the terminator, and won't get tagged.

- more importantly, in our case, Hitch doesn't know anything about HTTP (no HTTP parsing == more speed), so it can't set any HTTP headers.

Instead, we can use the PROXY protocol; it's basically just TCP with a connection metadata preamble. In practice: when Hitch opens the connection, it will tell Varnish who the original client was, and Varnish can transparently use that information, meaning the VCL above will work. By setting write-proxy-v2 to on, we enable the PROXY protocol and make Hitch "invisible" to Varnish.
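If you are curious about what that preamble looks like on the wire, here's a rough Python sketch of a PROXY v2 header for a TCP/IPv4 connection, based on the layout in the HAProxy specification. It is not how Hitch or Varnish implement it, the function names and addresses are made up for illustration, and it ignores IPv6, UNIX sockets and the optional TLV fields.

```python
import socket
import struct

# 12-byte signature that starts every PROXY protocol v2 header.
SIGNATURE = b"\r\n\r\n\x00\r\nQUIT\n"


def build_proxy_v2(src_ip, src_port, dst_ip, dst_port):
    """Build a PROXY v2 header describing a TCP-over-IPv4 connection."""
    addresses = (
        socket.inet_aton(src_ip)
        + socket.inet_aton(dst_ip)
        + struct.pack("!HH", src_port, dst_port)
    )
    # 0x21: version 2, PROXY command. 0x11: AF_INET, STREAM (TCP).
    return SIGNATURE + struct.pack("!BBH", 0x21, 0x11, len(addresses)) + addresses


def parse_proxy_v2(data):
    """Return (src_ip, src_port, dst_ip, dst_port, payload) from a byte stream."""
    if data[:12] != SIGNATURE:
        raise ValueError("not a PROXY v2 header")
    ver_cmd, fam_proto, length = struct.unpack("!BBH", data[12:16])
    if ver_cmd != 0x21 or fam_proto != 0x11:
        raise ValueError("this sketch only handles PROXY over TCP/IPv4")
    src, dst, src_port, dst_port = struct.unpack("!4s4sHH", data[16:28])
    # Everything after the announced length is the regular traffic.
    payload = data[16 + length:]
    return socket.inet_ntoa(src), src_port, socket.inet_ntoa(dst), dst_port, payload


# What the receiving side sees: the header first, then plain HTTP.
header = build_proxy_v2("203.0.113.7", 51234, "192.0.2.10", 443)
stream = header + b"GET /admin/ HTTP/1.1\r\nHost: example.com\r\n\r\n"
print(parse_proxy_v2(stream)[:2])  # ('203.0.113.7', 51234)
```

The point to notice is that the HTTP bytes are untouched: the client address travels in front of them, which is why Hitch never has to parse HTTP.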

Note: the PROXY protocol comes from the HAProxy project and is supported by quite a few projects and platforms, so if you want to mix it up, go for it!

Certificates are wild(card)s

So, aside from some protocol digressions, the Hitch config is dead simple, but what about certificates?

Here, you will have to provide your own since they are tied to your domains, but the repository provides an example with a self-signed file: certs/testcert.pem (needless but mandatory warning: do NOT use it in production). It will be picked up by those lines in conf/hitch.conf:

# load all /etc/hitch/certs/*.pem files

pem-dir = "/etc/hitch/certs/"

pem-dir-glob = "*.pem"

In other words, you can dump all your pem files in certs/ and they will be used by Hitch. As a reminder, a pem file is the concatenation, in this order, of:

- the certificates from your trust chain, if any (often a .bundle extension)

- your domain certificate (usually ends with .crt)

- your private key (.key most of the time)
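If you are unsure what a given pem file actually contains, a few lines of Python can list its blocks. This is only a sketch: pem_labels and has_cert_and_key are hypothetical helper names, and the check only looks at the BEGIN markers; it doesn't validate the certificate or the key.

```python
import re

# Matches the "-----BEGIN <LABEL>-----" line that opens each PEM block.
BEGIN_RE = re.compile(r"^-----BEGIN ([A-Z0-9 ]+)-----\s*$", re.MULTILINE)


def pem_labels(text):
    """Return the labels of the PEM blocks found in text, in file order."""
    return BEGIN_RE.findall(text)


def has_cert_and_key(text):
    """True if text holds at least one certificate and one private key."""
    labels = pem_labels(text)
    has_cert = "CERTIFICATE" in labels
    # Covers "PRIVATE KEY", "RSA PRIVATE KEY", "EC PRIVATE KEY", ...
    has_key = any(label.endswith("PRIVATE KEY") for label in labels)
    return has_cert and has_key
```

For example, pem_labels(open("certs/testcert.pem").read()) should show at least one CERTIFICATE entry and one private key entry for a file Hitch can use.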

The last question is: how does the stuff in certs/ on your host end up in /etc/hitch/certs/ in the container? Very simple! The container definition in docker-compose.yaml looks like this:

hitch:

  image: hitch

  volumes:

    - ${HITCH_CONF}:/etc/hitch/hitch.conf

    - ${CERT_DIR}:/etc/hitch/certs/

  ports:

    - "${HTTPS_PORT}:443"

And the .env file defines the default values of the variables (but you can override them from the command line):

HTTPS_PORT=443

HITCH_CONF=./conf/hitch.conf

CERT_DIR=./certs/

No need to rebuild an image with the new configuration, it's just mounted from the host, easy!

No need to tell me ~~twice~~ once!

The Varnish side is pretty boring:

varnish:

  image: varnish

  volumes:

    - workdir:/var/lib/varnish

    - ${VARNISH_VCL}:/etc/varnish/default.vcl

  ports:

    - "${HTTP_PORT}:80"

We notably mount the VCL file so the container can use it and publish a port, and while the workdir mount is intriguing (we'll see that in a later post), nothing betrays the Hitch integration. Could it be magic?

Well, it's advanced technology for sure, but not exactly magic. Notice that we haven't specified a command? Without it, docker-compose will just use the default one baked into the image, which amounts to:

varnishd \

-F \

-f /etc/varnish/default.vcl \

-a http=:80,HTTP \

-a proxy=:8443,PROXY \

-p feature=+http2 \

-s malloc,$VARNISH_SIZE

and that's how Varnish understands Hitch:

-a proxy=:8443,PROXY

Mystery solved! This explicitly says we should have a socket:

- named "proxy"

- listening on port 8443, mirroring the backend definition in hitch.conf

- using the PROXY protocol, which matches the write-proxy-v2 option in Hitch

But, does it work?

It was a bit of a rhetorical question, but let's try it together. Assuming you have already installed git, docker and docker-compose, we'll clone the repository and enter the directory:

git clone https://github.com/varnish/toolbox.git

cd toolbox/docker-compose

For good measure, let's create a file in data, then start this party:

echo "Hurray!" > data/my_file

docker-compose up

If all goes well, you'll see some (colored) lines with each container happily logging its startup steps:

Starting docker-compose_varnish_1 ... done

Starting docker-compose_hitch_1 ... done

Starting docker-compose_varnishncsa_1 ... done

Starting docker-compose_origin_1 ... done

Starting docker-compose_varnishlog_1 ... done

Attaching to docker-compose_varnish_1, docker-compose_varnishncsa_1, docker-compose_varnishlog_1, docker-compose_origin_1, docker-compose_hitch_1

hitch_1 | 20210429T002926.537354 [ 1] {core} hitch 1.7.0 starting

hitch_1 | 20210429T002926.555737 [ 1] {core} Loading certificate pem files (1)

hitch_1 | 20210429T002926.559197 [ 7] {core} Process 0 online

hitch_1 | 20210429T002926.559488 [ 1] {core} hitch 1.7.0 initialization complete

hitch_1 | 20210429T002926.559608 [ 10] {core} Process 3 online

hitch_1 | 20210429T002926.560119 [ 9] {core} Process 2 online

hitch_1 | 20210429T002926.560789 [ 8] {core} Process 1 online

varnish_1 | Warnings:

varnish_1 | VCL compiled.

varnish_1 |

varnish_1 | Debug: Version: varnish-6.6.0 revision ef54768fc10f5b19556c7cf9866efc88cfbda8ff

varnish_1 | Debug: Platform: Linux,5.11.16-arch1-1,x86_64,-junix,-smalloc,-sdefault,-hcritbit

varnish_1 | Debug: Child (23) Started

varnish_1 | Info: Child (23) said Child starts

From another terminal, you can then try to fetch the file we created, using both HTTP and HTTPS:

$ curl localhost/my_file

Hurray!

# we need the -k (insecure) for HTTPS as the certificates

# are self-signed

$ curl https://localhost/my_file -k

Hurray!

Hurray indeed!

And you should see the origin container print its access log in the first terminal too:

varnish_1 | Debug: Platform: Linux,5.11.16-arch1-1,x86_64,-junix,-smalloc,-sdefault,-hcritbit

varnish_1 | Debug: Child (23) Started

varnish_1 | Info: Child (23) said Child starts

origin_1 | 172.22.0.2 - - [29/Apr/2021 00:34:12] "GET /my_file HTTP/1.1" 200 -

And because Varnish is caching the response, there should only be one line, confirming that even though we fetched the file twice (or maybe more, if you are wild!), the origin only saw one request.
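If you'd rather check the caching from the client side, here's a hedged Python sketch. It assumes the VCL leaves Varnish's default X-Varnish response header untouched: on a cache hit it carries two transaction IDs, on a miss only one. fetch_headers and looks_like_hit are hypothetical helper names, and certificate verification is disabled only because the test certificate is self-signed.

```python
import ssl
import urllib.request


def fetch_headers(url):
    """GET url and return its response headers, skipping certificate checks
    (the equivalent of curl -k, only acceptable with the test certificate)."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False
    ctx.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(url, context=ctx) as resp:
        return resp.headers


def looks_like_hit(headers):
    """Heuristic: two transaction IDs in X-Varnish suggest a cache hit."""
    return len(headers.get("X-Varnish", "").split()) == 2


# With the containers running, something like this can be tried:
# for url in ("http://localhost/my_file", "https://localhost/my_file"):
#     print(url, looks_like_hit(fetch_headers(url)))
```

It's a heuristic rather than a proof, so the origin access log remains the reference.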

To be continued...

This was a brief but hopefully useful demonstration of how we can easily interface Varnish and Hitch with docker-compose, but it's really just the beginning:

- Hitch has tons of useful features that we haven't touched, notably mutual TLS for setups like extranets or zero-trust networks

- Any container-based platform can leverage what we saw here with minimal adjustment

- And if you are not using containers, you can use the Linux packages directly with little fuss as the default Hitch and Varnish configurations are also aligned to work out-of-the-box with each other.