GraphQL is a query language and server-side runtime for APIs, designed to give clients exactly the data they ask for and to make it easier to aggregate data from multiple sources. It aims to make APIs fast and flexible, which is why it is common in the web stacks of high-traffic websites and e-commerce platforms. In these environments it’s often used to fetch real-time information such as prices and stock levels.

Why cache GraphQL?

GraphQL queries are often in the critical request chain of a web page, so they must be answered quickly. A slow GraphQL query can delay the loading of the rest of the page, hurting customer experience and Core Web Vitals metrics. At the same time, traffic spikes caused by large numbers of GraphQL queries can overload backend services, resulting in poor performance, downtime, or costly and inefficient CPU usage. Because a GraphQL API receives many client requests for data that often lives in a database, it is a prime candidate for caching!

As a powerful and flexible HTTP reverse proxy, Varnish can cache GraphQL responses and accelerate their delivery. Plus, with a few Varnish Modules (VMODs), it’s easy to add key features such as cache invalidation and persistence to your GraphQL caching setup.

How to cache GraphQL queries with Varnish

A GraphQL request can be either a GET request with the query in a URL parameter or a POST request with the query in the request body. POST requests are by far the more common of the two, because URL length is limited. Varnish can cache POST requests and serve the responses from cache with very low latency. Using the std and bodyaccess VMODs, Varnish can make the POST request body part of each request’s cache signature, so every unique query is cached separately. This also means that when Varnish receives several identical GraphQL queries at the same time and does not yet have the response in cache, the requests are coalesced and only a single request reaches the origin.
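To make the idea concrete, here is a minimal Python model of a cache signature that includes the request body. It is not VCL, and it is not how Varnish computes its hash internally. In VCL, the equivalent step is usually to buffer the body with `std.cache_req_body()` and add it to the hash with `bodyaccess.hash_req_body()`. The host, URL, and queries below are made-up examples.

```python
import hashlib


def cache_key(method: str, host: str, url: str, body: bytes = b"") -> str:
    """Model of a cache signature: method, host, and URL, plus the body for POST."""
    h = hashlib.sha256()
    for part in (method, host, url):
        h.update(part.encode())
        h.update(b"\0")  # separator so adjacent fields cannot run together
    if method == "POST":
        # Mixing the body into the hash gives each distinct query its own cache object.
        h.update(body)
    return h.hexdigest()


q1 = b'{"query": "{ product(id: 1) { price stock } }"}'
q2 = b'{"query": "{ product(id: 2) { price stock } }"}'
k1 = cache_key("POST", "shop.example", "/graphql", q1)
k2 = cache_key("POST", "shop.example", "/graphql", q2)
print(k1 == cache_key("POST", "shop.example", "/graphql", q1))  # True
print(k1 == k2)  # False
```

Two requests with the same body map to the same key, which lets identical in-flight queries share one backend fetch. Any difference in the body produces a separate cache entry.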


Instant, targeted cache invalidation

GraphQL queries often request data that changes frequently, so a solid cache invalidation strategy is needed to keep cached data up to date. Varnish supports regular purge requests, but purging objects one by one becomes impractical when invalidating larger sets of objects. This is where VMOD ykey comes in: it instantly invalidates every GraphQL query response tagged with a given key. With the help of Varnish Controller, our administration console, these invalidations can be executed instantly across any number of Varnish servers, keeping data consistent across your entire web service.
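The core of key-based invalidation is a reverse index from each key (tag) to the cached objects that carry it. Purging a key then removes all of those objects in one operation. Below is a minimal Python model of that idea. It is an assumption-labelled sketch and does not reproduce VMOD ykey’s actual API. The cache keys and `product:N` tags are hypothetical.

```python
from collections import defaultdict


class TaggedCache:
    """Toy model of key-based invalidation in the spirit of VMOD ykey (not its real API)."""

    def __init__(self):
        self.objects = {}              # cache key -> (response body, set of tags)
        self.index = defaultdict(set)  # tag -> cache keys carrying that tag

    def _unlink(self, cache_key):
        """Remove one object and detach it from every tag it carried."""
        entry = self.objects.pop(cache_key, None)
        if entry is not None:
            for tag in entry[1]:
                self.index[tag].discard(cache_key)
        return entry

    def insert(self, cache_key, body, tags):
        self._unlink(cache_key)  # replacing an object must drop its old tags
        self.objects[cache_key] = (body, set(tags))
        for tag in tags:
            self.index[tag].add(cache_key)

    def get(self, cache_key):
        entry = self.objects.get(cache_key)
        return entry[0] if entry else None

    def purge_tag(self, tag):
        """Remove every object tagged with `tag` and return how many were removed."""
        removed = 0
        for cache_key in self.index.pop(tag, set()):
            if self._unlink(cache_key) is not None:
                removed += 1
        return removed


tagged = TaggedCache()
tagged.insert("q-product-1", '{"price": 9.99}', ["product:1"])
tagged.insert("q-category-7", '{"products": [1, 2]}', ["product:1", "product:2", "category:7"])
tagged.insert("q-product-3", '{"price": 4.50}', ["product:3"])

print(tagged.purge_tag("product:1"))  # 2
print(tagged.get("q-product-3"))      # {"price": 4.50}
```

A single purge of `product:1` removes both the product query and the category query that embeds that product. Unrelated responses stay cached.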

Indexing and parsing query responses

For more granular control over the keys assigned to each GraphQL query, VMOD edgestash can index the query response on the fly, and VMOD json can parse it. This gives complete control over the keys assigned to a given GraphQL query, based on the actual response. You could, for example, invalidate every GraphQL response in your cache that contains a specific element.
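The sketch below is a plain Python model of deriving keys from a response. It is not VMOD edgestash or VMOD json. It assumes each object in the response carries `__typename` and `id` fields, which is a common client convention rather than something GraphQL or Varnish guarantees. It turns those fields into hypothetical `Type:id` keys that could later be invalidated.

```python
import json


def tags_from_response(body: str) -> set[str]:
    """Collect a 'Type:id' tag for every object in a GraphQL JSON response's data."""
    tags = set()

    def walk(node):
        if isinstance(node, dict):
            # Assumed convention: identifiable objects expose __typename and id.
            if "__typename" in node and "id" in node:
                tags.add(f"{node['__typename']}:{node['id']}")
            for value in node.values():
                walk(value)
        elif isinstance(node, list):
            for item in node:
                walk(item)

    walk(json.loads(body).get("data"))
    return tags


response = """{"data": {"category": {"__typename": "Category", "id": "7", "products": [
  {"__typename": "Product", "id": "1", "price": 9.99},
  {"__typename": "Product", "id": "2", "price": 4.5}]}}}"""
print(sorted(tags_from_response(response)))  # ['Category:7', 'Product:1', 'Product:2']
```

Tagging a cached response with these keys would let a change to `Product:2` invalidate every response that contains that product, whatever query produced it.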

Another module, VMOD xbody, can read the GraphQL request body. When building the cache signature for a GraphQL POST request, it can be useful to omit parts of the query that introduce variance without meaningfully changing the server response. VMOD xbody lets you pick out only the parts of the incoming GraphQL query that matter. You can also go further and strip unneeded variance from the request body to “normalize” the cache key.
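As an illustration of normalization, here is a minimal Python sketch, not VMOD xbody itself. It hashes only the fields assumed to affect the response. Which fields to keep is a hypothetical policy: `query`, `operationName`, and `variables` are kept, while anything else (such as a made-up `clientTrace` field) is ignored. Whitespace in the query and key order in the variables are also normalized.

```python
import hashlib
import json
import re


def normalized_key(body: bytes) -> str:
    """Hash only the parts of a GraphQL POST body assumed to affect the response."""
    payload = json.loads(body)
    # Collapse whitespace runs so formatting differences share one cache entry.
    query = re.sub(r"\s+", " ", payload.get("query") or "").strip()
    operation = payload.get("operationName") or ""
    # Sorted keys and compact separators make variable order irrelevant.
    variables = json.dumps(payload.get("variables") or {}, sort_keys=True, separators=(",", ":"))
    # Every other top-level field (e.g. a tracing ID) is deliberately left out.
    return hashlib.sha256("\n".join([operation, query, variables]).encode()).hexdigest()


a = b'{"query": "query P($id: ID!) {\\n  product(id: $id) { price }\\n}", "variables": {"id": "1", "currency": "EUR"}, "clientTrace": "abc"}'
b = b'{"variables": {"currency": "EUR", "id": "1"}, "query": "query P($id: ID!) { product(id: $id) { price } }"}'
print(normalized_key(a) == normalized_key(b))  # True
```

The whitespace rule here is deliberately crude: it would also alter whitespace inside string literals in a query, so a production setup would normalize with a real GraphQL parser. Any field that does change the response must stay in the key. For example, if clients send persisted-query hashes in `extensions`, that field must be kept.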

With automatic cache invalidation in place, we can store query responses with a long TTL, knowing that they will be invalidated and pre-warmed back into the cache after the next change in the backend. Paired with VMOD xbody, VMOD ykey lets you add keys for specific GraphQL nodes, purge exact queries, and ban using a regex that matches on a request header.
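To show how a regex ban differs from a purge, here is a small Python model. Instead of deleting objects immediately, a ban records a rule, and objects are checked against it lazily when they are looked up. It only applies to objects that were already cached when the ban was issued. This simplifies Varnish’s real ban mechanism (there is no ban lurker, for instance). In this model, the relevant request header is saved with each object, and the header name `X-GraphQL-Operation` is hypothetical.

```python
import itertools
import re


class BanList:
    """Toy model of Varnish-style bans: a regex tested against a header saved with each object."""

    def __init__(self):
        self._tick = itertools.count()  # logical clock ordering inserts and bans
        self.objects = {}  # cache key -> (inserted at, headers, body)
        self.bans = []     # (issued at, header name, compiled regex)

    def insert(self, key, headers, body):
        self.objects[key] = (next(self._tick), dict(headers), body)

    def ban(self, header, pattern):
        self.bans.append((next(self._tick), header, re.compile(pattern)))

    def lookup(self, key):
        entry = self.objects.get(key)
        if entry is None:
            return None
        inserted_at, headers, body = entry
        for issued_at, header, regex in self.bans:
            # A ban only affects objects that were cached before it was issued.
            if issued_at > inserted_at and regex.search(headers.get(header, "")):
                del self.objects[key]  # banned: treat as a miss
                return None
        return body


banned = BanList()
banned.insert("q1", {"X-GraphQL-Operation": "GetProduct"}, "product json")
banned.insert("q2", {"X-GraphQL-Operation": "GetCategory"}, "category json")
banned.ban("X-GraphQL-Operation", r"^GetProd")
print(banned.lookup("q1"))  # None
print(banned.lookup("q2"))  # category json
```

Because a ban only affects older objects, a fresh response fetched after the ban is served normally. This is what makes long TTLs safe once invalidation is automated.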

Cache persistence and cache misses

With the Varnish Massive Storage Engine (MSE), Varnish Enterprise can cache very large amounts of data. Enabling MSE with disk persistence greatly increases the amount of content a single node can store. This makes caching the long tail much easier, and it means the cache survives a Varnish restart or a server power loss.

Knowing when not to cache

If many unique GraphQL queries are sent only once or twice and then never again, you can use the backend misses counter in VMOD utils to avoid inserting their responses into the cache at all. When an object is marked as uncacheable, Varnish inserts a tiny Hit-For-Miss object into the cache. That tiny object remembers how many times it has been looked up, so you can cache a GraphQL query only once it has been requested a certain number of times within its TTL. This prevents the sheer number of different GraphQL queries from filling up your cache in the first place.
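The counting logic can be modeled in a few lines of Python. A small marker per query stands in for the Hit-For-Miss object. It counts misses until it expires, and only once the count reaches a threshold is the real response admitted to the cache. This is a sketch of the policy, not of VMOD utils. The `threshold` and `window` values are hypothetical tuning knobs, and the clock is injectable so the behavior can be tested.

```python
import time


class AdmissionFilter:
    """Cache a response only after its key has missed `threshold` times within `window` seconds."""

    def __init__(self, threshold=2, window=60.0, clock=time.monotonic):
        self.threshold = threshold
        self.window = window
        self.clock = clock
        self.markers = {}  # cache key -> (marker expiry time, misses counted so far)

    def should_cache(self, key):
        now = self.clock()
        entry = self.markers.get(key)
        if entry is None or now >= entry[0]:
            # No live marker: start a fresh count with a new expiry.
            expires_at, misses = now + self.window, 0
        else:
            expires_at, misses = entry
        misses += 1
        if misses >= self.threshold:
            # Requested often enough: cache the real response and drop the marker.
            self.markers.pop(key, None)
            return True
        # Not yet: keep only the small marker instead of caching the response.
        self.markers[key] = (expires_at, misses)
        return False


fake_now = [0.0]
admission = AdmissionFilter(threshold=2, window=10.0, clock=lambda: fake_now[0])
print(admission.should_cache("query-a"))  # False: first miss only creates a marker
print(admission.should_cache("query-a"))  # True: second miss within the window
admission.should_cache("query-b")
fake_now[0] = 30.0
print(admission.should_cache("query-b"))  # False: marker expired, count restarts
```

One-off queries only ever leave a marker behind, so the cache fills with responses that are actually requested repeatedly.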

Using VMOD mse, we can store all GraphQL responses in a dedicated MSE store with a pre-allocated amount of disk. This way, a flood of GraphQL responses cannot cause premature evictions of other content, such as images and video segments.
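The benefit of a dedicated store is isolation: eviction pressure in one store never reaches another. Below is a minimal Python model with two byte-bounded LRU stores. It is not MSE or VMOD mse. The store sizes and the rule that routes URLs under `/graphql` to their own store are hypothetical.

```python
from collections import OrderedDict


class LRUStore:
    """Byte-bounded store that evicts its least recently used objects when full."""

    def __init__(self, capacity_bytes):
        self.capacity = capacity_bytes
        self.used = 0
        self.items = OrderedDict()  # cache key -> response bytes

    def put(self, key, value):
        if len(value) > self.capacity:
            return  # larger than the whole store: don't cache it
        if key in self.items:
            self.used -= len(self.items.pop(key))
        while self.used + len(value) > self.capacity:
            _, evicted = self.items.popitem(last=False)  # least recently used first
            self.used -= len(evicted)
        self.items[key] = value
        self.used += len(value)

    def get(self, key):
        if key not in self.items:
            return None
        self.items.move_to_end(key)  # mark as recently used
        return self.items[key]


# Hypothetical sizes: a small dedicated store for GraphQL, a larger one for everything else.
stores = {"graphql": LRUStore(1_000), "default": LRUStore(10_000)}


def store_for(url):
    # Hypothetical routing rule: anything under /graphql goes to its own store.
    return stores["graphql"] if url.startswith("/graphql") else stores["default"]


store_for("/img/logo.png").put("/img/logo.png", b"x" * 5_000)
for i in range(50):  # a burst of one-off GraphQL responses
    key = f"/graphql#{i}"
    store_for(key).put(key, b"y" * 100)

print(len(stores["graphql"].items))                        # 10
print(stores["default"].get("/img/logo.png") is not None)  # True
```

The burst of GraphQL responses only churns its own store, and the image in the default store is untouched.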

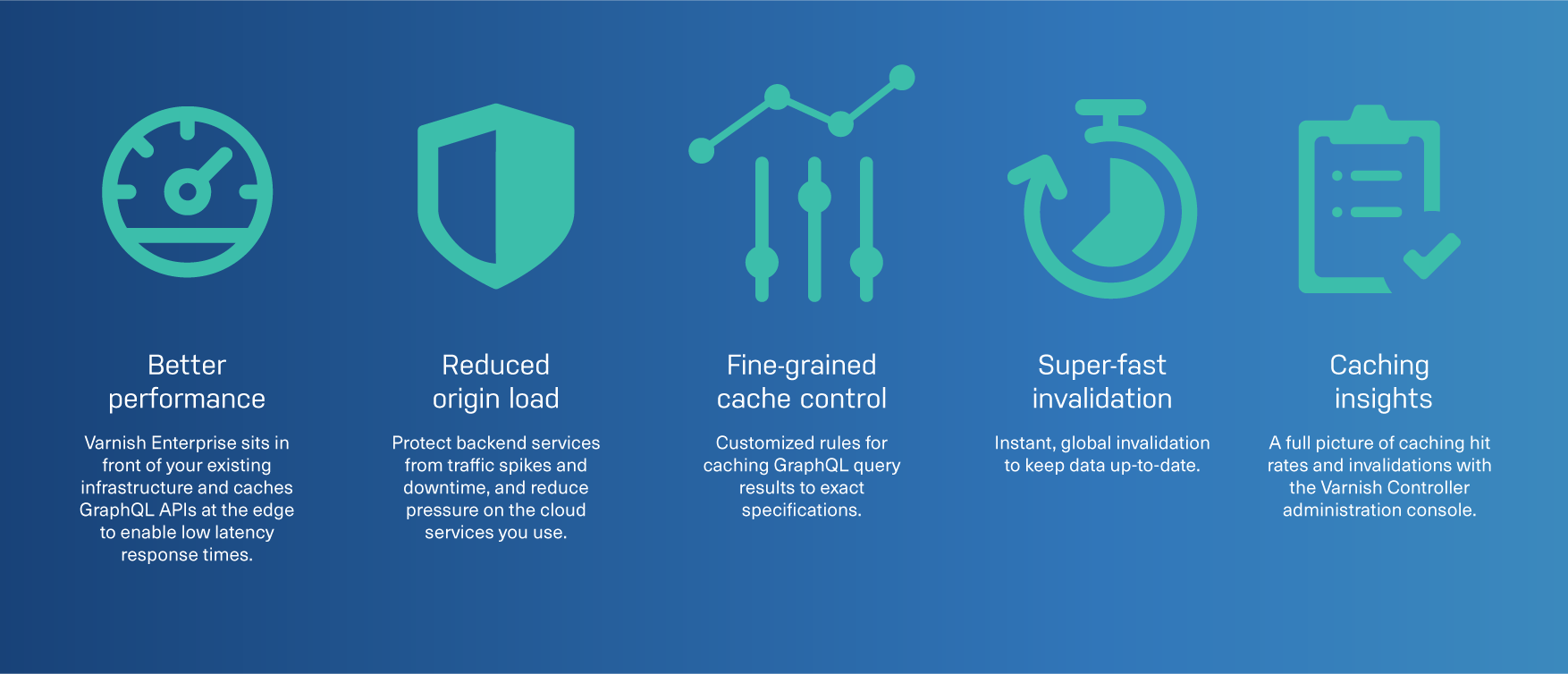

Five reasons to cache GraphQL queries and responses with Varnish Enterprise

1. Better performance - Varnish Enterprise sits in front of your existing infrastructure and caches GraphQL API responses at the edge for low-latency response times.

2. Reduced origin load - Protect backend services from traffic spikes and downtime, and reduce pressure on the cloud services you use.

3. Fine-grained cache control - Customize the rules for caching GraphQL query results to your exact specifications.

4. Super-fast invalidation - Instant, global invalidation keeps data up to date.

5. Caching insights - A full picture of caching hit rates and invalidations with the Varnish Controller administration console.