Following up on the test of dmcache, we decided to scale it up a bit to get better proportions between RAM, SSD and HDD. So we took the dataset and made it 10 times bigger. It is now somewhere around 30GB, with an average object size of 800KB. In addition, we made the backing store for Varnish ten times bigger as well, increasing it from 2GB to 20GB. This way we retain the cache hit rate of around 84%, but we change the internal cache hit rates significantly.

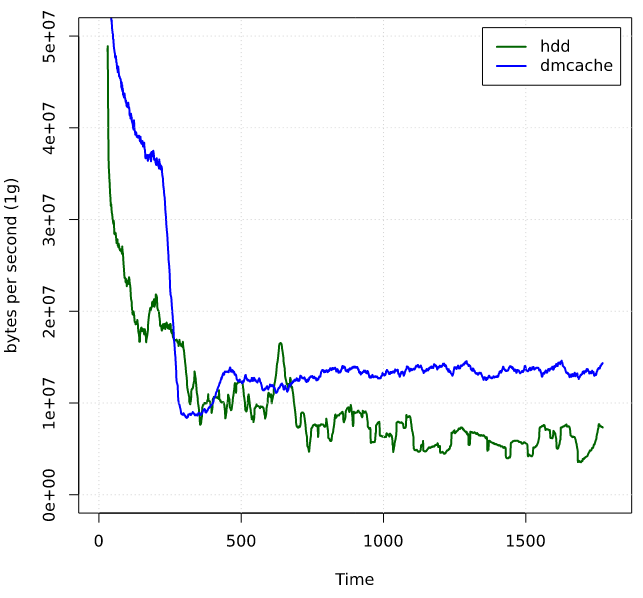

The results were pretty amazing and show what a powerful addition dmcache can be for IO-intensive workloads.

The flash device for dmcache is now 4GB, giving it significant capacity to cache reads as well as writes.

We’re still running XFS and the server is still limited to 1GB of memory. This should give reasonable RAM/SSD/HDD ratios: 1/4/20.
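To make the proportions concrete, here is a rough back-of-the-envelope sketch of the tier sizes. It assumes decimal units (1GB = 10^9 bytes, 1KB = 10^3 bytes) and treats the approximate figures above as exact. The RAM row is an upper bound, since not all of the 1GB is available as page cache. The function and variable names are made up for illustration.

```python
GB = 10**9  # assumption: decimal gigabytes
KB = 10**3  # assumption: decimal kilobytes

# Sizes taken from the text; treated as exact for the arithmetic.
tiers = {
    "RAM": 1 * GB,
    "SSD (dmcache)": 4 * GB,
    "HDD (Varnish store)": 20 * GB,
}
dataset_bytes = 30 * GB        # "somewhere around 30GB"
avg_object_bytes = 800 * KB    # average object size


def tier_summary(tiers, dataset_bytes, avg_object_bytes):
    """Return per-tier ratio, object capacity and share of the dataset."""
    base = min(tiers.values())
    rows = []
    for name, size in tiers.items():
        rows.append({
            "tier": name,
            "ratio": size / base,                      # relative to the smallest tier
            "objects": size // avg_object_bytes,       # average-sized objects that fit
            "dataset_fraction": size / dataset_bytes,  # share of the full dataset
        })
    return rows


summary = tier_summary(tiers, dataset_bytes, avg_object_bytes)
for row in summary:
    print(f'{row["tier"]:<20} ratio={row["ratio"]:>4.0f} '
          f'objects={row["objects"]:>6} '
          f'dataset={row["dataset_fraction"]:.0%}')
```

Note that the share of the dataset a tier can hold is not the same as its hit rate, since hot objects are requested far more often than cold ones.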

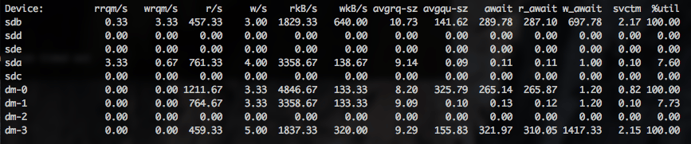

While running the tests I paid close attention to the IO characteristics of the server. iostat -x gives you a nice view of how the IO subsystem is performing. What impressed me was how many read requests the SSD was actually serving. If you subtract the number of writes to the HDD (these would be the blocks migrated from the SSD to the HDD) from the number of reads on the SSD, what remains is mostly cache hits for IO operations requested by Varnish. Seeing these figures relatively high indicates that the SSD is helping out with reads quite a bit. Of course, a few of them might be internal metadata requests as well.
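The subtraction described above can be sketched in a few lines of Python. This is a rough estimate only, not an exact hit counter: it assumes the iostat -x device report has a header line starting with "Device" and includes r/s and w/s columns (column sets differ between sysstat versions, so columns are looked up by name). The sample text is made up for illustration and is not output from the test server; the function names are hypothetical too.

```python
def parse_iostat_x(text):
    """Parse one `iostat -x` device report into {device: {column: value}}."""
    devices = {}
    columns = None
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            columns = None  # a blank line ends the current report
            continue
        if fields[0].startswith("Device"):
            columns = fields[1:]  # e.g. ["rrqm/s", "wrqm/s", "r/s", "w/s", ...]
            continue
        if columns and len(fields) == len(columns) + 1:
            try:
                values = [float(v) for v in fields[1:]]
            except ValueError:
                continue  # skip rows that are not purely numeric
            devices[fields[0]] = dict(zip(columns, values))
    return devices


def estimated_ssd_read_hits(devices, ssd="sda", hdd="sdb"):
    """SSD reads per second minus HDD writes per second, floored at zero.

    HDD writes are assumed to be blocks migrated from the SSD, so the
    remainder approximates reads served from the cache.
    """
    return max(0.0, devices[ssd]["r/s"] - devices[hdd]["w/s"])


# Made-up sample with a reduced column set, for illustration only.
SAMPLE = """\
Device:  rrqm/s  wrqm/s  r/s     w/s
sda      0.00    0.00    400.00  120.00
sdb      0.00    0.00    90.00   150.00
"""

devices = parse_iostat_x(SAMPLE)
estimate = estimated_ssd_read_hits(devices)
print(f"estimated SSD read hits: {estimate:.0f} per second")
```

Since r/s and w/s count requests rather than blocks, and some SSD reads are metadata lookups, the result is best read as a trend indicator.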

In the screenshot, sda is the flash drive I'm using and sdb is the HDD currently being tortured. dm-0 through dm-3 are the various device-mapper devices that are set up; you can ignore those here.

In addition there are of course many reads which are being served by the page cache. These are mostly for the really hot content, which gets pegged in memory.

When running the same tests with dmcache disabled, the server was struggling hard. We had quite a few requests failing due to timeouts, as the IO system very quickly became swamped when asked to perform hundreds of IO-bound requests in parallel.

As you can see, the flash drive helps a lot initially. As the drive fills up, performance is somewhat reduced. However, it does stabilize at a much higher level than with the HDD alone. With the flash drive the server is pushing around 120Mbit/s, whereas it manages only around half of that without dmcache enabled.

We're working together with a CDN customer to try to test this on a real-world benchmark with VOD workloads. Hopefully we'll be able to provide some more insight into how these tests go. I'm expecting great results. Stay tuned.