This week's episode is about load balancing: distributing traffic across a collection of underlying servers, using an algorithm to spread the load evenly.

In the case of Varnish, load balancing means Varnish can connect to multiple backends and distribute the backend fetches across them using a distribution algorithm. This is handled by a module called the directors VMOD, which ships with every Varnish installation and is in charge of load balancing.

You'll use the directors VMOD for scalability, when there is high load on the origin or a potential traffic spike, and for high availability, in case an origin server fails. It's a matter of having enough origin capacity to deal with the following use cases:

- Potential cache misses on long-tail content: this is content that is requested too rarely to always be in the cache

- Impact of uncacheable content: these requests always reach the origin, so it needs enough capacity not to be overwhelmed

- Multi-tier Varnish architectures: one tier connects to the next, thanks to intelligent load balancing capabilities

- Planning for failure: load balancers will only select backends that are healthy
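
For health-based selection to work, each backend needs a health probe; a backend without one is always considered healthy. Here is a minimal sketch, assuming a hypothetical origin hostname and a hypothetical `/health` endpoint. The timings and thresholds are illustrative, not recommendations.

```vcl
vcl 4.1;

# Hypothetical probe: URL, timings and thresholds are illustrative.
probe origin_probe {
    .url = "/health";   # assumed health-check endpoint on the origin
    .interval = 5s;     # send a probe every 5 seconds
    .timeout = 2s;      # a probe fails if no response arrives within 2 seconds
    .window = 5;        # look at the last 5 probes
    .threshold = 3;     # at least 3 of them must succeed to count as healthy
}

# Hypothetical backend: the hostname is a placeholder.
backend backend1 {
    .host = "origin1.example.com";
    .port = "80";
    .probe = origin_probe;
}
```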

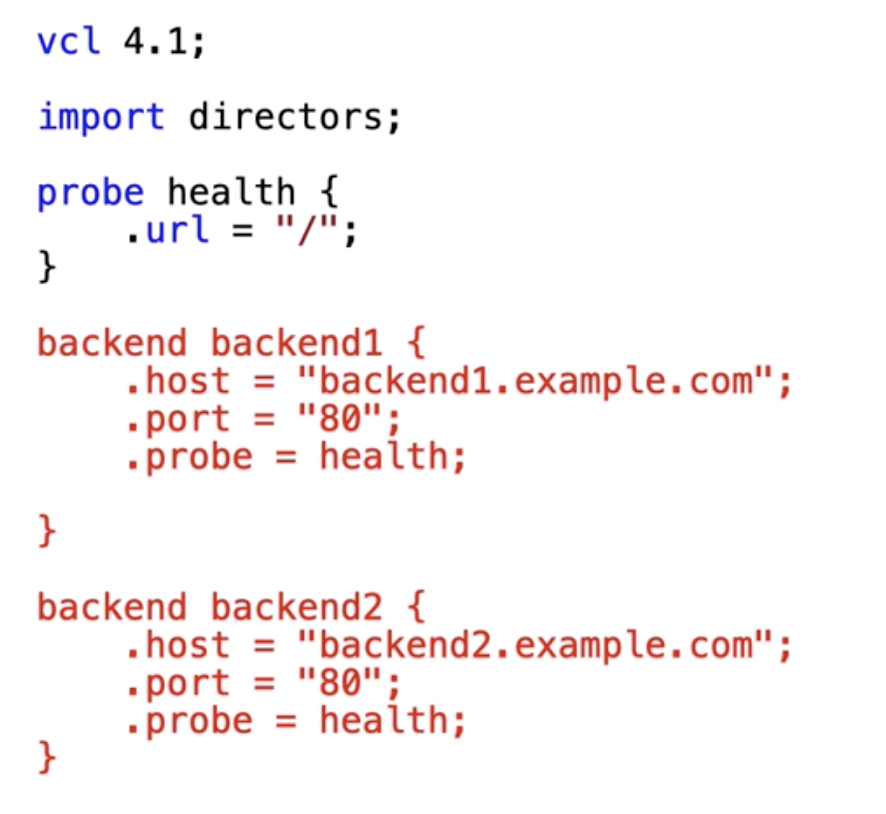

So let's talk about the implementation now and leverage the directors VMOD. It all starts when you import the directors VMOD in your VCL file. You need to define the backends you’ll load balance across, and then, in vcl_init, you’ll have to create a director object:

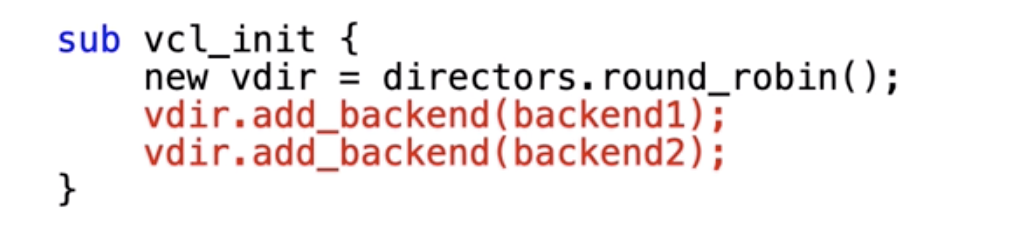

You do this by calling a function on directors. The function you choose determines the distribution algorithm to use, for example directors.round_robin. The backends that you add will then be selected based on that algorithm each time you call vdir.backend().

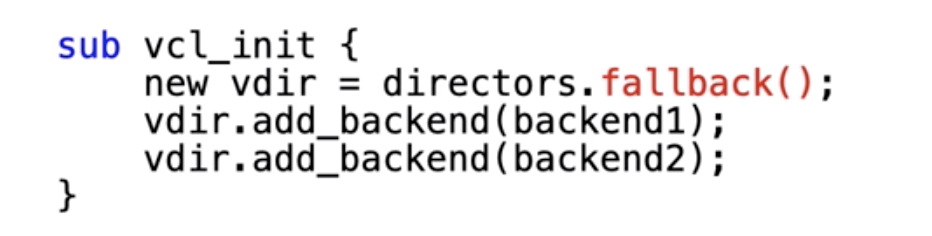
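
Put together, the steps above can be sketched as a single VCL file. This is a minimal sketch, not a production configuration: the backend names and hostnames are hypothetical, and assigning the result to `req.backend_hint` in `vcl_recv` is one common place to select the backend.

```vcl
vcl 4.1;

import directors;

# Hypothetical backends: hostnames are placeholders.
backend backend1 {
    .host = "origin1.example.com";
    .port = "80";
}

backend backend2 {
    .host = "origin2.example.com";
    .port = "80";
}

sub vcl_init {
    # Create the director object; the function picks the algorithm.
    new vdir = directors.round_robin();
    vdir.add_backend(backend1);
    vdir.add_backend(backend2);
}

sub vcl_recv {
    # Ask the director for a backend for this request.
    set req.backend_hint = vdir.backend();
}
```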

So in this case it's round-robin. This means first backend one, then backend two, and then the cycle repeats. Of course, randomness can be applied as well: you change the function to directors.random and assign each backend a weight to control the randomness.

Here's an example of the fallback director, which is used for high availability. Backend one will always be used, but if it fails, backend two will be selected.

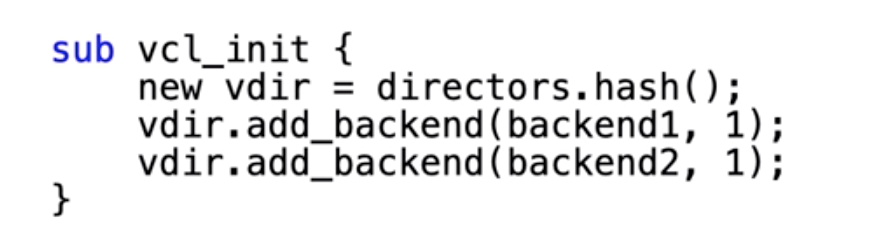
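
As a rough sketch of the fallback setup, assuming the same hypothetical backend names and placeholder hostnames, the backends are tried in the order they are added:

```vcl
vcl 4.1;

import directors;

# Hypothetical backends: hostnames are placeholders.
backend backend1 {
    .host = "origin1.example.com";
    .port = "80";
}

backend backend2 {
    .host = "origin2.example.com";
    .port = "80";
}

sub vcl_init {
    # The first healthy backend in insertion order is selected.
    new vdir = directors.fallback();
    vdir.add_backend(backend1);   # preferred backend
    vdir.add_backend(backend2);   # used only when backend1 is unhealthy
}

sub vcl_recv {
    set req.backend_hint = vdir.backend();
}
```

A failure is only detected if the backend has a health probe attached. For the random director, the same sketch would use `directors.random()` and pass a weight as the second argument, for example `vdir.add_backend(backend1, 3.0);` next to `vdir.add_backend(backend2, 1.0);`, which would send roughly three quarters of the fetches to backend one.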

The final one we'll talk about today is the hash director, which creates affinity between a specific string value and a backend server. If that value is the client IP, every request from that client will end up on the same node. If we change the client IP to the request URL, there's an affinity between the request URL and the server. Basically, the key could be any string.
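
A minimal sketch of the hash director, again with hypothetical backend names and placeholder hostnames. The equal weights are an assumption made for illustration; the string passed to `vdir.backend()` is the key that determines which backend is chosen.

```vcl
vcl 4.1;

import directors;

# Hypothetical backends: hostnames are placeholders.
backend backend1 {
    .host = "origin1.example.com";
    .port = "80";
}

backend backend2 {
    .host = "origin2.example.com";
    .port = "80";
}

sub vcl_init {
    new vdir = directors.hash();
    # The hash director takes a weight per backend; 1.0 each means equal shares.
    vdir.add_backend(backend1, 1.0);
    vdir.add_backend(backend2, 1.0);
}

sub vcl_recv {
    # Same client IP, same backend. Passing req.url instead would
    # create affinity between the URL and the backend.
    set req.backend_hint = vdir.backend(client.ip);
}
```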

To find out more about load balancing with Varnish, check out the following resources: