Parallel ESI is a performance-enhanced version of open source ESI. Parallel ESI issues all the ESI includes in parallel, upfront, so a single slow include does not slow down other operations. Open source ESI processes includes serially, one command at a time. If a single include is slow, the whole delivery pipeline is stalled.
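The difference between the two strategies can be illustrated with a small simulation. This is a minimal sketch, not Varnish code: `fetch_include` is a hypothetical stand-in for a backend fetch, and the delays are made-up values chosen only to show one slow include among fast ones.

```python
import asyncio
import time


async def fetch_include(delay_s: float) -> str:
    """Hypothetical stand-in for fetching one ESI include from the backend."""
    await asyncio.sleep(delay_s)  # simulated backend latency
    return f"fragment({delay_s})"


async def assemble_serial(delays):
    # One include at a time: total time is roughly the sum of all delays.
    return [await fetch_include(d) for d in delays]


async def assemble_parallel(delays):
    # All includes issued upfront: total time is roughly the slowest delay.
    # gather() returns results in the order the includes were listed.
    return await asyncio.gather(*(fetch_include(d) for d in delays))


def timed(assemble, delays):
    """Run one assembly strategy and return (fragments, elapsed seconds)."""
    start = time.perf_counter()
    fragments = asyncio.run(assemble(delays))
    return fragments, time.perf_counter() - start


if __name__ == "__main__":
    delays = [0.05, 0.20, 0.05]  # assumed values: one slow include, two fast
    for name, strategy in (("serial", assemble_serial),
                           ("parallel", assemble_parallel)):
        _, elapsed = timed(strategy, delays)
        print(f"{name}: {elapsed * 1000:.0f} ms")
```

In this toy model the serial run takes about as long as all delays added together, while the parallel run is bounded by the single slowest include.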

Parallel ESI brings the most benefit when ESI includes are not cacheable, not yet cached, or frequently updated.

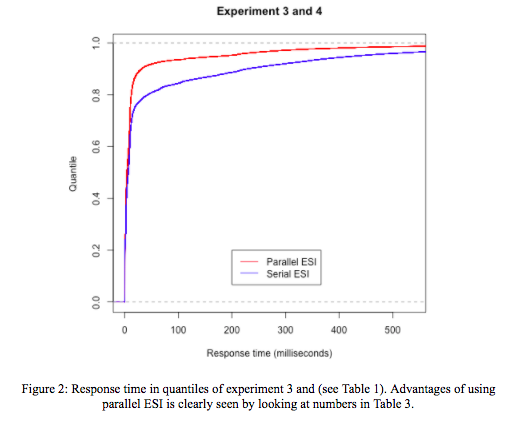

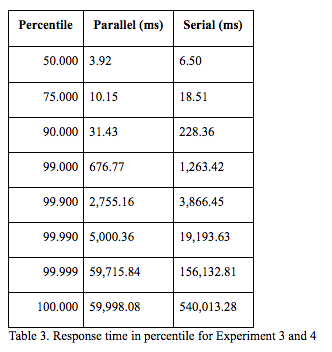

Results from experiments in controlled and real scenarios show a reduction in response time on the order of hundreds of milliseconds. These measurements include a mix of all factors that impact the performance of ESI processing in Varnish.

To put a reduction of hundreds of milliseconds in perspective, remember that Google search favors pages that load quickly, and recent studies suggest that users tolerate only about 2 seconds of load time before quality of experience decreases or they leave the page entirely.

Goals and testbed

The goal of this benchmark is to compare the performance of parallel and serial ESI. The testbed consists of two computers running a server-client application.

Computer A acts as a server, and runs:

- Varnish

  - for serial ESI: Varnish Cache Plus varnish-plus-4.1.4r2-beta1 revision 48ecb09, or

  - for parallel ESI: Varnish Cache Plus varnish-plus-4.1.4r2-beta1 revision 4c2f468f from branch parallel_esi

- NGINX as backend server

- PHP-FPM (FastCGI Process Manager) to introduce resource processing latency at the backend

Computer B acts as a client, and runs:

- wrk2 from GitHub commit c4250acb

The service offered by the testbed is the delivery of well-formed web pages. The web pages are built in Varnish using different ESI templates. These ESI templates are listed in the section below called "Design experiments".

The main performance metric presented in this blog post is the response time at the client, i.e., the time to last received byte. In addition to the measured response time, results show differences in CPU time utilization per request on the order of microseconds.

If you are interested in the full details of the testbed parameters, please do not hesitate to contact us.

Metrics

- Response time at the client, i.e., time to last received byte

- CPU process time per request in Varnish

Design experiments

We evaluated both the testbed and data from real clients of a service deployed in production (for confidentiality reasons, we cannot name the service provider). To introduce processing latency at the backend in the testbed, the ESI pages are PHP scripts with explicit delays.

In the testbed, response time is measured with the client simulator wrk2, and CPU time is measured with the Linux kernel feature cgroups. In the deployment in production, response time is obtained by parsing Varnish logs.
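As an illustration of how a per-request CPU figure can be derived from cgroups accounting, here is a minimal sketch. It assumes a cgroup v1 `cpuacct` controller, whose `cpuacct.usage` file reports cumulative CPU time in nanoseconds; the post does not say which cgroup version or files were actually used, and the directory path and request count are hypothetical inputs.

```python
from pathlib import Path

NS_PER_MS = 1_000_000


def read_cpu_usage_ns(cgroup_dir: str) -> int:
    """Read cumulative CPU time in nanoseconds.

    Assumes a cgroup v1 cpuacct directory containing `cpuacct.usage`;
    the path itself is supplied by the caller and is hypothetical here.
    """
    return int(Path(cgroup_dir, "cpuacct.usage").read_text().strip())


def cpu_ms_per_request(usage_before_ns: int, usage_after_ns: int,
                       requests: int) -> float:
    """Average CPU time per request in ms between two usage readings."""
    if requests <= 0:
        raise ValueError("requests must be positive")
    return (usage_after_ns - usage_before_ns) / requests / NS_PER_MS
```

Taking one reading before and one after a measurement run, and dividing the difference by the number of requests served in between, yields an average comparable across runs.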

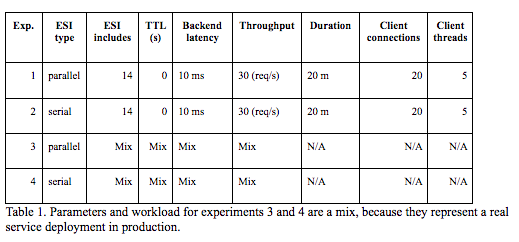

All experiments are preceded by a 10 to 20 second warm-up phase. The experiments are summarized in Table 1.

The values of parameters and workload in the experiments are selected to show the behavior of parallel and serial ESI. These values avoid creating a bottleneck at the backend.

Results

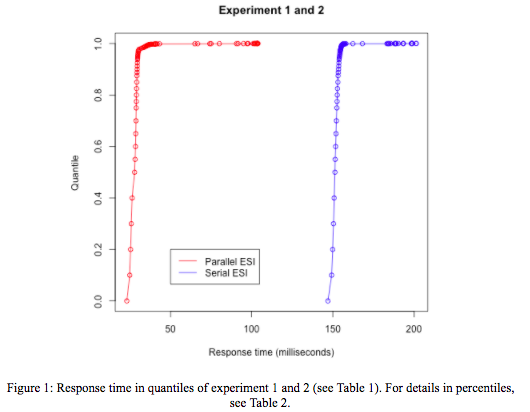

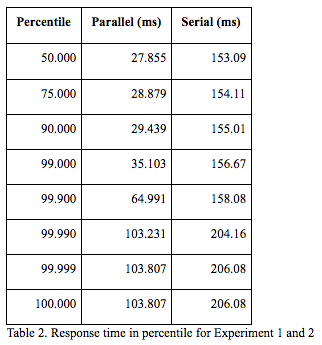

Response time for client requests is presented in quantiles. The shorter the response time, the better the performance. The average CPU process time per request in Varnish during controlled Experiments 1 and 2 is 7.519 and 7.601 ms respectively.
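For readers who want to produce a similar quantile view from their own measurements, the following is a minimal sketch using Python's standard library. The function name and the choice of percentiles are illustrative assumptions, not the tooling used for this benchmark.

```python
import statistics


def response_time_percentiles(samples_ms, percentiles=(50, 90, 99)):
    """Return {percentile: response time} for a list of samples in ms.

    statistics.quantiles with n=100 returns 99 cut points, so the
    p-th percentile is at index p - 1. Percentiles must be in 1..99.
    """
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {p: cuts[p - 1] for p in percentiles}
```

Calling `response_time_percentiles(samples)` on a list of per-request response times gives the median and the tail latencies, which are usually more informative than the mean when a few slow includes dominate.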

Conclusion

The performance of ESI depends on a number of factors, namely ESI includes, ESI nesting, the cache policy per ESI include, and the processing latency at the backend. The gain from using parallel ESI over serial ESI depends on the configuration of these factors.
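To see how these factors interact, consider a deliberately simplified latency model. It is an assumption-laden sketch, not a description of the Varnish implementation: it ignores parsing, delivery, and queuing time, treats a cached include as free, and assumes nested includes can only be fetched after their parent fragment has arrived. The `Include` type and all latency values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Include:
    backend_latency_ms: float   # time to fetch this fragment on a cache miss
    cached: bool = False        # cache hit: modeled as zero fetch time
    children: List["Include"] = field(default_factory=list)  # nested includes


def own_cost_ms(inc: Include) -> float:
    return 0.0 if inc.cached else inc.backend_latency_ms


def serial_ms(inc: Include) -> float:
    # Fetch the fragment, then process its includes one after another.
    return own_cost_ms(inc) + sum(serial_ms(c) for c in inc.children)


def parallel_ms(inc: Include) -> float:
    # Fetch the fragment, then issue its includes together:
    # the slowest child determines when this level completes.
    slowest = max((parallel_ms(c) for c in inc.children), default=0.0)
    return own_cost_ms(inc) + slowest


# Hypothetical page: cached template, three includes, one of them nested.
page = Include(0.0, cached=True, children=[
    Include(100.0),
    Include(300.0, children=[Include(50.0)]),
    Include(200.0, cached=True),
])
```

For this page the model gives 450 ms for serial processing and 350 ms for parallel processing. If every include were cached, both figures would drop to zero, which matches the observation that the gain depends on the cache policy and backend latency.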

In this benchmark, we varied those factors and measured the total response time to receive pages formed with ESI includes. Measurements show that response time varies from tens to thousands of milliseconds.

Results from experiments in a real scenario show a decrease in response time at every measured percentile. This real scenario includes a mix of all factors that impact the performance of ESI processing in Varnish.

Parallel ESI is part of Varnish Plus. If you are a Varnish Plus customer, you are already benefiting from it. If not, we invite you to give it a try by requesting a free Varnish Plus trial.