According to a Cisco forecast, global mobile traffic will surpass desktop traffic by 2020, with mobile data traffic projected to reach 30.6 exabytes per month, roughly 30.6 billion gigabytes.

Desktop connections are fast and reliable, and we, as users, are used to this level of speed and performance. Naturally, we expect the same from a mobile connection.

On top of this, the average web page size has grown from 700 KB in 2010 to 2,509 KB in 2016.

Overall, this means we are constantly trying to push more data over the same mobile infrastructure we had in 2010… and this, of course, leads to poor mobile performance.

We, as website developers or sysadmins, can’t change, modify or improve the infrastructure mobile carriers use to provide connectivity, but we can definitely improve mobile content delivery with software.

Today caching is a de facto best practice, and I’m going to assume that everyone reading this blog post has implemented caching for both the desktop and mobile versions of their website. Caching boosts content delivery performance, but there are at least three other components that can make your mobile website even faster:

SSL/TLS

Suggesting SSL/TLS as a possible solution is probably the most controversial suggestion I can make. SSL/TLS is a hot topic at the moment; everyone out there in the magic world of the internet is talking about it, and websites are slowly shifting to HTTPS only.

There is a good reason behind this suggestion: encrypted data can travel faster over mobile connections than unencrypted data.

Why? Mobile carriers use their own proxies to reduce bandwidth usage in their networks. Unencrypted data is filtered by those proxies, which adds extra latency, while encrypted traffic bypasses them, providing a faster experience for the end user.

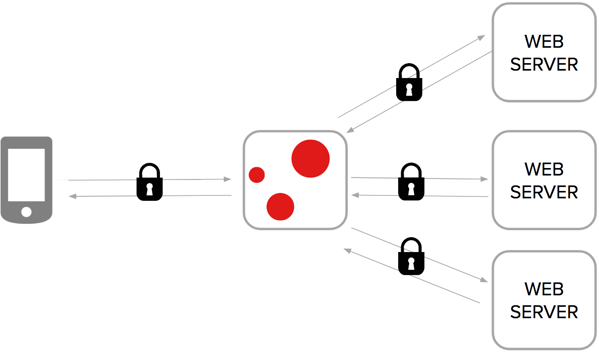

With Varnish Plus you can implement SSL/TLS on both the client side and the backend side of your architecture. On the client side you can use Hitch, an open source SSL/TLS terminator fully supported by Varnish Software. On the backend side, enabling secure connections is just a matter of switching the backend's secure mode on or off.


PARALLEL ESI

Both Varnish Cache and Varnish Plus support ESI (Edge Side Includes), but they process ESI includes in two completely different ways.

Varnish Cache handles one ESI include at a time. If a page has 4 ESI includes to be fetched from the backend, Varnish Cache performs 4 backend fetches, one after another, before it has all the content it needs to fulfill the client request.

Varnish Plus, in the same scenario, issues the 4 backend fetches in parallel, which provides a huge performance boost.

Processing ESI includes with parallel fetches reduces the time to first byte by up to 50%, providing an improved and more personalized experience for the user.

Parallel ESI is part of the latest Varnish Plus release and doesn’t require any configuration - it works out of the box.
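To make the difference between the two strategies concrete, here is a small Python model of them. It is not how Varnish is implemented. It simulates 4 hypothetical includes (`/header`, `/nav`, `/cart`, `/footer`), each with an assumed backend latency of 50 ms, and uses `asyncio.sleep` in place of real backend fetches. Fetching one include at a time costs roughly the sum of the latencies. Fetching them concurrently costs roughly the latency of the slowest one.

```python
import asyncio
import time

# Hypothetical ESI include URLs and assumed backend latencies in seconds.
INCLUDES = {"/header": 0.05, "/nav": 0.05, "/cart": 0.05, "/footer": 0.05}


async def fetch_include(url: str) -> str:
    """Simulate one backend fetch for an ESI include."""
    await asyncio.sleep(INCLUDES[url])
    return f"<!-- {url} -->"


async def assemble_sequential(urls):
    # One include at a time: total wait is roughly the sum of all latencies.
    parts = []
    for url in urls:
        parts.append(await fetch_include(url))
    return "".join(parts)


async def assemble_parallel(urls):
    # All includes in flight at once: total wait is roughly the slowest fetch.
    # asyncio.gather returns results in the order the awaitables were passed.
    parts = await asyncio.gather(*(fetch_include(u) for u in urls))
    return "".join(parts)


def timed(assemble, urls):
    """Run one assembly strategy and return (body, elapsed seconds)."""
    start = time.perf_counter()
    body = asyncio.run(assemble(urls))
    return body, time.perf_counter() - start


if __name__ == "__main__":
    urls = list(INCLUDES)
    seq_body, seq_t = timed(assemble_sequential, urls)
    par_body, par_t = timed(assemble_parallel, urls)
    assert seq_body == par_body  # same page, assembled faster
    print(f"sequential: {seq_t:.3f}s  parallel: {par_t:.3f}s")
```

Because `gather` preserves ordering, both strategies produce the same page. How much of the speed-up carries over to a real site depends on the actual backend latencies and on how much of the response time is spent waiting on includes.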

EDGESTASH

Edgestash is a Mustache-inspired templating language that moves assembly logic from the client side to the edge.

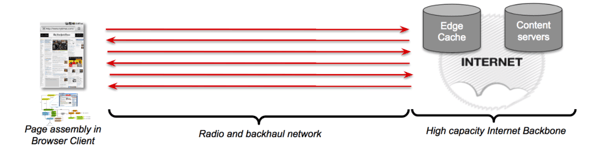

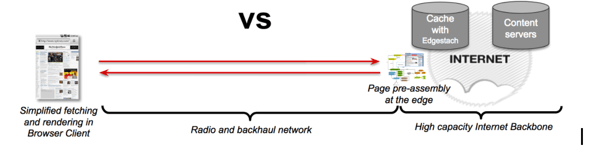

Whenever a web browser issues a request for a specific web page, it will take several round trips and some bandwidth before the browser collects all the necessary material (we are talking about templating, JavaScript libraries, JSON data and more) to render the page. Edgestash moves this burden to the edge of your whole architecture, assembling and fetching content to pre-render the page. This reduces the number of roundtrips and the bandwidth used, providing a faster experience.

Without Edgestash:

With Edgestash:

Let’s have a look at how Edgestash works, using a varnishtest (VTC) test case:

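```
# Mock backend: serves the Mustache template first, then the JSON data.
server s1 {
    rxreq
    expect req.url == "/page.es"
    txresp -body "Hello, {{first}} {{last}}!"

    rxreq
    expect req.url == "/page.json"
    txresp -body {
        {
            "last": "Foobar",
            "first": "Chris"
        }
    }
} -start

varnish v1 -vcl+backend {
    # ${topbuild} is a varnishtest macro; this path assumes the VMOD was built from source.
    import edgestash from "${topbuild}/lib/libvmod_edgestash/.libs/libvmod_edgestash.so";

    sub vcl_backend_response {
        if (bereq.url ~ "\.es$") {
            # Parse the backend response as an Edgestash (Mustache) template.
            edgestash.parse_response();
        } else if (bereq.url ~ "\.json$") {
            # Index the JSON response so templates can reference its fields.
            edgestash.index_json();
        }
    }

    sub vcl_deliver {
        if (req.url ~ "\.es$") {
            # Render the template with the JSON found at the matching .json URL.
            edgestash.execute(regsub(req.url, "\.es$", ".json"));
        }
    }
} -start

# Client: requests the template and checks the rendered result.
client c1 {
    txreq -url "/page.es"
    rxresp
    expect resp.status == 200
    expect resp.body == "Hello, Chris Foobar!"
} -run
```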

In this very simple example, the client c1 sends a request for the URL `/page.es`. Varnish Plus fetches the template `Hello, {{first}} {{last}}!` from the backend and parses the response using `edgestash.parse_response()`.

In `vcl_deliver`, Varnish calls `edgestash.execute(regsub(req.url, "\.es$", ".json"))`. This triggers a new backend fetch for the JSON file (`/page.json`), which is indexed by `edgestash.index_json()` in `vcl_backend_response` and then used to complete the Mustache template.

The final result will be “Hello, Chris Foobar!” as the response body.
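For readers who want to see the merge step in isolation, here is a rough Python sketch of Mustache-style variable substitution. It uses the same template and JSON as the test above. This illustrates the idea under simplifying assumptions and is not Edgestash's implementation. It handles only plain `{{name}}` variables and dotted `{{a.b}}` variables, and it renders missing keys as empty strings, as Mustache does. It skips sections, partials and HTML escaping. The `render` and `lookup` helpers are hypothetical names.

```python
import json
import re

# Matches simple Mustache-style variables such as {{first}} or {{user.name}}.
# Assumption: no sections ({{#...}}), partials ({{>...}}) or triple mustaches.
TAG = re.compile(r"\{\{\s*([A-Za-z_][\w.]*)\s*\}\}")


def lookup(data, dotted: str) -> str:
    """Resolve a (possibly dotted) key in parsed JSON; missing keys render as ''."""
    value = data
    for key in dotted.split("."):
        if not isinstance(value, dict) or key not in value:
            return ""
        value = value[key]
    return str(value)


def render(template: str, json_text: str) -> str:
    """Fill every {{name}} tag in the template with values from the JSON document."""
    data = json.loads(json_text)
    return TAG.sub(lambda m: lookup(data, m.group(1)), template)


if __name__ == "__main__":
    template = "Hello, {{first}} {{last}}!"          # body of /page.es
    doc = '{"last": "Foobar", "first": "Chris"}'     # body of /page.json
    print(render(template, doc))                     # Hello, Chris Foobar!
```

Doing this merge once at the edge means the browser receives finished HTML instead of fetching the template, the JSON and a templating library separately.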

As you can see, Varnish Plus combined with Edgestash handles the whole pre-rendering of the page.

Edgestash is currently being tested and will be part of the next Varnish Plus release.

These three features combined with caching will help you to improve your mobile content delivery performance and provide a better and more personalized user experience.

If you want to hear more about this, watch our recent on-demand webinar all about mobile optimization.