As a Solutions Architect, Web Developer or Performance Engineer, you have many ways to deliver web pages, applications and content over HTTP/S. But as you are aware, complexity grows quickly as the types of data, data sources, clients, and client locations increase while performance, reliability and budget requirements become more stringent. Two solutions that many employ to solve these challenges, both available as open source and commercial software, are NGINX and Varnish. Various resources cover the differences between them, but many are written from a very specific web serving point of view. This article will briefly define the differences between the two solutions, then cover 1) the underlying architectural approaches and their benefits, 2) the ideal characteristics of cache-centric use cases, and 3) the future of cache, content and edge delivery.

NGINX Started as a Web Server

NGINX is an open source and commercial software-based web server that can also be used as a load balancer, mail proxy, reverse proxy and HTTP cache. Despite its roots in web serving, NGINX is extremely versatile: it delivers content over various protocols and, as a result, supports a broad range of use cases. However, the main use case for NGINX is still web serving.

Varnish Started as a Web Accelerator/Cache

Varnish is an Open Source and Commercial software-based HTTP accelerator that can be used as an HTTP cache, content router, content delivery solution and runtime environment at the edge of an internal or external network. Its roots as a proxy make it highly customizable and extremely efficient for delivering any type of content over HTTP/S.

As with any job, using the right tool significantly affects how easily you can achieve your objectives. As mentioned in the introduction, many NGINX vs Varnish comparisons on the internet take a general “web-serving” view. However, NGINX has expanded beyond its roots as a web server and offers a broader set of features, including an HTTP cache. That cache is an add-on and is limited in functionality compared to a purpose-built solution like Varnish. The reason lies in the underlying architecture of each solution and the evolving needs of high concurrency, high throughput and low latency use cases. This is why many popular sites and web services in the world use NGINX and Varnish together.

Web Server Architecture Delivers Broad Feature Set, High Transaction Rate

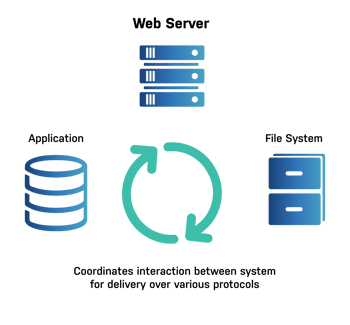

A web server, in this case NGINX, is where data originates, which is why it can also be called an origin server. NGINX works with the operating system and file system to find the requested segments of data, combine them and then package them up for delivery over the required protocol (HTTP/S, FTP, IMAP, POP3, SMTP…) using an asynchronous event-driven approach. Because it relies on the operating system page cache for memory management and on the file system for I/O, it is well suited to interfacing with application runtimes for programming languages like PHP and Python through the FastCGI protocol. But this interoperability comes at a cost: less ability to optimize memory management and storage, and to customize edge-side logic.

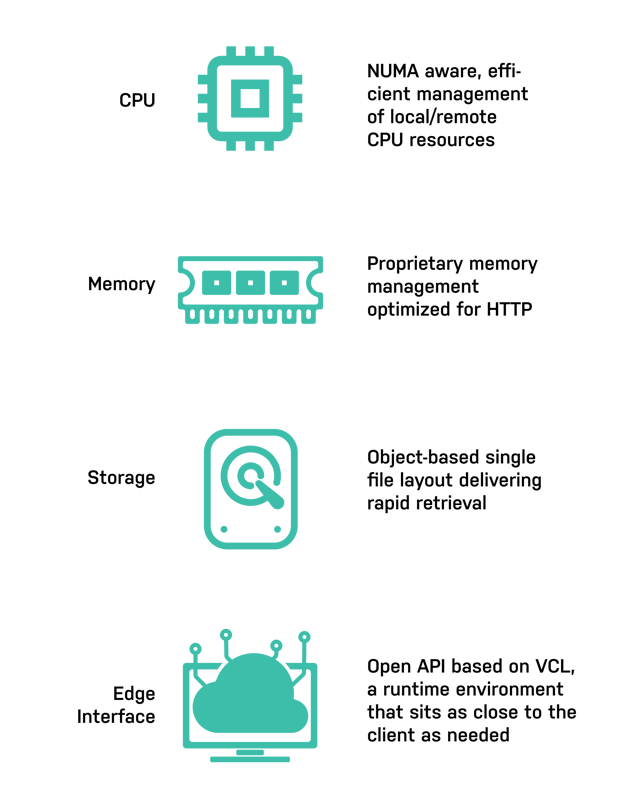

Web Accelerator, Caching Architecture Delivers Low Latency, High Throughput, Customizability

A web accelerator and cache, in this case Varnish, is a proxy by definition, which means that it sits between the client and the web server/origin server and ultimately delivers the requested data to the client. In contrast to a web server, Varnish does its own memory management with a custom object-based layout of data, and it uses threading to handle client requests. This results in very low latency (< 1 ms is possible) and 50% fewer copies of data in memory when compared to a web server. It also makes it possible to optimize the system-level coordination of data delivery from storage, memory, and specific network sockets, and to run edge-side includes and logic.

The result is the ability to approach the theoretical throughput limits of the underlying infrastructure while making efficient use of power. In addition, caching logic can be customized and executed on the ‘edge’ of an internal or external network. All of this, however, comes at the cost of the ability to handle broad web serving needs. Varnish chooses performance over features and continues to build upon its roots as a web accelerator and HTTP cache.

How Varnish Enables Low Latency, High Throughput and Customization

When a Purpose-Built Cache is Needed in Front of a Web Server

The ultimate goal of adding a web accelerator or cache is to leverage the aforementioned efficiency and low latency to deliver as much data as possible from the cache. A request served from the cache is called a “cache hit”. A “cache miss”, by contrast, requires the cache to request the data from the web server/origin server before delivering it. A single request to the web server isn’t an issue, but thousands or tens of thousands of requests, depending on their complexity, can quickly use up web server and origin resources, causing downtime and availability issues.
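The hit/miss distinction can be illustrated with a minimal sketch. This is not how Varnish or NGINX implement caching; `TinyCache`, `slow_origin` and the 60-second TTL are hypothetical names and values chosen only for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class TinyCache:
    """Hypothetical in-memory cache sitting in front of an origin fetch function."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], str],
        ttl_seconds: float = 60.0,  # assumed time-to-live, for illustration only
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}  # url -> (expiry, body)
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> str:
        now = self._clock()
        entry = self._store.get(url)
        if entry is not None and entry[0] > now:
            # Cache hit: served from memory, the origin is not contacted.
            self.hits += 1
            return entry[1]
        # Cache miss: fetch from the origin, then store the response.
        self.misses += 1
        body = self._fetch(url)
        self._store[url] = (now + self._ttl, body)
        return body


origin_calls = []


def slow_origin(url: str) -> str:
    """Stand-in for a web server/origin server building a page."""
    origin_calls.append(url)
    return f"<html>content for {url}</html>"


cache = TinyCache(slow_origin, ttl_seconds=60.0)
for _ in range(1000):
    cache.get("/home")

print(cache.hits, cache.misses, len(origin_calls))  # 999 1 1
```

In this sketch, 1,000 client requests for the same page result in a single request to the origin; the other 999 are cache hits.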

A concept that is difficult for most to measure is the complexity of building dynamic content, from the subsystem level all the way to the client, in a responsive and reliable way. The CPU, networking, operating system, file system, protocol, digital delivery method and client rendering all need to work together. Imagine the resources required for a web server to pass a single request for a dynamic page to an application runtime over FastCGI, for instance. These applications perform business logic, interact with databases and other data sources, and consume a considerable amount of server resources (memory, CPU and disk I/O). They are also rarely developed with scalability in mind. Now multiply this by a factor of 1,000 or 10,000 and you can see the need for a cache.
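To put rough numbers on that multiplication, the sketch below estimates how many requests still reach the origin for a given cache hit ratio. The function name and the hit ratios are hypothetical and chosen only for illustration; they are not measurements of Varnish or NGINX.

```python
def origin_requests(total_requests: int, hit_ratio: float) -> int:
    """Estimate requests that miss the cache and must be built by the origin."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0.0 and 1.0")
    return round(total_requests * (1.0 - hit_ratio))


# Assumed hit ratios, for illustration only.
for ratio in (0.0, 0.90, 0.99):
    print(f"hit ratio {ratio:.0%}: {origin_requests(10_000, ratio)} origin requests")
```

Under these assumptions, a 90% hit ratio would reduce 10,000 client requests to 1,000 origin requests, and a 99% hit ratio would reduce them to 100.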

This need to reach new levels of throughput, customize cache logic, deliver content and run logic at the edge is the reason that Varnish is often used instead of NGINX cache. The next blog in this series will dive deeper into these concepts and the resulting characteristics of ideal cache-centric use cases.