When delivering video over-the-top (OTT), the internet is the principal highway for distributing content. However, OTT streaming needs video chunks/fragments to flow faster and more predictably than the public internet often allows. Publicly available Wi-Fi hotspots are currently the preferred networks for video consumption, but poor network infrastructure leads to unbearable video buffering and latency.

A congested network slows down response times, and directly affects the quality of service and experience of your video applications and services. Most video consumers have experienced - and not at all enjoyed - this aspect of video streaming.

The challenge, then, is to find ways to improve response time over congested networks, as the amount of traffic is only going to increase.

Varnish and Bottleneck Bandwidth and Round-trip (BBR)

Varnish is well suited to video delivery: it provides high-performance HTTP delivery for video streaming and forms part of your media caching strategy, with a reliable and scalable architecture for distributing heavy video content. Naturally it helps if the network is in good shape; if it isn’t, client response time suffers, and some mitigation is required to minimize the latency introduced at the TCP/IP layers.

Varnish allows more in-depth configuration through a Varnish module (vmod-tcp), which minimizes the impact of a congested network by letting you choose a suitable TCP/IP congestion control algorithm from those proposed and developed over the years and released mostly in Linux kernels. But with BBR we can go beyond this.

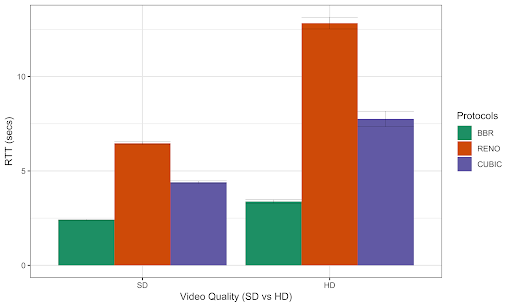

Results from a real OTT video scenario show a significant reduction in response time for both SD (standard definition) and HD (high definition) video. Combining Varnish with BBR demonstrably reduces latency when delivering video chunks over congested networks.

What is BBR?

BBR ("Bottleneck Bandwidth and Round-trip propagation time") is a relatively new congestion control algorithm developed by Google. It is designed to respond quickly to actual network congestion, which results in fair utilization of the available network bandwidth and more accurate end-to-end TCP/IP congestion control decisions.

BBR relies on a network's delivery rate (maximum recent bandwidth available) and minimum round-trip-time delay in order to decide how fast to send data over the network, minimizing bufferbloat effects during the content delivery process (learn more about BBR and bufferbloat).
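To make these two inputs concrete, here is a minimal sketch of how a bandwidth estimate and a minimum round-trip time combine into a bandwidth-delay product (BDP), the amount of data that can be in flight without building a queue. It is a simplified illustration of the idea, not the kernel implementation; the function name `bbr_estimates` and the sample values are hypothetical.

```python
def bbr_estimates(delivery_rates_bps, rtts_s):
    """Simplified BBR-style model (illustrative only).

    delivery_rates_bps: recent delivery-rate samples, in bits per second
    rtts_s:             recent round-trip-time samples, in seconds
    """
    if not delivery_rates_bps or not rtts_s:
        raise ValueError("need at least one sample of each kind")
    bottleneck_bw = max(delivery_rates_bps)  # maximum recent bandwidth
    min_rtt = min(rtts_s)                    # minimum recent round-trip time
    bdp_bytes = bottleneck_bw * min_rtt / 8  # bytes that fit "in the pipe"
    return bottleneck_bw, min_rtt, bdp_bytes


# Hypothetical samples, not measurements from this article.
bw, rtt, bdp = bbr_estimates([8e6, 10e6, 9e6], [0.050, 0.040, 0.045])
print(f"bandwidth={bw / 1e6:.0f} Mbit/s  min_rtt={rtt * 1000:.0f} ms  BDP={bdp:.0f} bytes")
```

Sending much more than one BDP of unacknowledged data only fills buffers along the path, which is the bufferbloat effect BBR tries to avoid.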

How easy is it to enable BBR in Varnish?

BBR is easy to deploy and enable from VCL. It only requires changes on the server side; video clients/players need no special support. BBR is available on distributions that ship a 4.10+ Linux kernel, which mostly means Ubuntu and Debian; otherwise a kernel update is required (see how to install BBR on CentOS 7).

To check which congestion control algorithms are available on your Linux server, run the following in a terminal:

sudo sysctl net.ipv4.tcp_available_congestion_control

Example output:

net.ipv4.tcp_available_congestion_control = reno cubic bbr
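If you want to automate this check, for example in a deployment script, the output line is easy to parse. The sketch below takes the `sysctl` output as a string; the helper names `available_algorithms` and `is_available` are hypothetical and not part of any Varnish tooling.

```python
def available_algorithms(sysctl_line):
    """Return the algorithm names listed after '=' in the sysctl output."""
    _, _, value = sysctl_line.partition("=")
    return value.split()


def is_available(sysctl_line, name="bbr"):
    """True if the named congestion control algorithm is listed."""
    return name in available_algorithms(sysctl_line)


line = "net.ipv4.tcp_available_congestion_control = reno cubic bbr"
print(available_algorithms(line))
print("BBR available:", is_available(line))
```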

Using BBR In VCL territory

vcl 4.1;

import tcp;

backend default { .host = "127.0.0.1"; .port = "8080"; }

sub vcl_recv {
    # Writes TCP_INFO into varnishlog.
    tcp.dump_info();

    # Enables BBR in your VCL.
    set req.http.x-tcp = tcp.congestion_algorithm("bbr");
}

Note that you can also experiment with different pacing settings for more dynamic pacing of outgoing traffic, and switch between different congestion control algorithms (see vmod-tcp docs for more details).

Checking in varnishlog that BBR is enabled

- VCL_Log tcpi: snd_mss=1448 rcv_mss=536 lost=0 retrans=0

- VCL_Log tcpi2: pmtu=1500 rtt=12042 rttvar=6021 snd_cwnd=10 advmss=1448 reordering=3

- VCL_Log getsockopt() returned: bbr
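When checking many connections, scanning these lines by eye gets tedious. As a rough sketch, the following script extracts the reported algorithm and the numeric TCP_INFO fields from log lines in the format shown above. The function name `parse_tcp_log` is hypothetical, and the parsing assumes the log format matches this sample exactly.

```python
import re


def parse_tcp_log(lines):
    """Return (algorithm, tcp_info) parsed from varnishlog VCL_Log lines."""
    algorithm = None
    tcp_info = {}
    for line in lines:
        match = re.search(r"getsockopt\(\) returned:\s*(\S+)", line)
        if match:
            algorithm = match.group(1)
            continue
        if "VCL_Log" in line and "tcpi" in line:
            # Collect every key=value pair with an integer value.
            for key, value in re.findall(r"(\w+)=(\d+)", line):
                tcp_info[key] = int(value)
    return algorithm, tcp_info


log_lines = [
    "- VCL_Log tcpi: snd_mss=1448 rcv_mss=536 lost=0 retrans=0",
    "- VCL_Log tcpi2: pmtu=1500 rtt=12042 rttvar=6021 snd_cwnd=10 advmss=1448 reordering=3",
    "- VCL_Log getsockopt() returned: bbr",
]
algorithm, tcp_info = parse_tcp_log(log_lines)
print("algorithm:", algorithm)
print("rtt:", tcp_info["rtt"], "retrans:", tcp_info["retrans"])
```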

How about performance improvements?

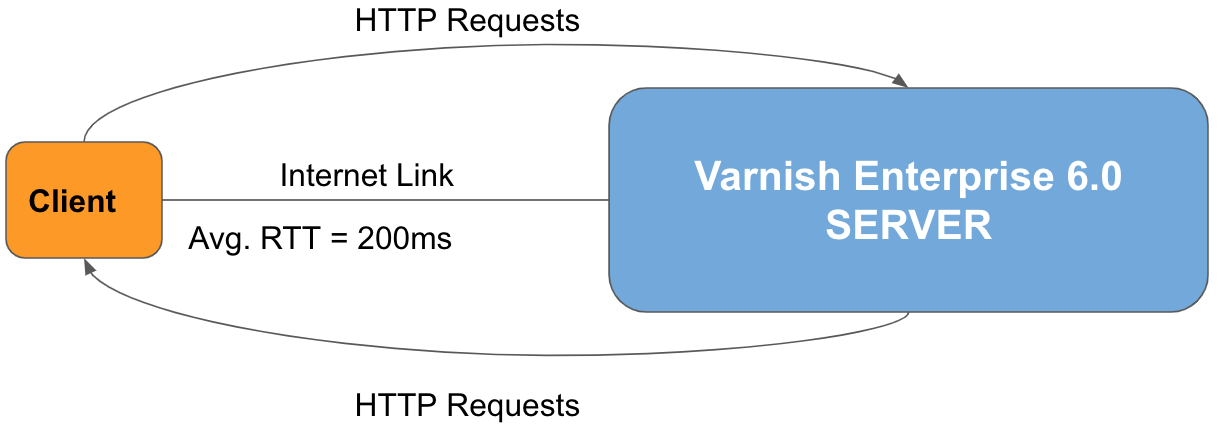

Now that we know how easy it is to enable BBR in your VCL, let’s analyze some promising BBR results against the CUBIC and RENO standards. The evaluation scenario consists of a client and a server: the client consumes different video chunks over the internet, and Varnish acts as origin protection, serving video chunks directly from the edge.

Client (CPU: 2.2 GHz Intel Core i7):

- Is connected to a Wi-Fi hotspot and sends requests to one of our Varnish Enterprise servers, running Varnish Enterprise version 6.0.

- Requests two different chunk sizes, 1.2 MB (SD) and 5 MB (HD), using MPEG-DASH (.m4s) ABR technology.

- An additional internet link latency of 200 ms is added on top of the client-to-server communication.
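For readers who want to run a similar client-side measurement, the sketch below times repeated downloads of a single chunk. It is not the tool used for the results in this post; the function names and the example URL in the usage note are hypothetical.

```python
import time
import urllib.request


def fetch_chunk(url):
    """Download one chunk and return its size in bytes."""
    with urllib.request.urlopen(url) as response:
        return len(response.read())


def time_fetch(fetch, url, samples=100):
    """Call fetch(url) repeatedly and return the elapsed times in seconds."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        fetch(url)
        timings.append(time.perf_counter() - start)
    return timings
```

A run against your own server could look like `time_fetch(fetch_chunk, "https://example.com/video/chunk-1.m4s")`, where the URL is a placeholder for one of your SD or HD chunks.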

Varnish Enterprise 6.0 Server (CPU: Intel Gold 6140 @ 2.30GHz):

- Varnish-plus-6.0.5r1 running on CentOS 7.5 (kernel version 5.3.8-1.el7.elrepo.x86_64).

- Runs the VCL shown under "Using BBR In VCL territory", which enables switching between different TCP/IP congestion control protocols.

- BBR, RENO and CUBIC were the set of congestion protocols evaluated.

- Tested with the FQ (Fair Queue) packet scheduler enabled.

(100 samples were taken for each protocol; results are reported with a 95% confidence interval.)
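The post does not state how the confidence interval was computed. One common approach for a sample of this size is a normal approximation around the sample mean, sketched below. The function name `confidence_interval` is hypothetical, and the input values are placeholders rather than data from this evaluation.

```python
import math
import statistics


def confidence_interval(samples, confidence=0.95):
    """Return (mean, low, high) using a normal approximation."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.fmean(samples)
    stdev = statistics.stdev(samples)  # sample standard deviation
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * stdev / math.sqrt(len(samples))
    return mean, mean - half_width, mean + half_width


# Placeholder response times in seconds, NOT data from this article.
placeholder = [1.0, 2.0, 3.0, 4.0, 5.0]
mean, low, high = confidence_interval(placeholder)
print(f"mean={mean:.2f}s  95% CI=[{low:.2f}, {high:.2f}]")
```

Non-overlapping intervals between two protocols are a reasonable first indication that the difference in response time is not just noise.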

Varnish with BBR is more stable and reduces request latency for SD (chunk size ~1.2 MB) and HD (chunk size ~5 MB) video chunks by a factor of two to three. The comparison clearly shows the advantage of Varnish with BBR enabled in the VCL (best case) over Varnish with RENO (worst case) and Varnish with CUBIC (acceptable case). From these results we conclude that Varnish with BBR helps reduce latency over congested networks in OTT video streaming scenarios.

Minimize latency - improve video QoE

Varnish and BBR together are a great combination proven to minimize unexpected video latency and to increase the quality of experience for video consumers with less buffering, especially for OTT video streaming delivery that relies on the client’s internet traffic for video edge delivery. Many Varnish customers have experienced this performance boost.

Varnish and BBR for OTT video use cases might be right for your video infrastructure; feel free to get in touch with us to evaluate your case. Here we have used the Varnish module vmod-tcp, which is part of the Varnish Enterprise module family (see VMODS). If you are a Varnish Enterprise customer, you already have access to it and can simply add “import tcp;” to your VCL. If you’re not already a Varnish Enterprise customer, here are some of our recommended readings, or you can always contact us to speak to an expert:

- Varnish Enterprise Streaming Server

- Three features that make Varnish ideal for video streaming

- Massive Storage Engine for caching huge video-on-demand (VoD) catalogs

Note: Tuning the initial congestion window parameter, “initcwnd” (see Initcwnd settings of major CDN providers), may improve performance as well. For now, we have focused on measuring Varnish and BBR without tuning any TCP/IP or kernel parameters. We would welcome hearing about your experiences in this area.

Stay tuned, as this is the first in a series of blog posts tackling OTT video performance achievements including low latency ABR (adaptive bitrate) video technologies with SSL/TLS security transmissions.